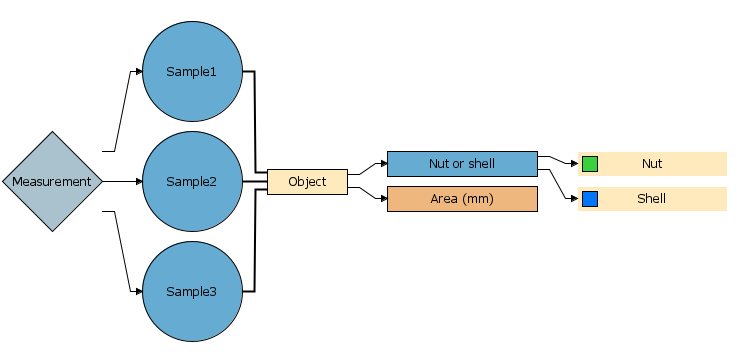

Object detection using pre-trained models in ONNX format. The supported detection algorithms are listed under the Model type parameter.

Note that instance segmentation, where individual pixels are masked, is not supported.

See ONNX image segmentation for more information.

Tip

In the Analyze tree, add the Segmentation label descriptor and select a text file containing the names of the objects in the ONNX file.
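A common convention for such label files, assumed here and not confirmed by Breeze, is one class name per line, where the line position (starting at 0) matches the class index the model outputs. A minimal sketch of reading a file in that assumed format; `load_labels` and `label_for` are hypothetical helpers:

```python
from pathlib import Path


def load_labels(path: str) -> list[str]:
    """Read one class name per line (assumed format, not a Breeze specification).

    Blank lines are ignored, so the i-th non-empty line is the name for class index i.
    """
    text = Path(path).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def label_for(class_id: int, labels: list[str]) -> str:
    """Map a model class index to its name, falling back to the index as text."""
    return labels[class_id] if 0 <= class_id < len(labels) else str(class_id)
```

If the names in the file are out of order relative to the model's class indices, objects will be labelled with the wrong names, so the file should follow the same ordering the model was trained with.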

Parameters

Model type

The .onnx model type. Available options are:

- Faster R-CNN
- YOLO v4
- YOLO v5
- YOLO v8
- YOLO v11

Onnx file

Select the pre-trained ONNX file for the selected model type.

Source

The image the ONNX model is applied to: either the pseudo-RGB image or a painted prediction image.

Confidence

The minimum confidence level the model must assign for an object to be categorized.
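Conceptually, the Confidence parameter acts as a threshold on each detection's score. The sketch below illustrates that idea; the `Detection` record is hypothetical, and treating a score equal to the threshold as accepted is an assumption, not documented Breeze behaviour:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detection record: class index and confidence score (0-1)."""
    class_id: int
    confidence: float


def filter_by_confidence(detections: list[Detection], threshold: float) -> list[Detection]:
    """Keep detections whose confidence is at least the threshold (inclusive, assumed)."""
    return [d for d in detections if d.confidence >= threshold]
```

A higher threshold gives fewer but more certain objects; a lower one finds more objects at the cost of more false positives.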

Normalize the pixel values

Only applicable to Faster R-CNN.

If Normalize and center is selected, the pixel values are scaled to 0-1, then the mean is subtracted and the result is divided by the standard deviation. Available options are:

- No normalization
- Normalize and center
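A per-pixel sketch of the two options as described above. Several things here are assumptions: "No normalization" is taken to pass values through unchanged, the input is assumed to be 8-bit (so scaling to 0-1 divides by 255), and the per-channel mean and standard deviation are parameters because the values Breeze actually uses are not given on this page:

```python
from typing import Sequence


def preprocess(pixel: Sequence[float], normalize_and_center: bool,
               mean: Sequence[float], std: Sequence[float],
               max_value: float = 255.0) -> list[float]:
    """Preprocess one pixel given as one value per channel.

    No normalization (assumed): values are returned unchanged.
    Normalize and center: scale to 0-1, subtract the channel mean, divide by the channel std.
    max_value=255 assumes 8-bit input; mean/std are placeholders, not Breeze's values.
    """
    if not normalize_and_center:
        return [float(v) for v in pixel]
    if not (len(pixel) == len(mean) == len(std)):
        raise ValueError("pixel, mean and std must have one entry per channel")
    return [(v / max_value - m) / s for v, m, s in zip(pixel, mean, std)]
```

The option should match how the model was trained: a Faster R-CNN model trained on centered inputs will generally perform poorly if it is fed raw pixel values, and vice versa.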

Image dimension order

Only applicable to Faster R-CNN.

The order of the input image dimensions:

- Width / Height
- Height / Width
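The difference between the two options is which spatial axis comes first in the model input. A minimal single-channel sketch, assuming the image is stored row by row (indexed `image[y][x]`, that is Height / Width) and omitting batch and channel dimensions; the order strings are hypothetical names for the two options:

```python
def to_model_layout(image: list[list[float]], order: str) -> list[list[float]]:
    """Return a 2-D single-channel image in the requested dimension order.

    `image` is indexed image[y][x] (Height / Width).
    "width/height" swaps the axes so the result is indexed result[x][y].
    """
    if order == "height/width":
        return [list(row) for row in image]
    if order == "width/height":
        # zip(*image) yields the columns of the image, i.e. a transpose.
        return [list(col) for col in zip(*image)]
    raise ValueError(f"unknown dimension order: {order!r}")
```

Choosing the wrong order does not necessarily cause an error for square inputs, but the model then sees a transposed image, so detections can be poor or misplaced.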

Output layer to use

Only applicable to YOLO v5.

The type of output layer to use:

- Sigmoid layer
- Detection layer

Min area

The minimum number of pixels for an object to be included.

Max area

The maximum number of pixels for an object to be included.

If set to 0, no maximum area is applied.
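The Min area and Max area rules combine into a single check per object. A sketch, assuming both limits are inclusive (this page does not say whether an object exactly at a limit is kept):

```python
def keep_by_area(area: int, min_area: int, max_area: int) -> bool:
    """Return True if an object's pixel count passes Min area / Max area.

    max_area == 0 means no upper limit. Inclusive bounds are an assumption.
    """
    if area < min_area:
        return False
    return max_area == 0 or area <= max_area
```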

Object filter

Use an expression to further exclude unwanted objects based on shape.

Operators that can be used in expressions include the data operators wNNN and bMMM for referring to wavelength bands, the range operator : for averaging data, standard arithmetic operators (+, -, /, * …), comparison operators (=, >, < …), and some mathematical functions and constants.

Breeze does not validate the expression until you click Apply changes to apply it to data.

| Data operator | Description |
|---|---|
| `wNNN` | Wavelength lookup operator that finds the wavelength band closest to the provided number. A setting controls how far a wavelength may deviate from the requested value and still be considered a match. If there is no matching data, an error is displayed when the workflow is applied to data. Learn more in Wavelength matching. Example of this syntax: … |
| `bMMM` | Band index operator. If the index … |
| `:` | Average range operator that returns the average value for a range of wavelength bands. For example: … |
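The wavelength lookup and range-averaging behaviour described in the table can be sketched as follows. This is an illustration only: `tolerance` stands in for the wavelength matching setting, its unit is assumed to be the same as the band wavelengths, and the functions are hypothetical, not Breeze's implementation or expression syntax:

```python
def find_band(wavelengths: list[float], target: float, tolerance: float) -> int:
    """Return the index of the band closest to target (the wNNN lookup idea).

    Raises ValueError if even the closest band is farther away than tolerance,
    mirroring the error Breeze reports when there is no matching data.
    """
    if not wavelengths:
        raise ValueError("no wavelength bands available")
    index = min(range(len(wavelengths)), key=lambda i: abs(wavelengths[i] - target))
    if abs(wavelengths[index] - target) > tolerance:
        raise ValueError(f"no band within {tolerance} of {target}")
    return index


def range_average(spectrum: list[float], wavelengths: list[float],
                  start: float, end: float, tolerance: float) -> float:
    """Average the spectrum from the band matching start to the band matching end (inclusive)."""
    i = find_band(wavelengths, start, tolerance)
    j = find_band(wavelengths, end, tolerance)
    lo, hi = min(i, j), max(i, j)
    values = spectrum[lo:hi + 1]
    return sum(values) / len(values)
```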

Properties that can be used in the expression:

- Area
- Length
- Width
- Circumference
- Regularity
- Roundness
- Angle
- D1
- D2
- X
- Y
- MaxBorderDistance
- BoundingBoxArea

For details on each available property, see Object properties Details.
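Breeze evaluates the filter expression itself; the sketch below only illustrates the idea of filtering objects by their shape properties. It assumes that objects for which the expression is true are kept (this page does not state whether a true result keeps or excludes an object), represents the expression as a Python predicate, and uses made-up property values:

```python
from typing import Callable

ObjectProps = dict[str, float]


def apply_object_filter(objects: list[ObjectProps],
                        keep: Callable[[ObjectProps], bool]) -> list[ObjectProps]:
    """Return the objects for which the predicate is true.

    `keep` stands in for a Breeze filter expression; 'true means keep' is an assumption.
    """
    return [obj for obj in objects if keep(obj)]
```

For example, a predicate such as `lambda o: o["Area"] > 100 and o["Roundness"] > 0.5` would keep only large, fairly round objects.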

Shrink

Removes the specified number of pixels from the border of each detected object.
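Removing border pixels corresponds to a morphological erosion. A sketch on an object stored as a set of (row, column) pixels, assuming a 4-connected neighbourhood (Breeze may use a different neighbourhood):

```python
def shrink(pixels: set[tuple[int, int]], n: int) -> set[tuple[int, int]]:
    """Remove n layers of border pixels from an object.

    A pixel is on the border if any of its 4-connected neighbours is outside the object.
    The 4-connectivity is an assumption made for this sketch.
    """
    current = set(pixels)
    for _ in range(n):
        # Keep only pixels whose four neighbours are all inside the current object.
        current = {
            (r, c) for (r, c) in current
            if {(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)} <= current
        }
    return current
```

Small objects can disappear entirely when the shrink value is large relative to their size, which in turn affects the Min area check.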

Separate

Controls whether detected objects are kept separate or merged. Available options are:

- Normal: objects can be either separate or combined.
- Separate adjacent objects: all objects are defined separately.
- Merge all objects into one: all objects are defined as one object.
- Merge all objects per row: all objects within each row segmentation are defined as one object.
- Merge all objects per column: all objects within each column segmentation are defined as one object.

Max objects

The maximum number of objects in the image. Objects are sorted by confidence and only the first N are kept, where N is the Max objects value.
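A sketch of the Max objects rule, assuming "sorted by confidence" means highest confidence first. Detections are hypothetical (name, confidence) pairs, and how Breeze handles a value of 0 is not covered here:

```python
def top_by_confidence(detections: list[tuple[str, float]],
                      max_objects: int) -> list[tuple[str, float]]:
    """Sort (name, confidence) pairs by confidence, highest first, and keep the first max_objects."""
    ranked = sorted(detections, key=lambda d: d[1], reverse=True)
    return ranked[:max_objects]
```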

Inverse

✅ Includes the opposite of the sample specified in the Deep learning image model.

⬜ Includes the sample specified in the Deep learning image model.

Link

Only visible when applicable

Links the output objects from two or more segmentations to the top segmentation. Descriptors can then be added to the common object output and are calculated for objects from all segmentations.

The segmentations must be at the same level to be available for linking.