Goal

In this tutorial, you will analyze hyperspectral images of plastic samples. Your goal is to learn how to use Breeze to create a machine learning model that can classify different types of plastic.

The data

The data consist of two images containing samples of five different types of plastic.

- Polyethylene terephthalate bottle (PET BOTTLE)
- Polyethylene terephthalate sheet (PET SHEET)
- Polyethylene terephthalate glycol (PET G)
- Polyvinyl chloride (PVC)
- Polycarbonates (PC)

The tutorial images contain samples of known plastic type, which will be used as the training set and the test set. The spectral acquisition was carried out using a HySpex SWIR-384 camera, with a spectral range of 930 - 2500 nm. The plastic samples were placed on a moving stage and broadband halogen lamps were used as illumination sources. The data used in this tutorial has been reduced using average binning, 2 times spatially and 4 times spectrally, leaving the images with 192 pixels across the field of view and 72 spectral bands. This was done to reduce the size of the data files for downloading.
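To make the binning step concrete, here is a minimal Python sketch of average binning on a single scan line. It is an illustration only, not the tool that was used to prepare the tutorial data. The original sizes of 384 pixels and 288 bands are inferred from the numbers above (192 x 2 and 72 x 4), and the function names and pixel values are made up.

```python
def bin_average(values, factor):
    """Average consecutive groups of `factor` values."""
    if len(values) % factor != 0:
        raise ValueError("length must be a multiple of the binning factor")
    return [sum(values[i:i + factor]) / factor
            for i in range(0, len(values), factor)]


def bin_line(line, spatial_factor=2, spectral_factor=4):
    """Bin one scan line given as a list of pixels, each a list of band values."""
    if len(line) % spatial_factor != 0:
        raise ValueError("pixel count must be a multiple of the spatial factor")
    # Spectral binning: average neighbouring bands within each pixel.
    spectrally_binned = [bin_average(pixel, spectral_factor) for pixel in line]
    # Spatial binning: average neighbouring pixels band by band.
    binned = []
    for i in range(0, len(spectrally_binned), spatial_factor):
        group = spectrally_binned[i:i + spatial_factor]
        binned.append([sum(band) / spatial_factor for band in zip(*group)])
    return binned


# Synthetic scan line (hypothetical values): 384 pixels with 288 bands each.
line = [[float(p + b) for b in range(288)] for p in range(384)]
binned = bin_line(line)
print(len(binned), len(binned[0]))  # 192 72
```

Each binned value is the mean of 2 x 4 = 8 original values, so the file shrinks by a factor of eight.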

In this tutorial you will learn how to:

- Use “Manual” segmentation to select samples in an image with your mouse; these samples will be used as the training set
- Use “Grid and Inset” segmentation to add additional data points for the training
- Train a machine learning classification model
- Classify the images using your machine learning model
- Add additional training data to your model, then retrain it and apply it to your image
- Set up a real-time analysis workflow with object segmentation and classification and run it using the “Simulator camera”

Download tutorial image data

Start Breeze.

Optional: change to dark mode by pressing the “Switch to Dark mode” button.

You will see the following view (if you already have a Project in Analyzer press the “Add” button in the lower-left corner).

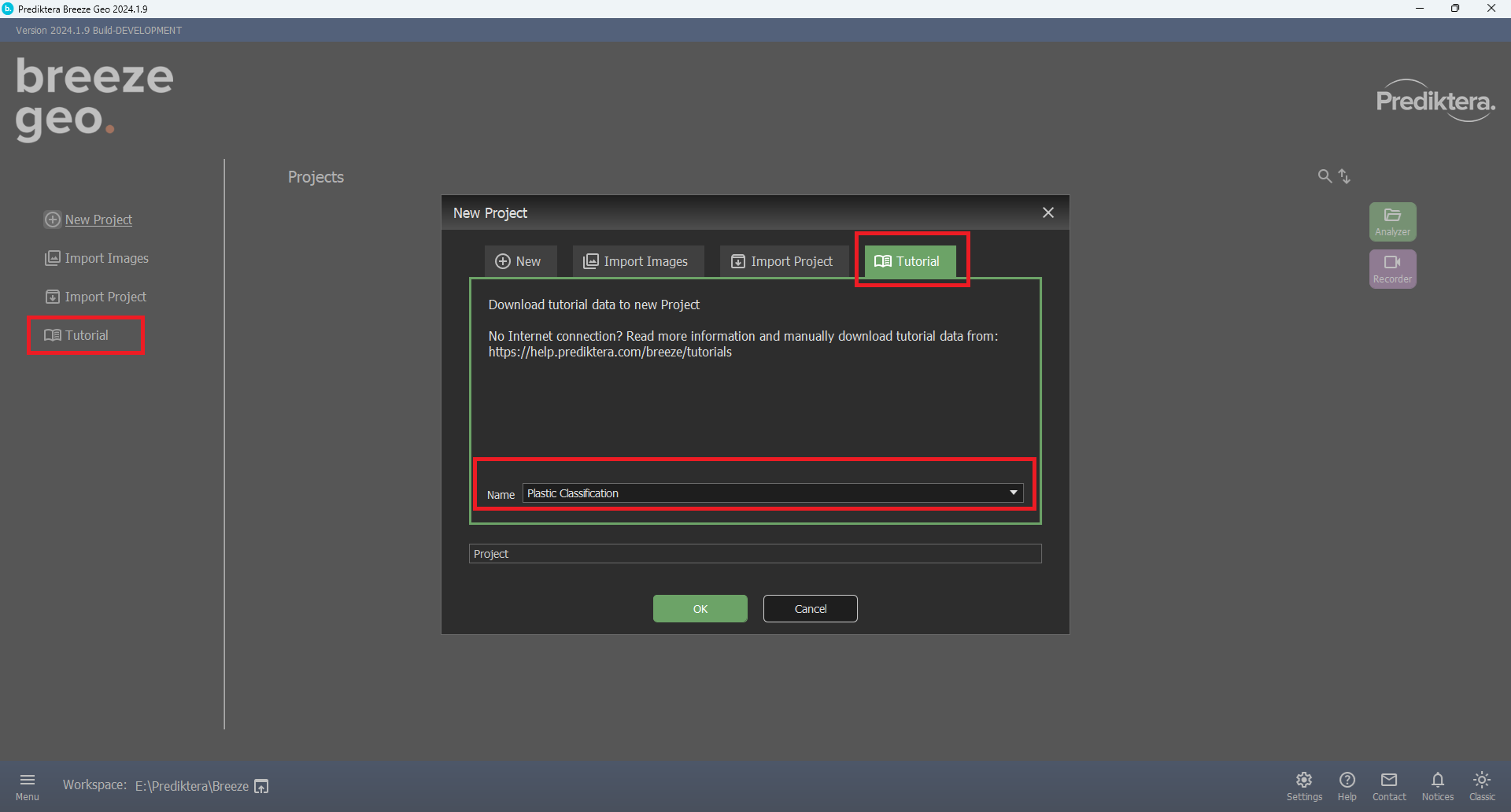

Select the “Tutorial” tab.

Select “Plastic Classification” in the “Name” drop-down menu.

Press “OK” to start downloading the image data.

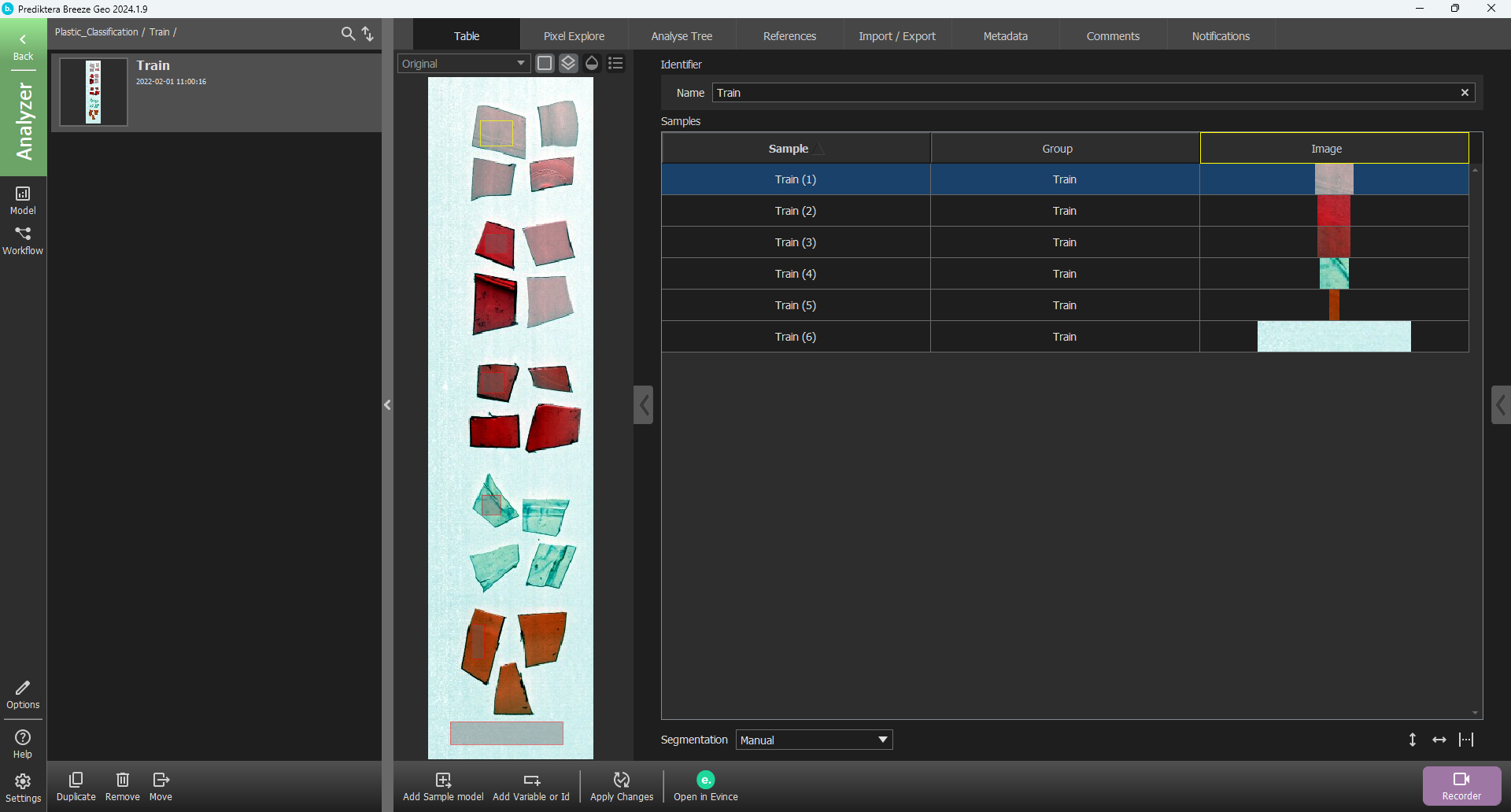

After the tutorial data is downloaded you will see the following table:

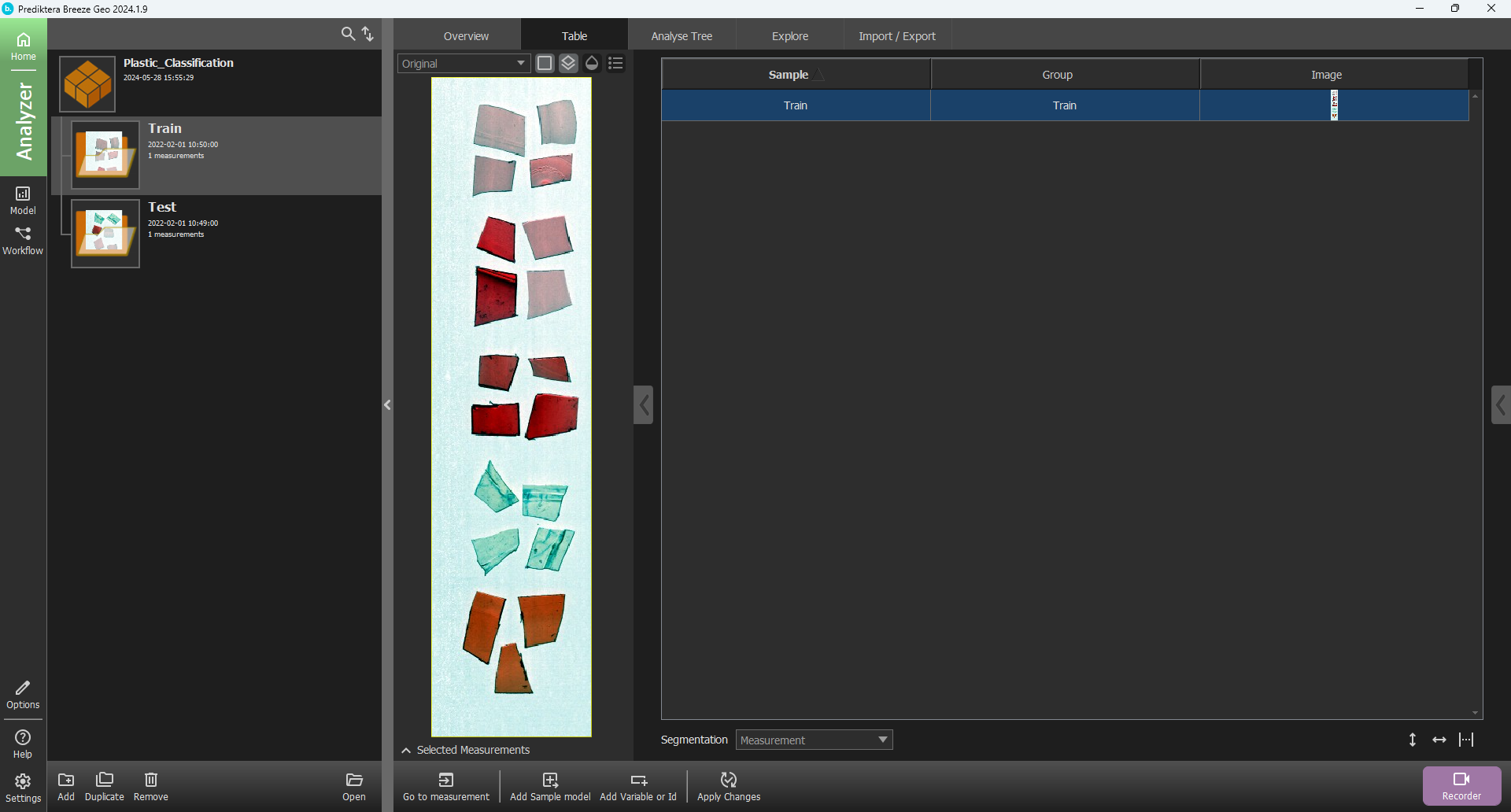

A project called “Plastic_Classification” has now been created. It includes one training image and one test image. You can click on a row in the table to see the preview image (pseudo-RGB) for each image.

Click on the “Open” button to open the project or double-click on the project on the left.

The Group level should look like this:

The image data in the project is organized into two groups called “Train” and “Test”.

Select the Train group in the left menu and press the “Open” button again.

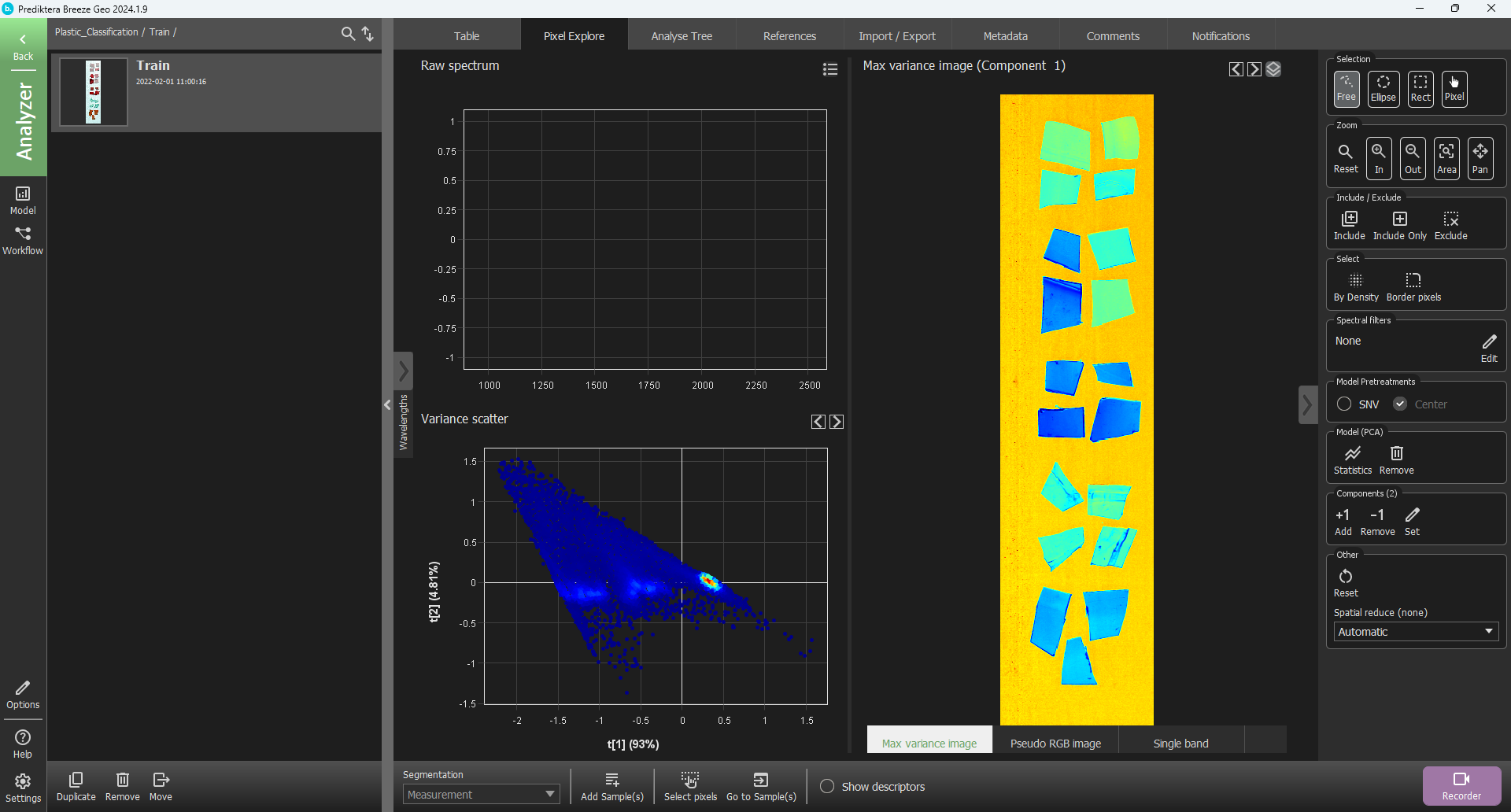

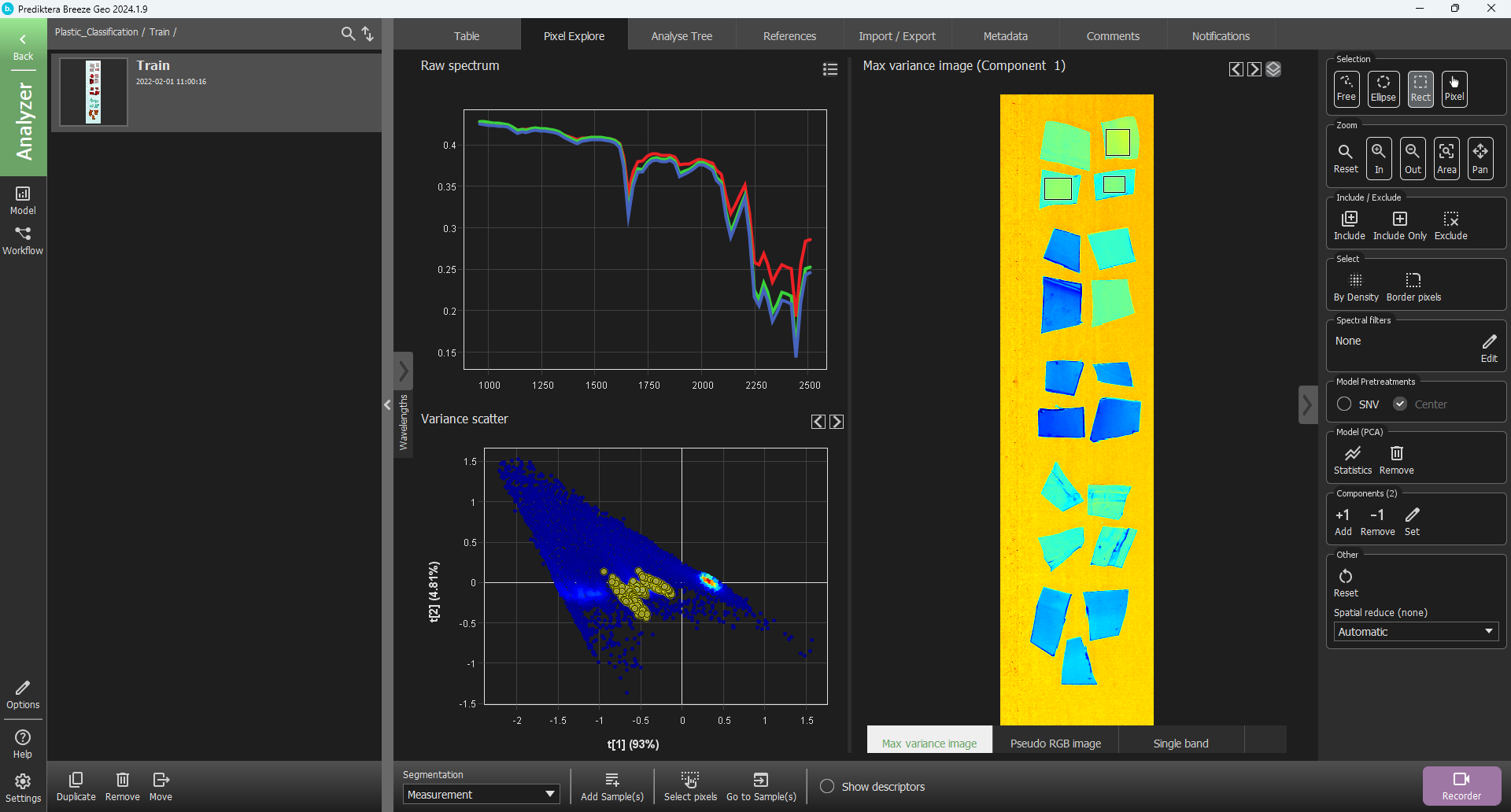

Click on the “Pixel Explore” tab.

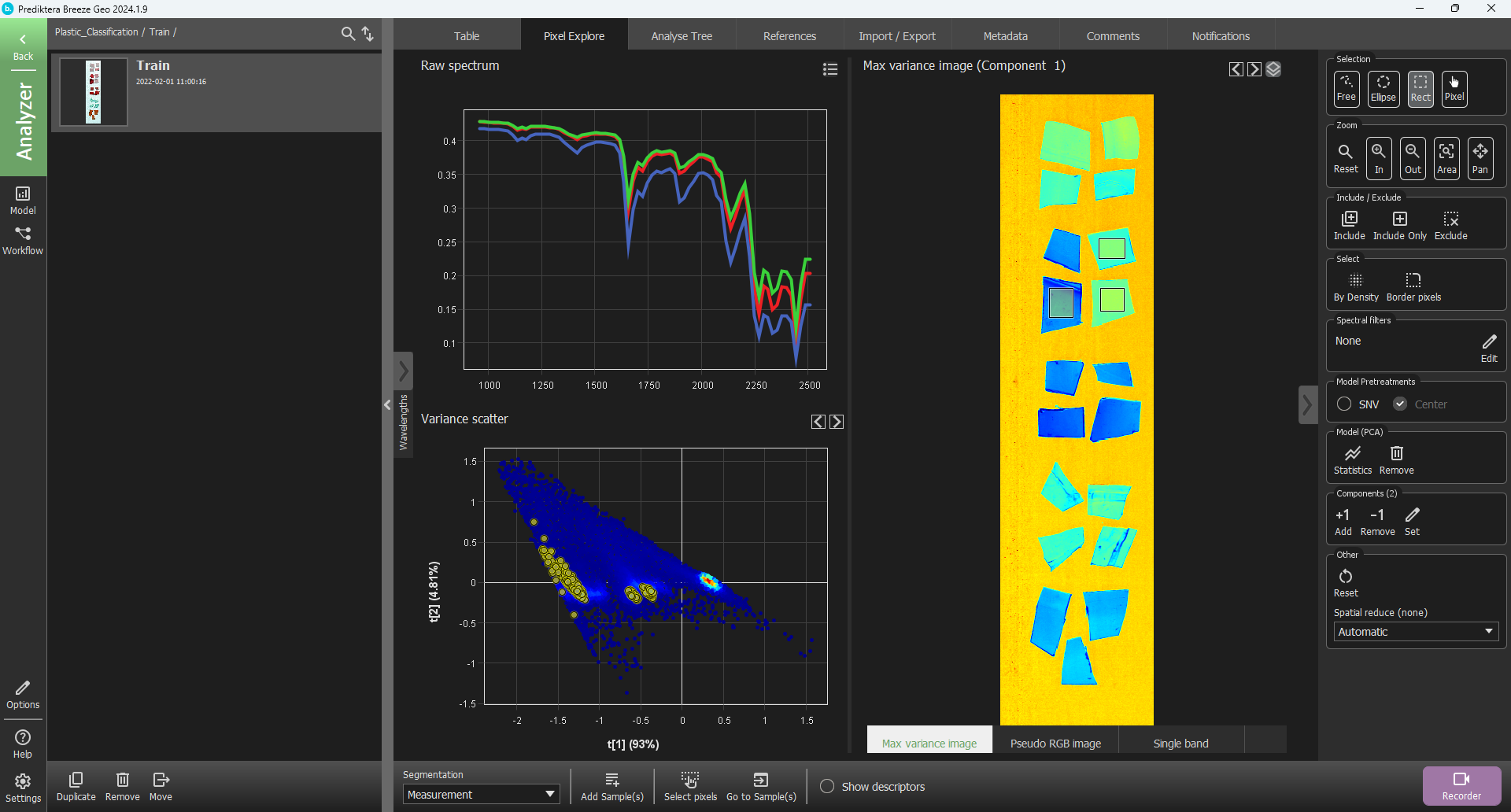

To do a quick analysis of the spectral variation in the image, a PCA model has been created based on all pixels in the image. Each point in the “Variance scatter” plot corresponds to a pixel in the image. The points in the scatter plot are clustered based on spectral similarity. The color of the points in the scatter plot is based on density (i.e. red = many points close to each other).

The “Max variance image” is colored by the variation in the 1st component of the PCA model (the X-axis in the scatter plot, t1), and visualizes the biggest spectral variation in the image. In this case, this is the difference between the sample objects and the background.
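The idea behind the first component (t1) can be sketched in a few lines of Python. This is a simplified stand-in, not Breeze's implementation: it finds only the first principal component, using power iteration on mean-centered spectra. The function name, the four-band "spectra" and their values are all hypothetical.

```python
import math


def first_pc_scores(spectra, iterations=100):
    """Return (scores, loading) of the first principal component."""
    n, bands = len(spectra), len(spectra[0])
    mean = [sum(s[b] for s in spectra) / n for b in range(bands)]
    centered = [[s[b] - mean[b] for b in range(bands)] for s in spectra]

    # Arbitrary non-zero starting direction, normalized to unit length.
    loading = [1.0 / math.sqrt(bands)] * bands
    for _ in range(iterations):
        scores = [sum(x * w for x, w in zip(row, loading)) for row in centered]
        # Multiplying by the transposed data pulls the direction towards
        # the axis of largest variance.
        direction = [sum(scores[i] * centered[i][b] for i in range(n))
                     for b in range(bands)]
        norm = math.sqrt(sum(v * v for v in direction))
        loading = [v / norm for v in direction]

    scores = [sum(x * w for x, w in zip(row, loading)) for row in centered]
    return scores, loading


# Made-up pixels: six dark background pixels and four brighter plastic pixels.
background = [[v + 0.005 * i for v in (0.10, 0.11, 0.10, 0.12)] for i in range(6)]
plastic = [[v + 0.005 * i for v in (0.60, 0.40, 0.55, 0.30)] for i in range(4)]

scores, loading = first_pc_scores(background + plastic)
t1_background, t1_plastic = scores[:6], scores[6:]
separated = (max(t1_background) < min(t1_plastic)
             or max(t1_plastic) < min(t1_background))
print(separated)  # True
```

Because the largest variation in this toy data is the difference between background and plastic, the t1 scores of the two groups do not overlap. Coloring each pixel by its t1 score would therefore highlight the objects against the background, which is what the “Max variance image” shows.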

Hold down the left mouse button to select a cluster of points and see where these pixels are located in the image. Move the mouse around in the image to see the spectral profile for individual pixels, or make a selection to see the average spectrum for several pixels.

Select training data

The training data consists of five different types of plastic as mentioned earlier.

- PET Bottle
- PET Sheet
- PET G
- PVC
- PC

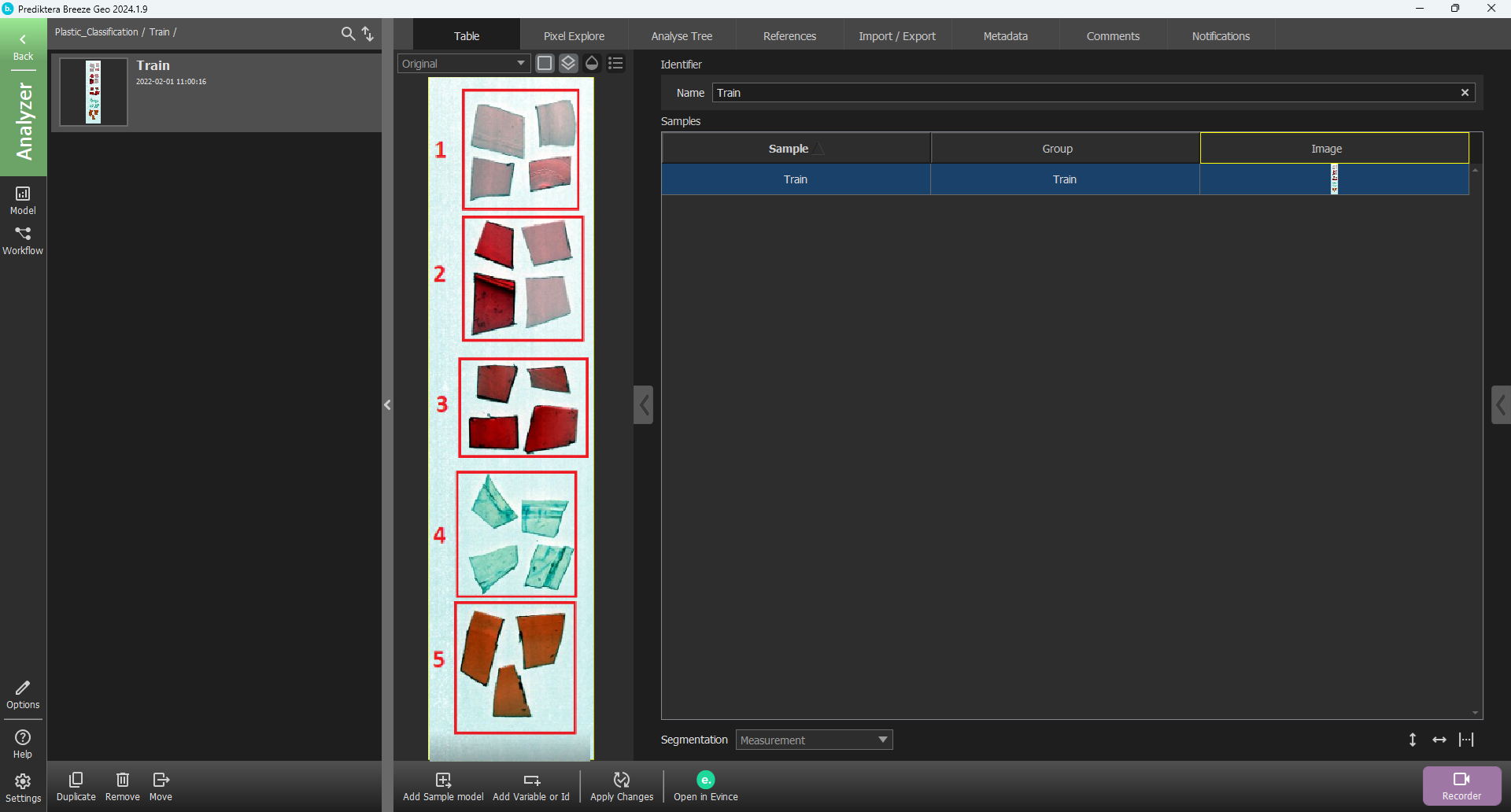

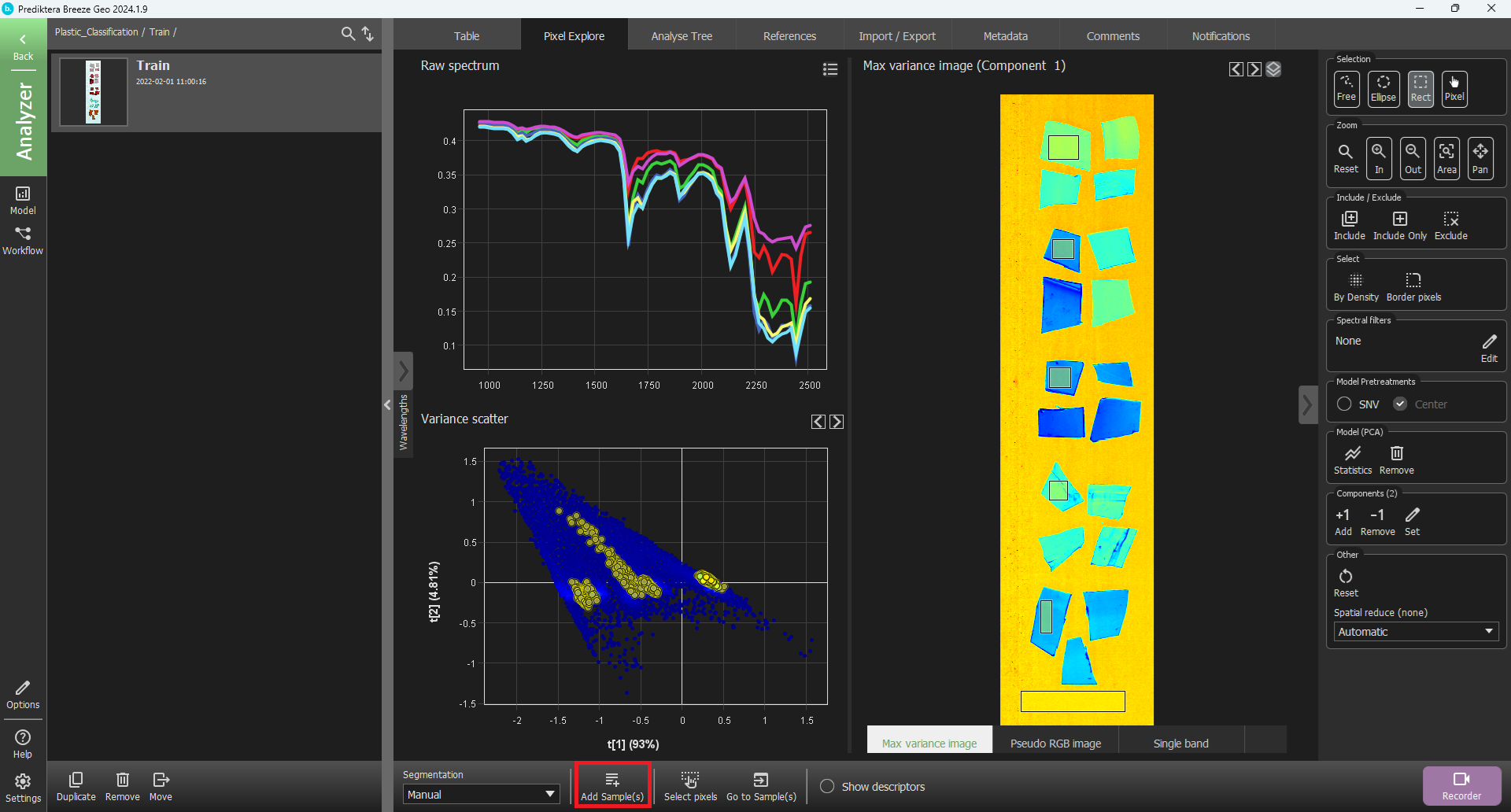

To get the data needed from each class to train the model, we will manually select areas in Pixel Explore. Choose the selection tool “Rect”, which draws rectangular areas.

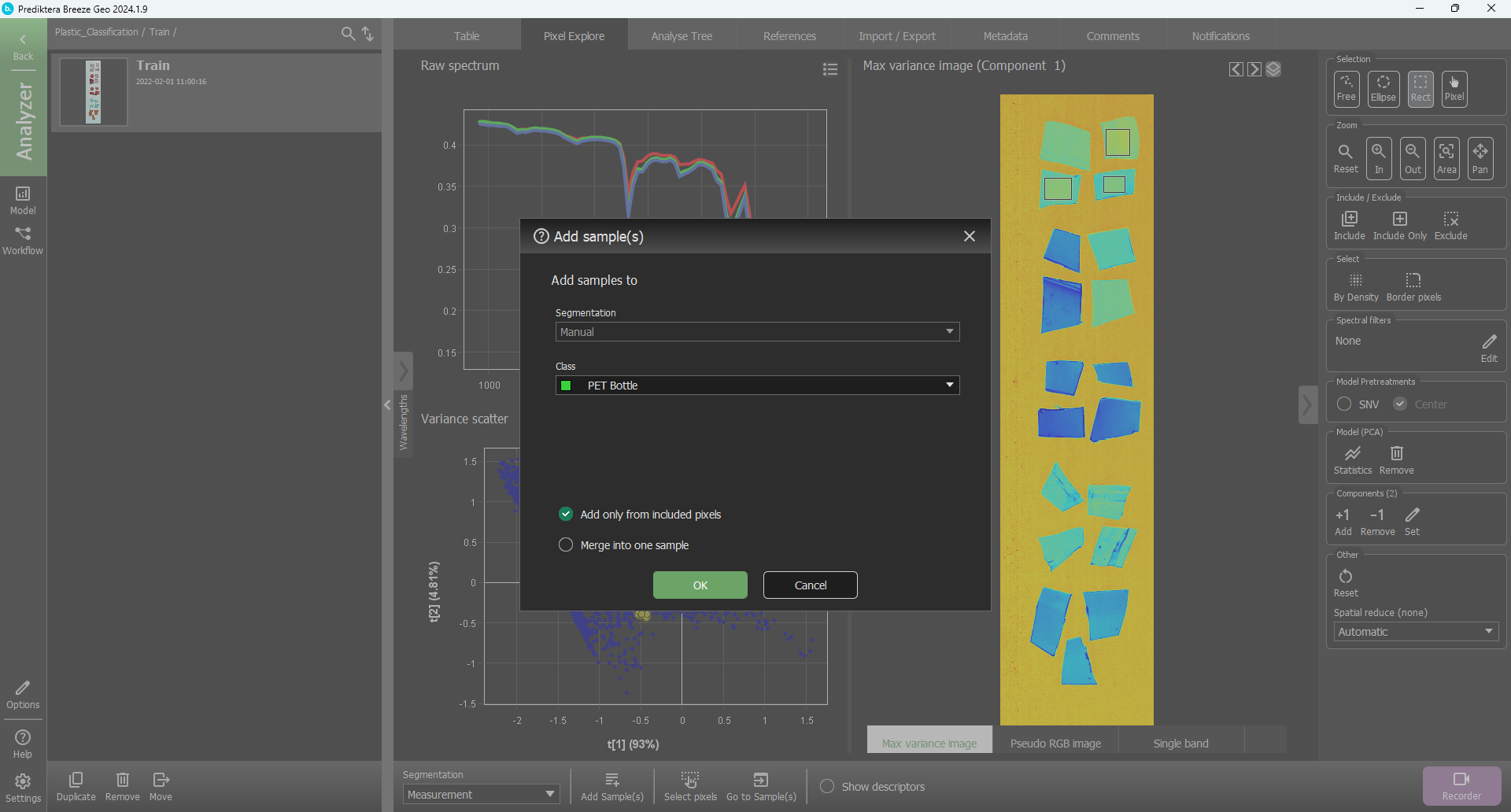

Now we will select areas in the “Max variance image” corresponding to the five different plastic types and the background. To select more than one area in the image, hold down “Ctrl” on your keyboard. To zoom, use the scroll wheel on your mouse. To pan the zoomed image, hold down the scroll wheel and move the image. First, select one area from each of the five plastic types and one from the background. When you have selected all six areas, press “Add Sample(s)” and then press OK.

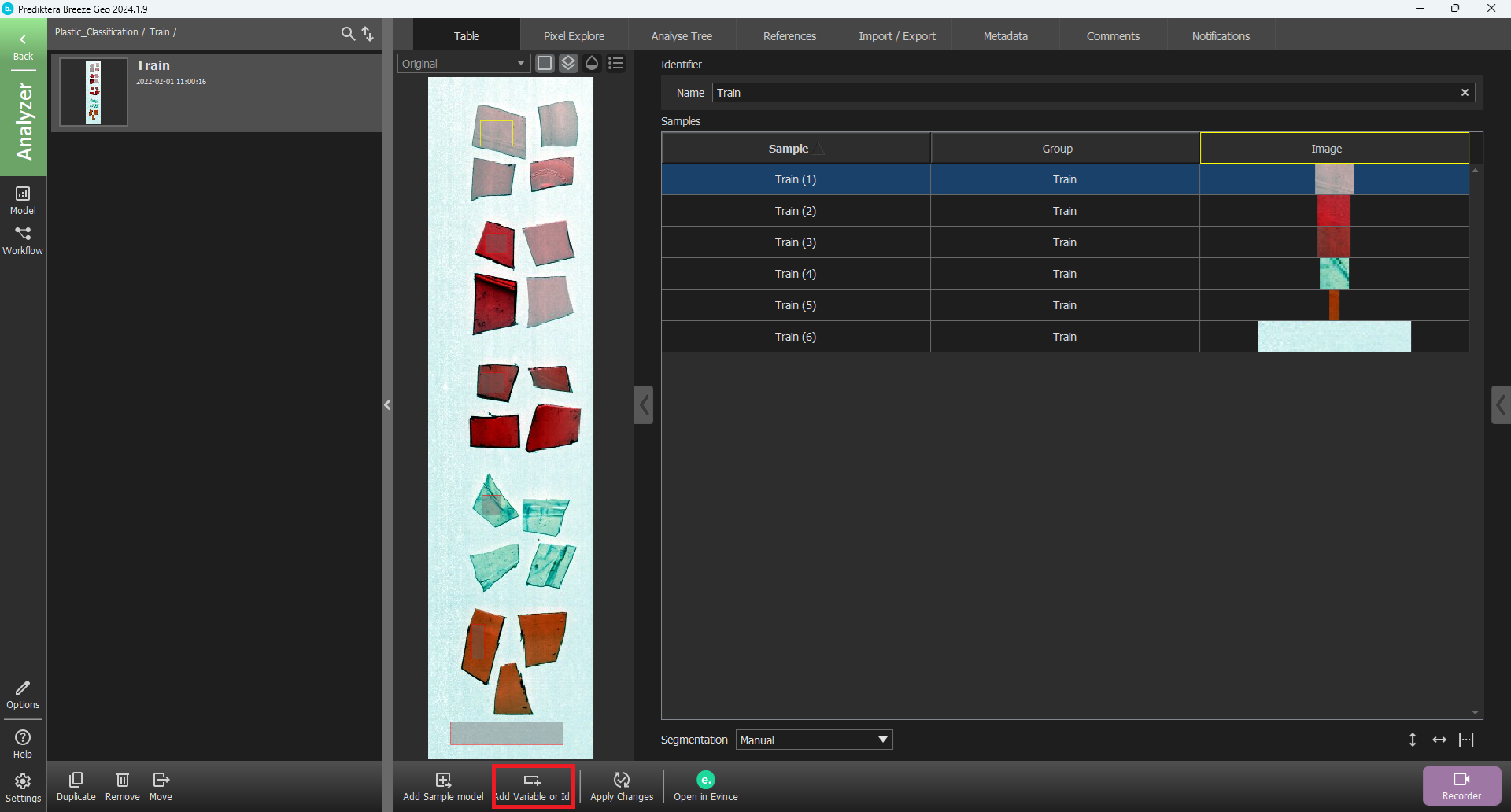

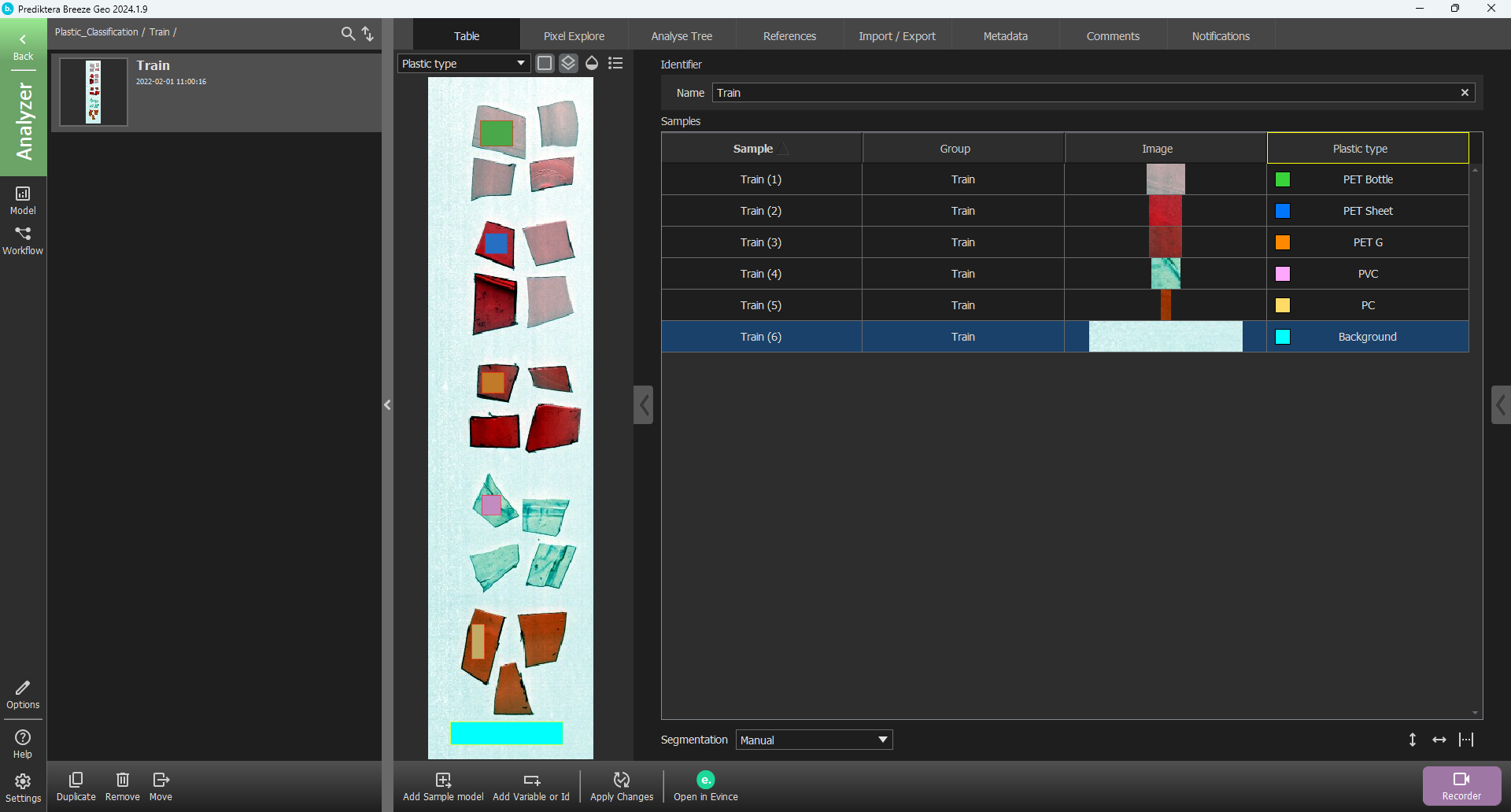

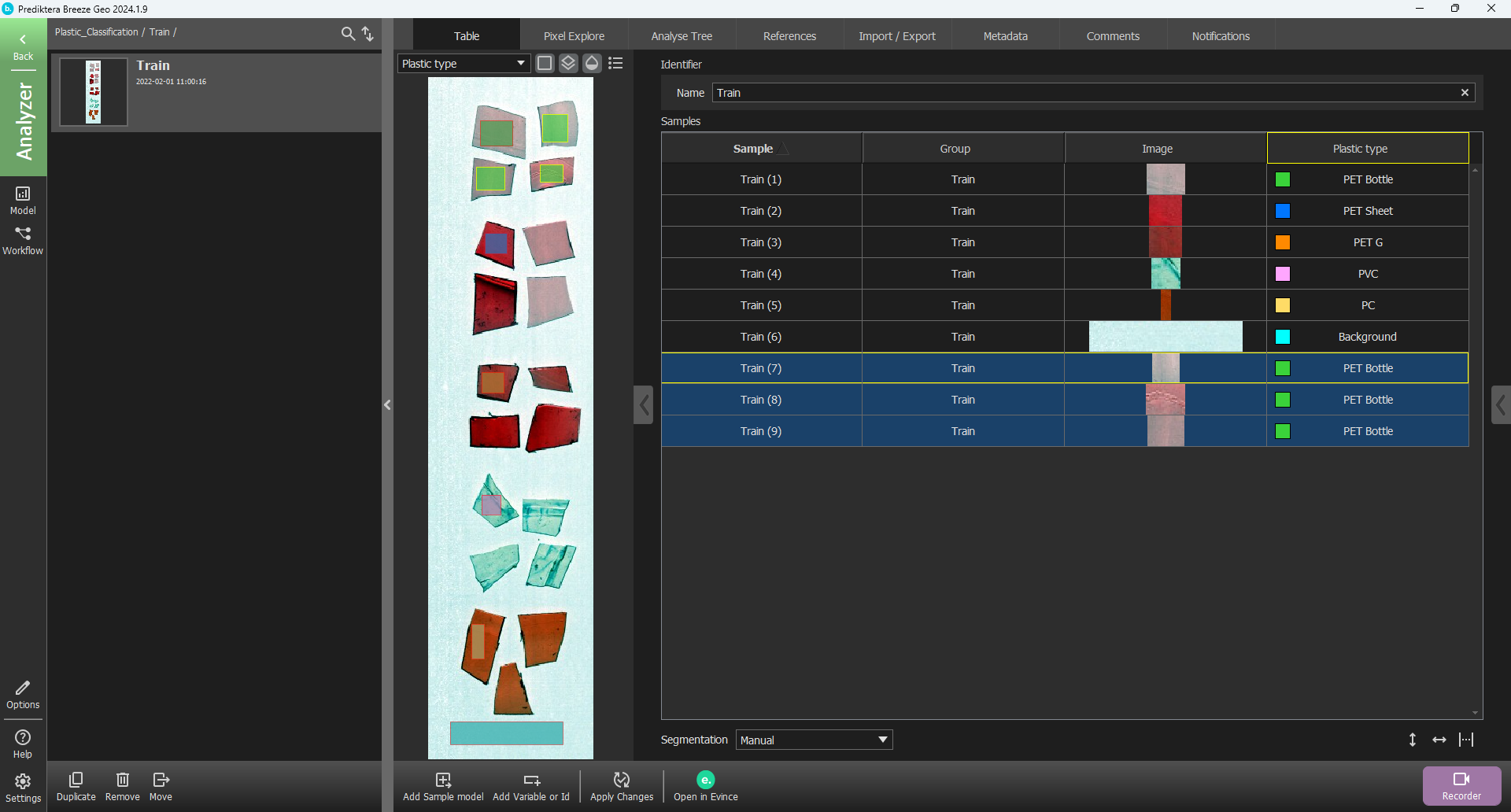

You will now be back in the Table view, where you can see your six manually selected segments.

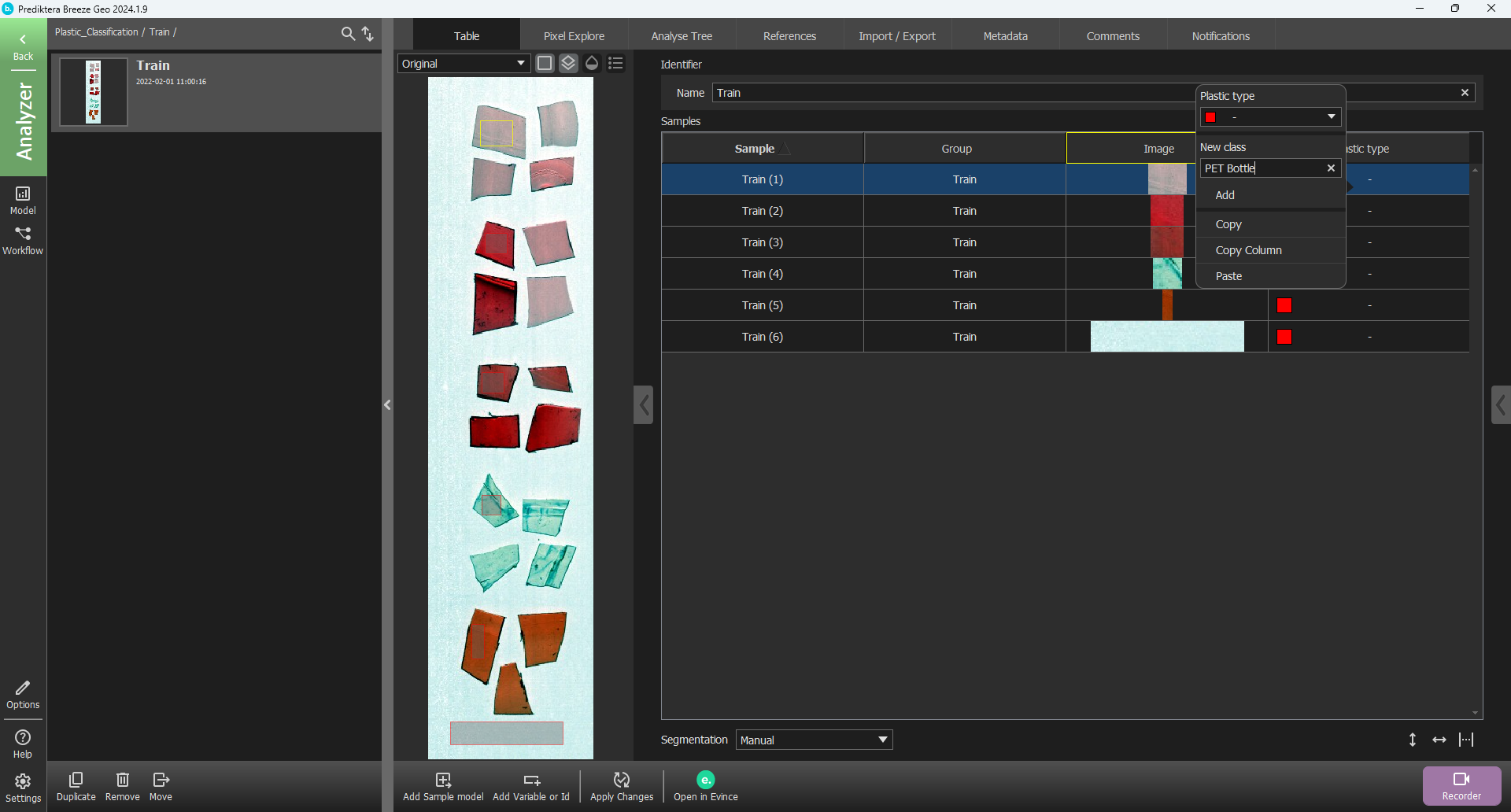

Now we will enter known class information for the training samples. First press “Add Variable or Id”.

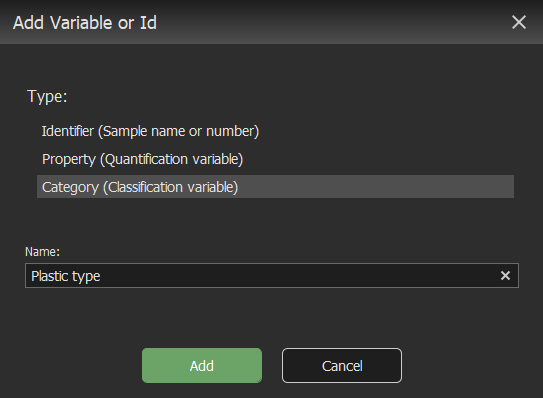

Select “Category (Classification variable)”

Change the name to “Plastic type” and press “Add”.

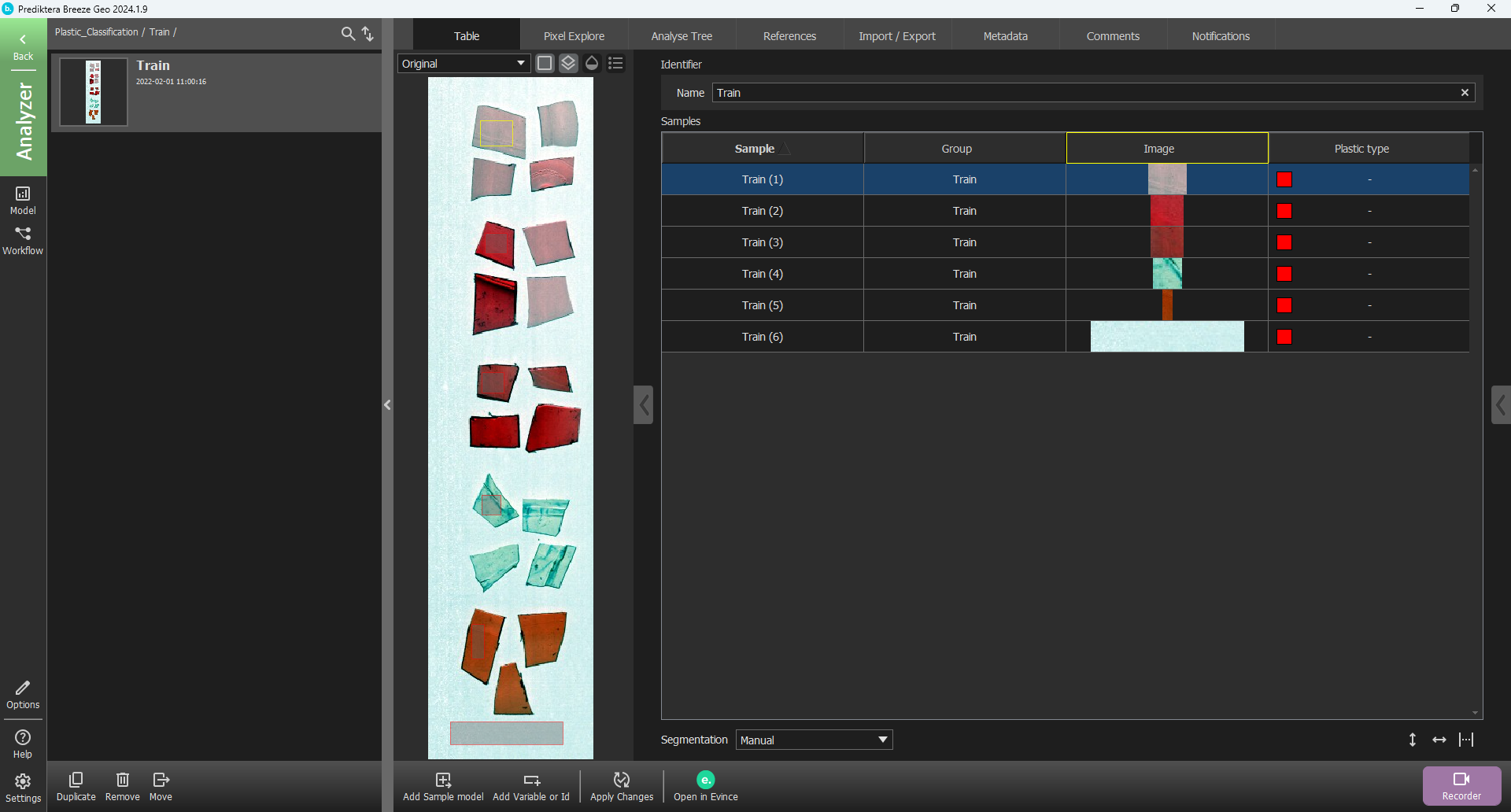

A new column in the table view has appeared.

Right-click on a row in the new “Plastic type” column, write “PET Bottle” under “New class” and press Enter on your keyboard or press “Add”.

You will now see that the class of the first manual sample is PET Bottle. Do the same procedure and add the classes, “PET Sheet”, “PET G”, “PVC”, “PC” and “Background”. (Please note that the colors used for each class might be slightly different in the version of Breeze you are using).

Create a Machine learning model and then retrain it with more data

You will now create a Machine learning (ML) model based on the training set. But before we go into the model wizard to create the model we need to apply a second layer of segmentation to get more training data for the ML model. In this tutorial, we will use the “Grid and inset” segmentation.

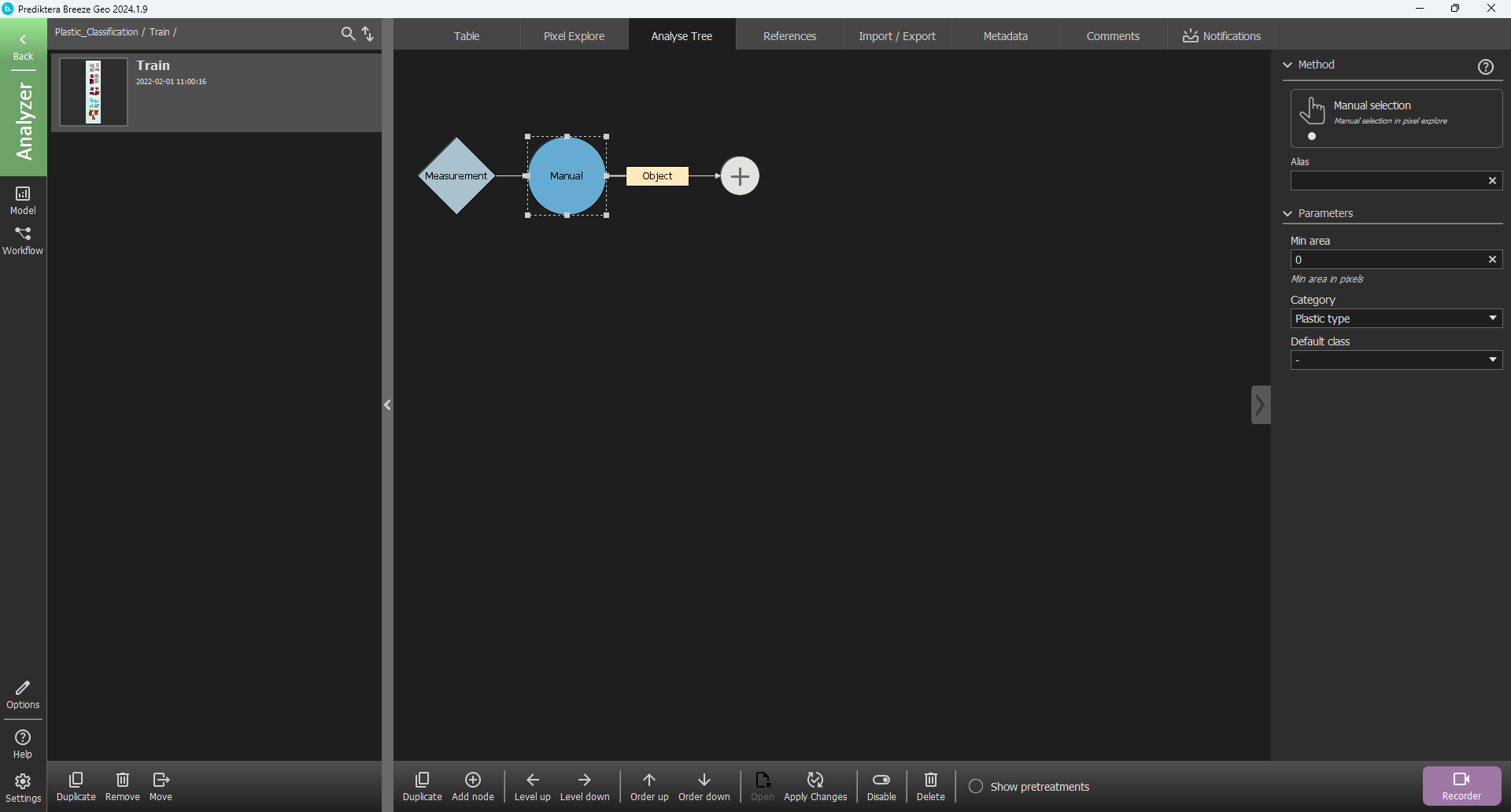

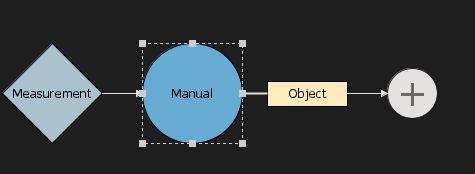

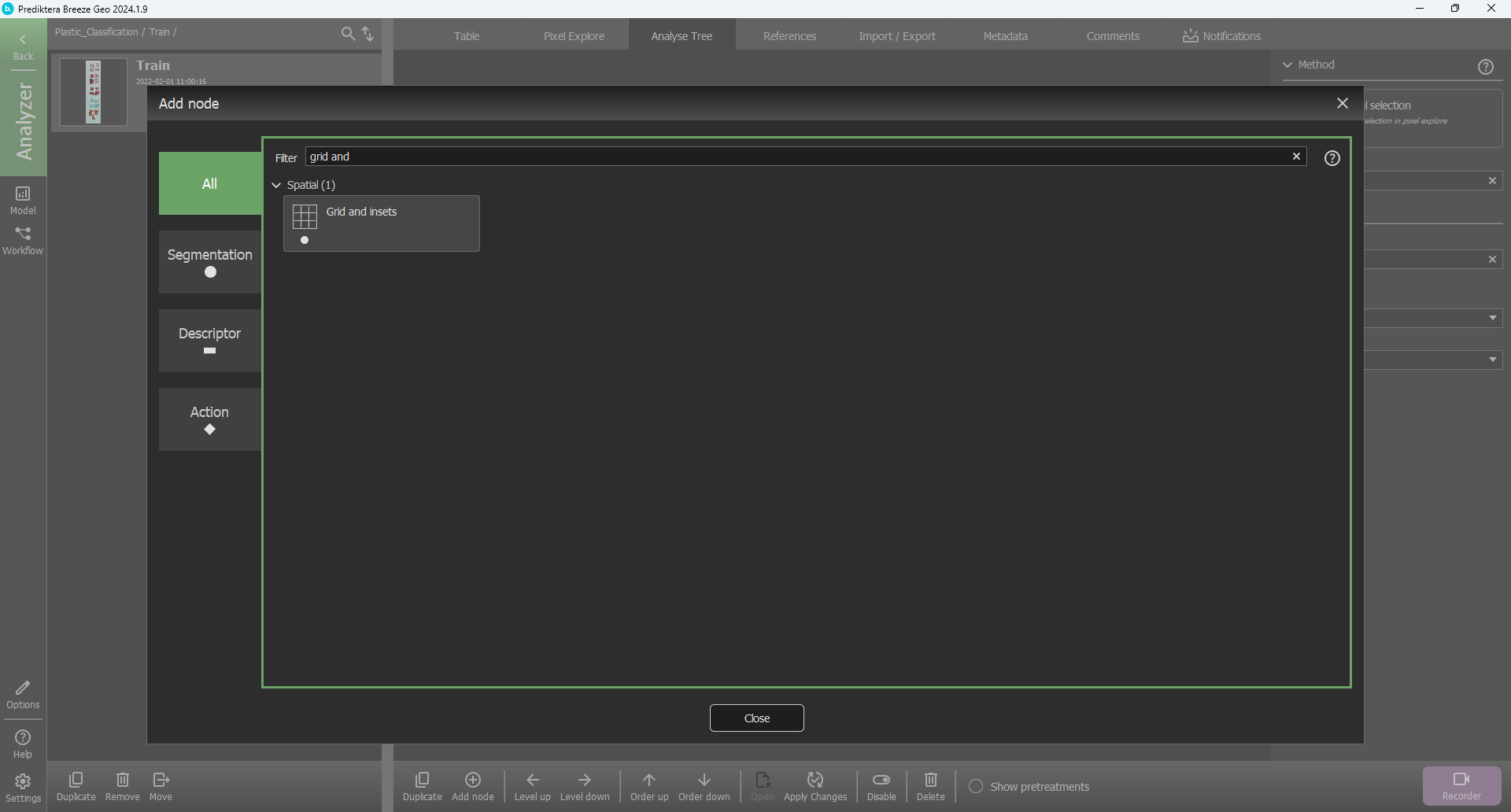

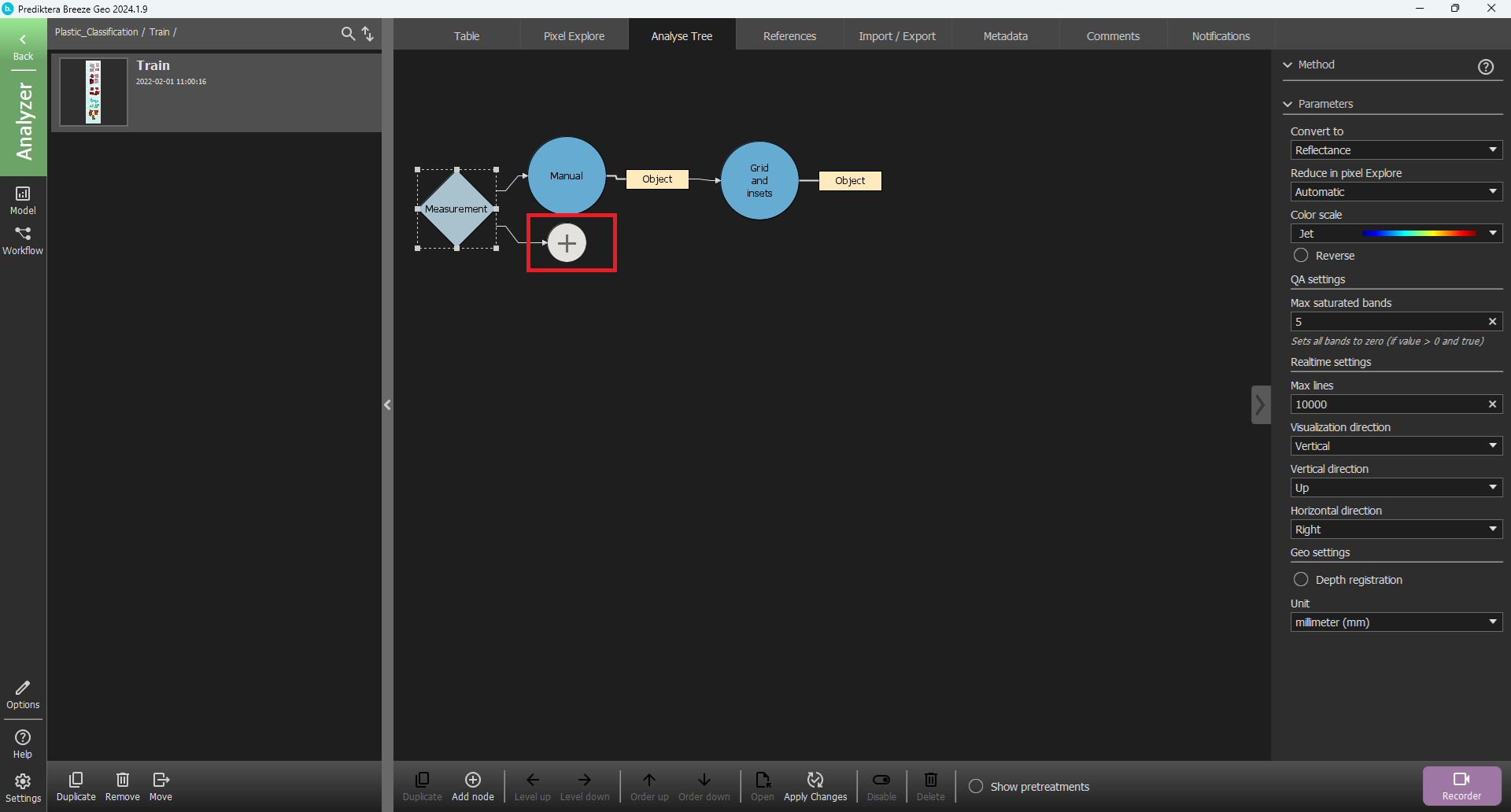

Go to the “Analyse Tree” tab.

Click on “Manual” and you will see a plus sign appear.

Click on the plus sign.

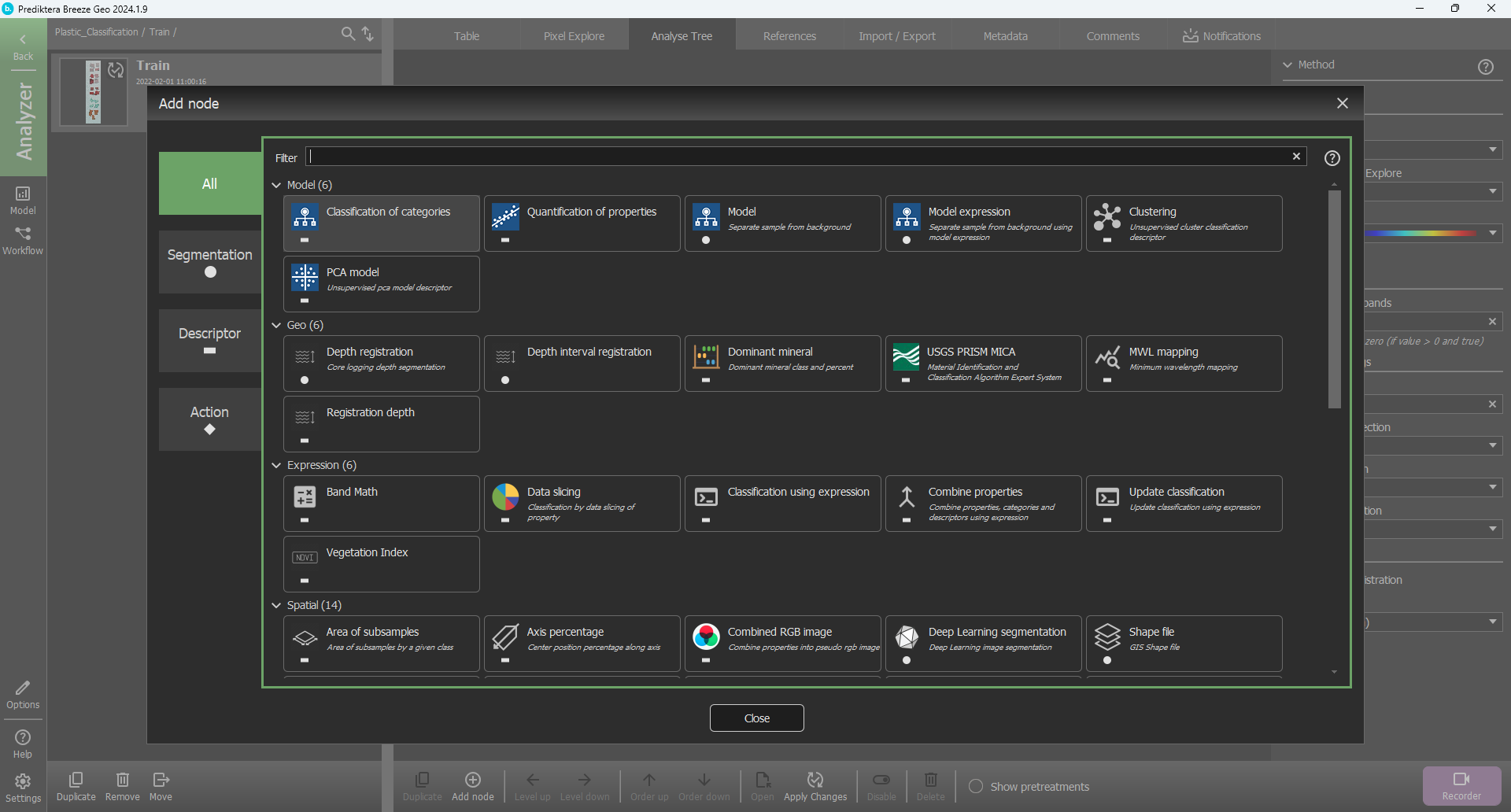

Select “Segmentation”, press the drop-down menu beside “Method” and locate “Grid and inset” and press “OK”

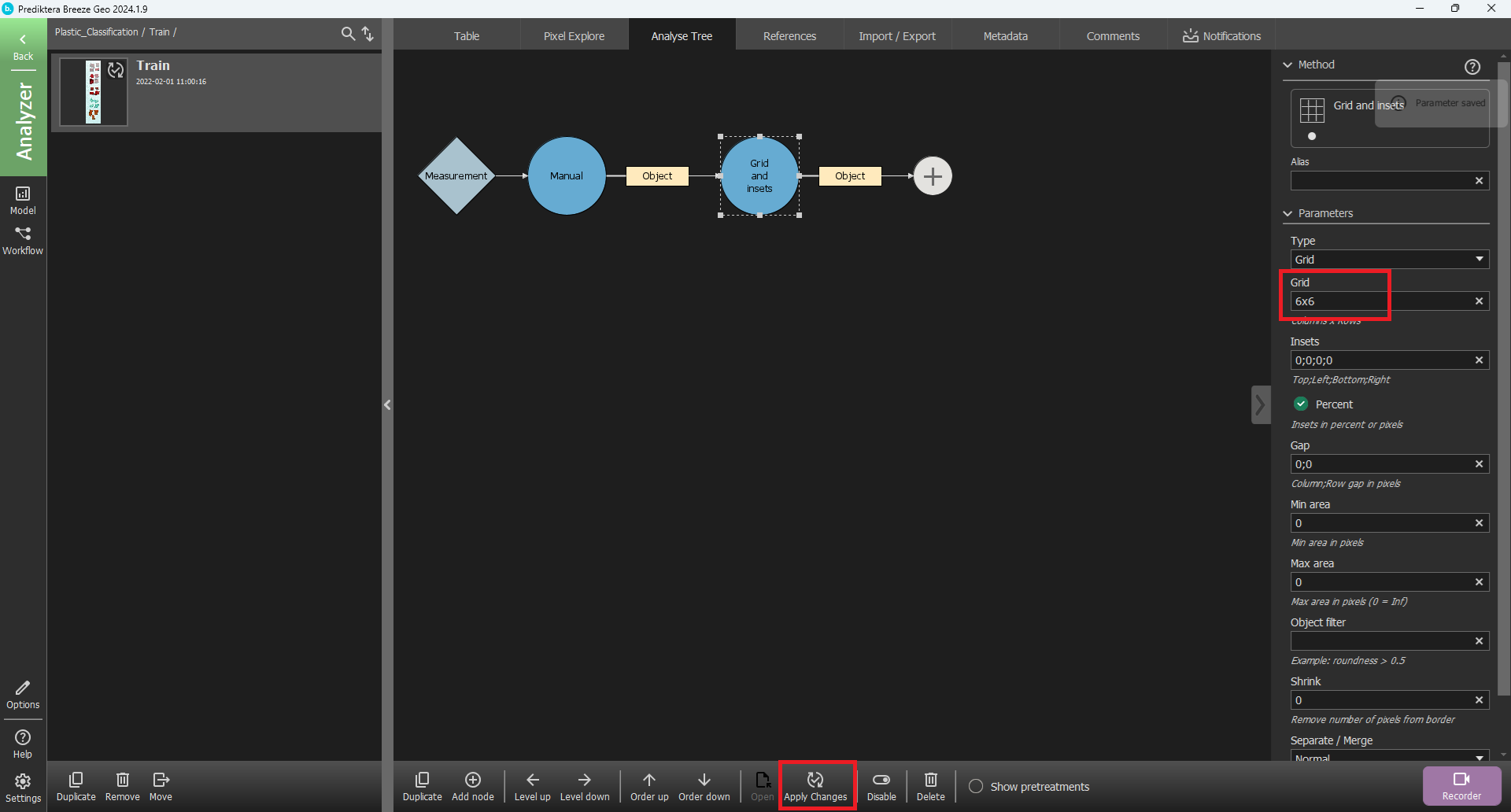

In the menu to the right, change the “3x3” under the parameter “Grid” to “6x6”

See Grid and insets for all options.

Press “Apply Changes”

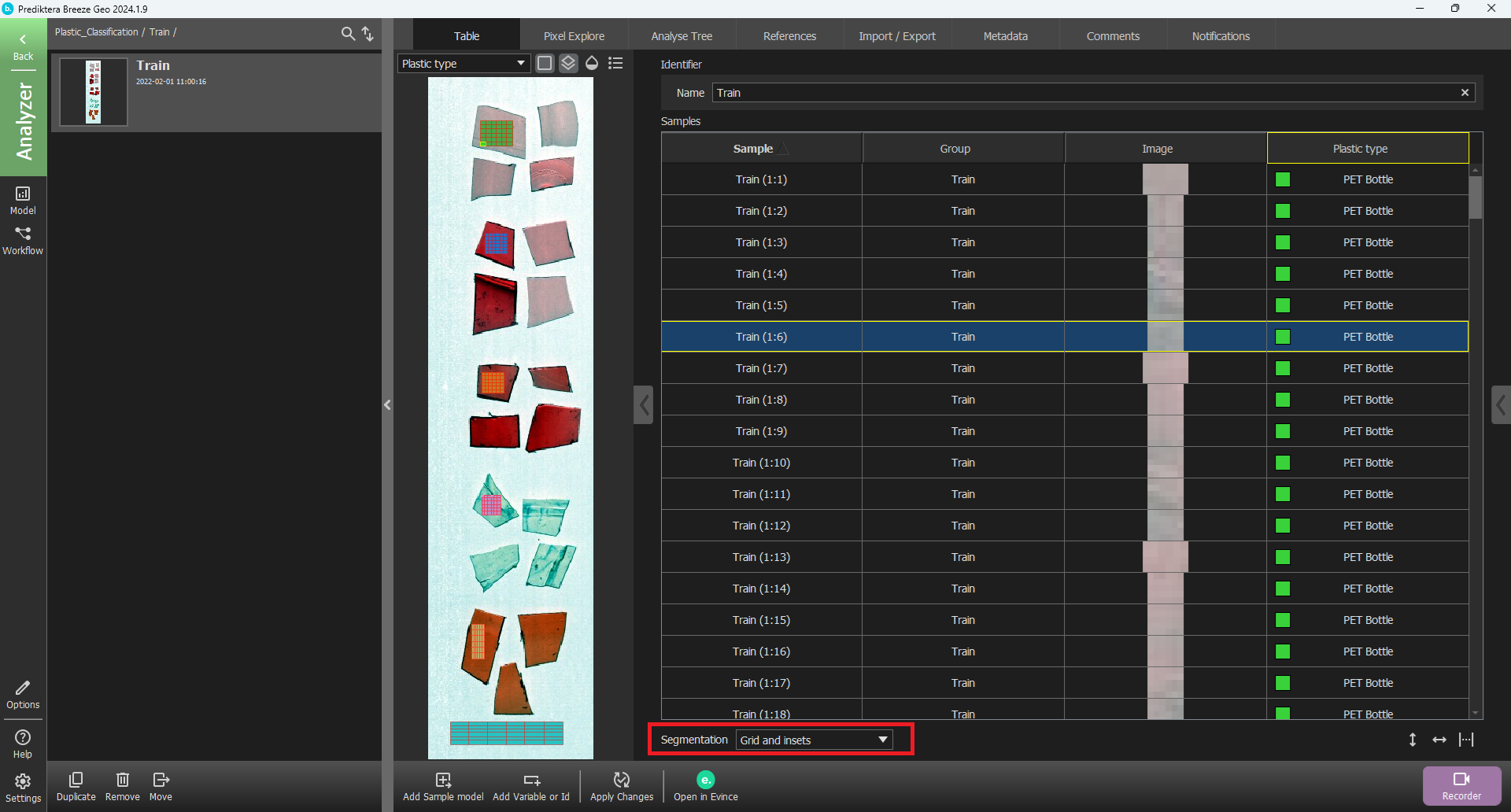

If you change the Segmentation in the drop-down menu under the Table to “Grid and Inset” you can see the 6x6 grid added under each manually selected area.
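As a rough illustration of what a 6x6 grid does to one rectangular selection, the sketch below splits a rectangle into 36 cells. It only shows the geometry and is not Breeze's own algorithm. The function name and the coordinates are hypothetical, and the inset part of the method is not modeled here.

```python
def grid_cells(left, top, width, height, rows=6, cols=6):
    """Split a rectangle into rows x cols cells.

    Each cell is (x0, y0, x1, y1) in pixel coordinates, with x1 and y1 exclusive.
    """
    cells = []
    for r in range(rows):
        y0 = top + r * height // rows
        y1 = top + (r + 1) * height // rows
        for c in range(cols):
            x0 = left + c * width // cols
            x1 = left + (c + 1) * width // cols
            cells.append((x0, y0, x1, y1))
    return cells


# A hypothetical 30 x 24 pixel selection with its top-left corner at (10, 20).
cells = grid_cells(left=10, top=20, width=30, height=24)
print(len(cells))      # 36
print(cells[0])        # (10, 20, 15, 24)
print(6 * len(cells))  # 216 grid segments for six manual selections
```

With six manual selections this gives 6 x 36 = 216 segments, each contributing one average spectrum to the training data.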

Press the “Model” button at the bottom right corner.

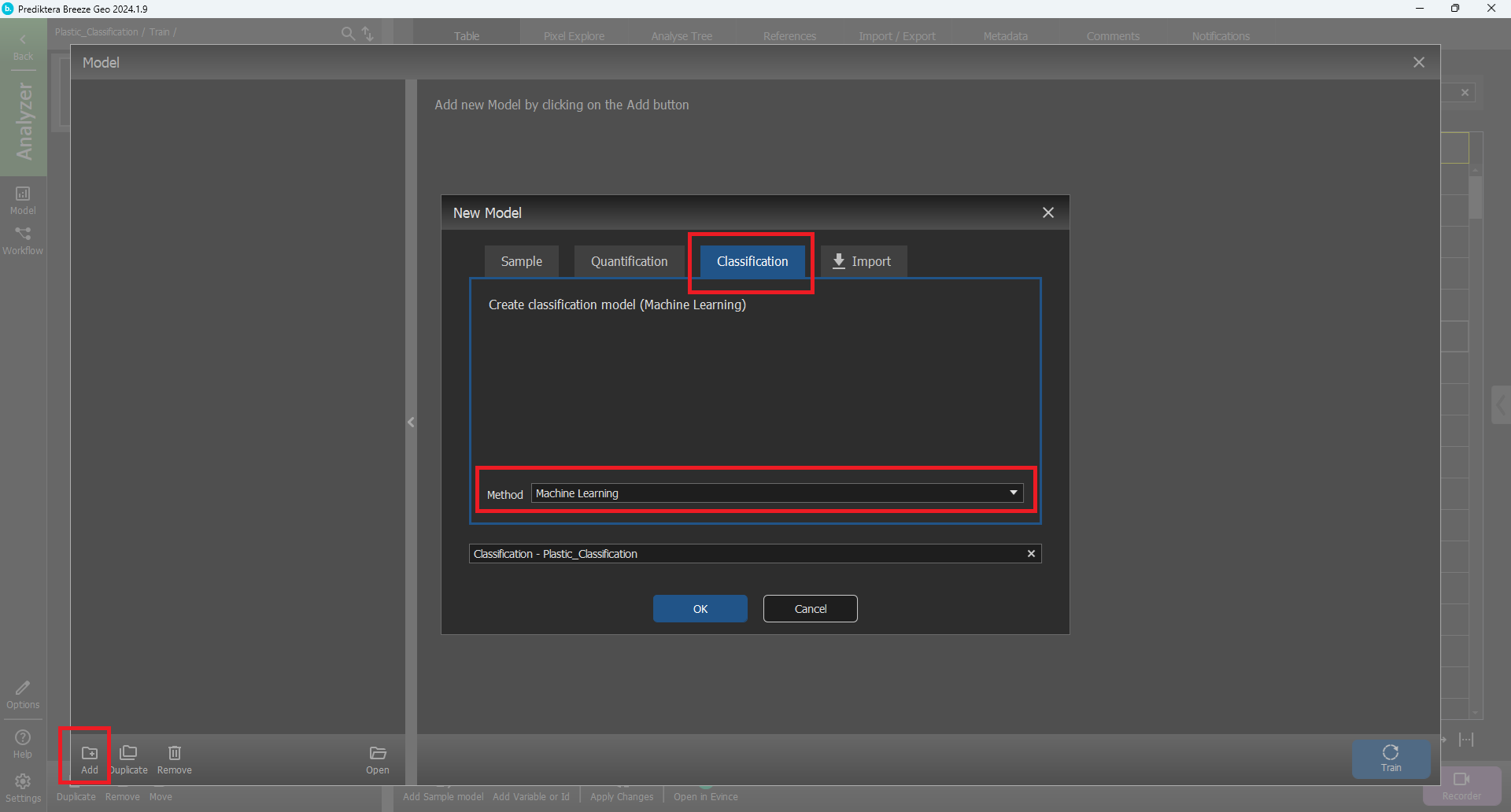

In “Model” press the “Add” button at the bottom left corner.

Select “Classification”, change the drop-down menu from “PLS-DA” to “Machine Learning” and press “OK”. (You can change the name of the model if you want.)

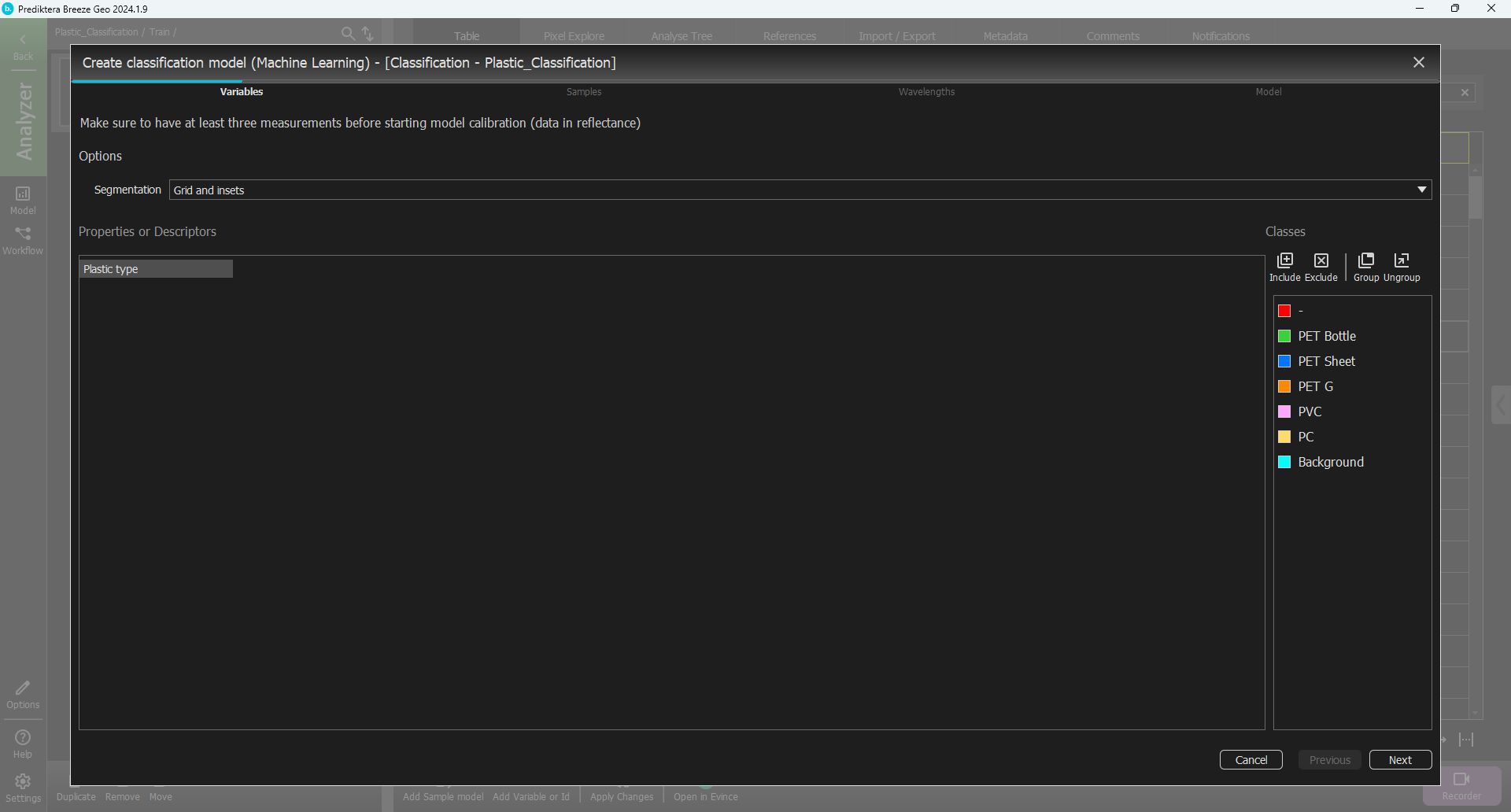

In the first step of the Classification wizard, you can see that the “Grid and inset” segmentation is selected, together with the “Plastic type” descriptor that you will use to build the model. Press “Next”.

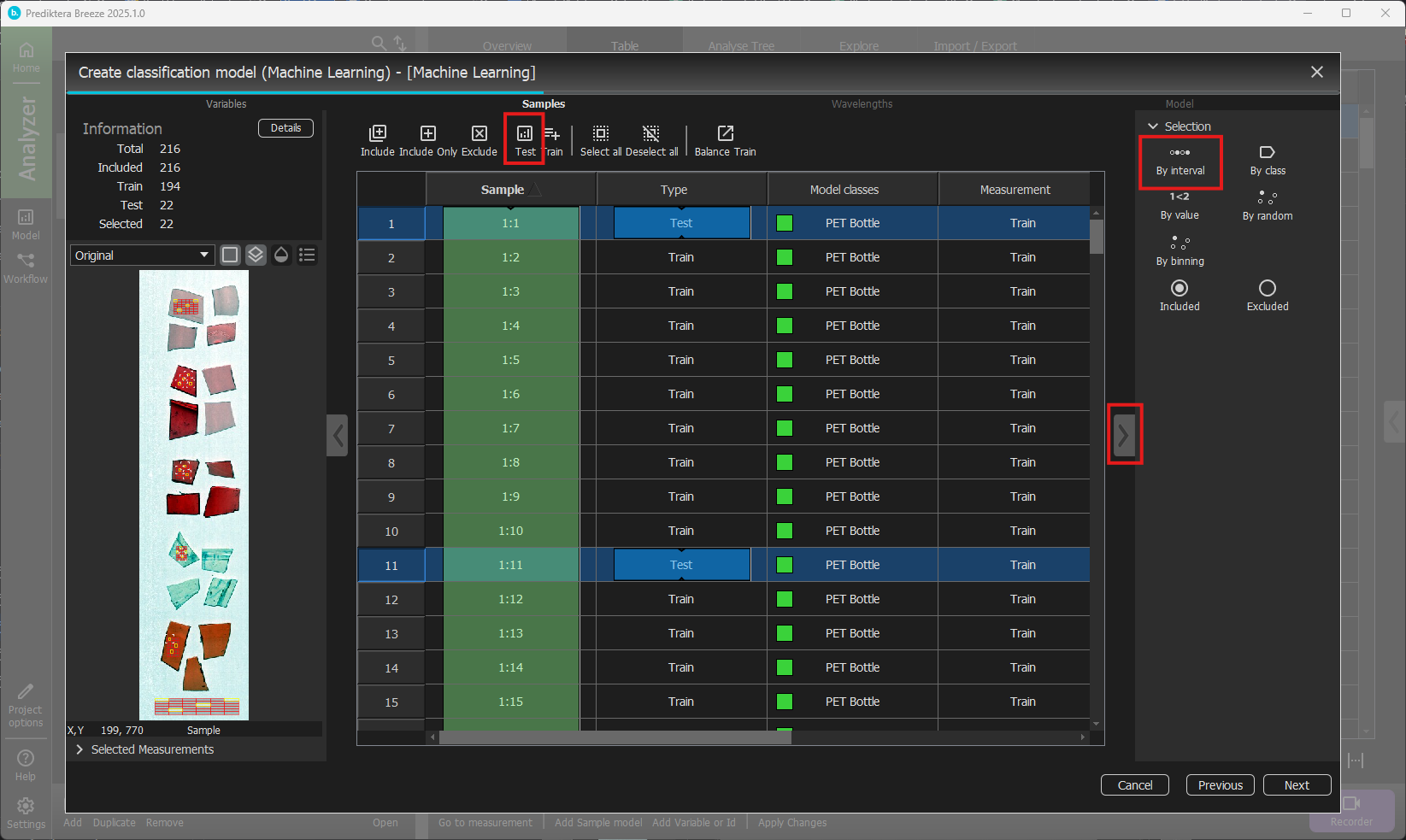

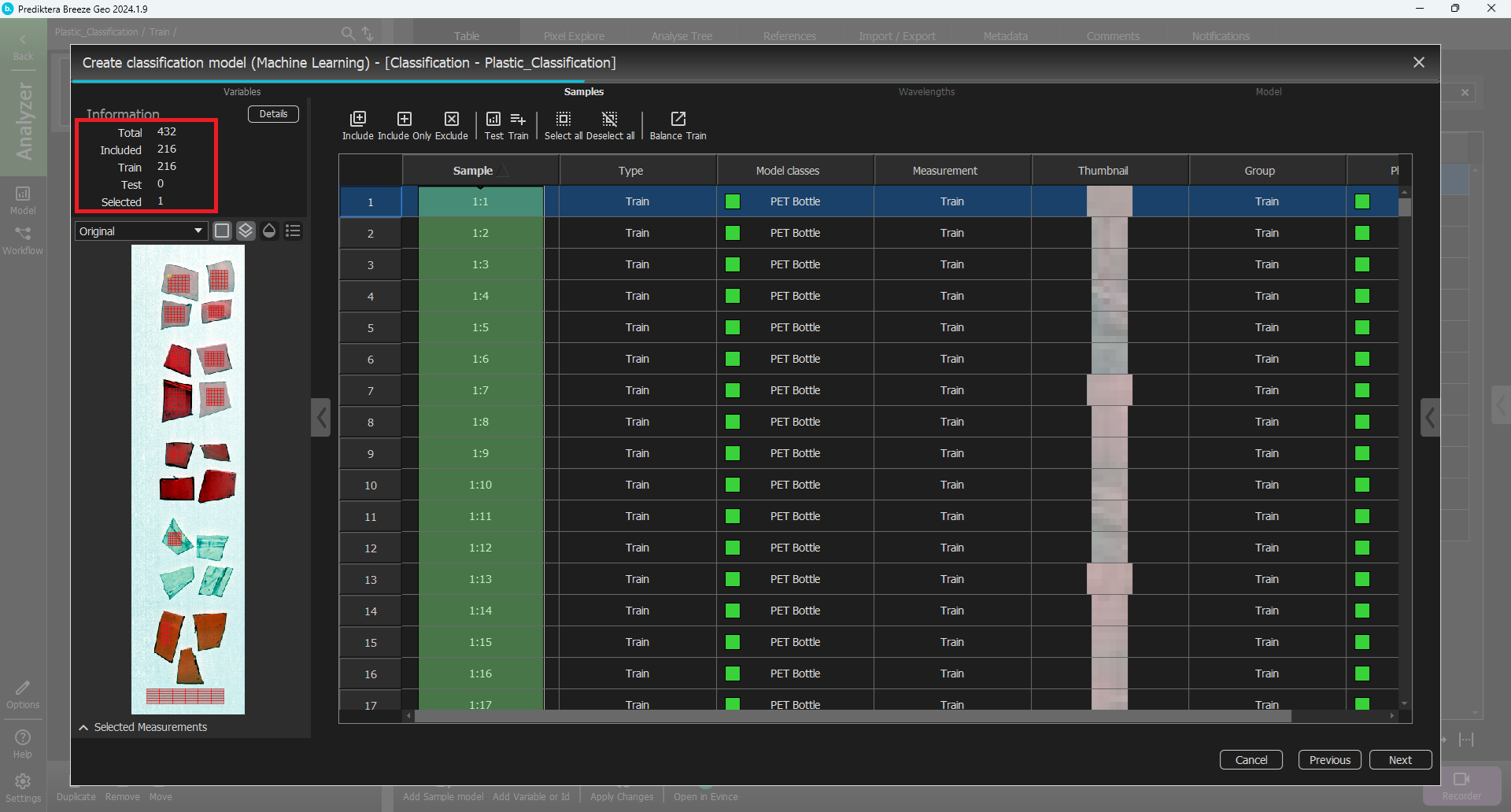

In the next step of the wizard, you can select the samples that you want to include in the model. By default, the measurements from the “Train” group are included, since class information has been entered for them. Each sample used for the training corresponds to one of the segments in the 6x6 grid on the plastic pieces, as you can see in the image on the left.

Expand the panel to the right and select “By interval”. The start position and interval can be left at their defaults of 1 and 10. With the interval selection active, press “Test” above the table to change the Type of the selected samples to Test.
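The effect of an interval selection can be sketched as follows. This assumes that the start position is 1-based and that every tenth sample from the start position is selected; that is a reading of the setting names, not documented Breeze behavior. The function name is hypothetical.

```python
def split_by_interval(n_samples, start=1, interval=10):
    """Return (train, test) lists of 1-based sample positions."""
    # Assumption: positions start, start + interval, ... become test samples.
    test = list(range(start, n_samples + 1, interval))
    test_positions = set(test)
    train = [i for i in range(1, n_samples + 1) if i not in test_positions]
    return train, test


# 216 grid segments, as in this tutorial (6 selections x 36 grid cells).
train, test = split_by_interval(216)
print(len(train), len(test))  # 194 22
print(test[:3])               # [1, 11, 21]
```

Under these assumptions, roughly one sample in ten is held back, spread evenly over all classes because the samples are taken at regular positions in the table.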

Press “Next”.

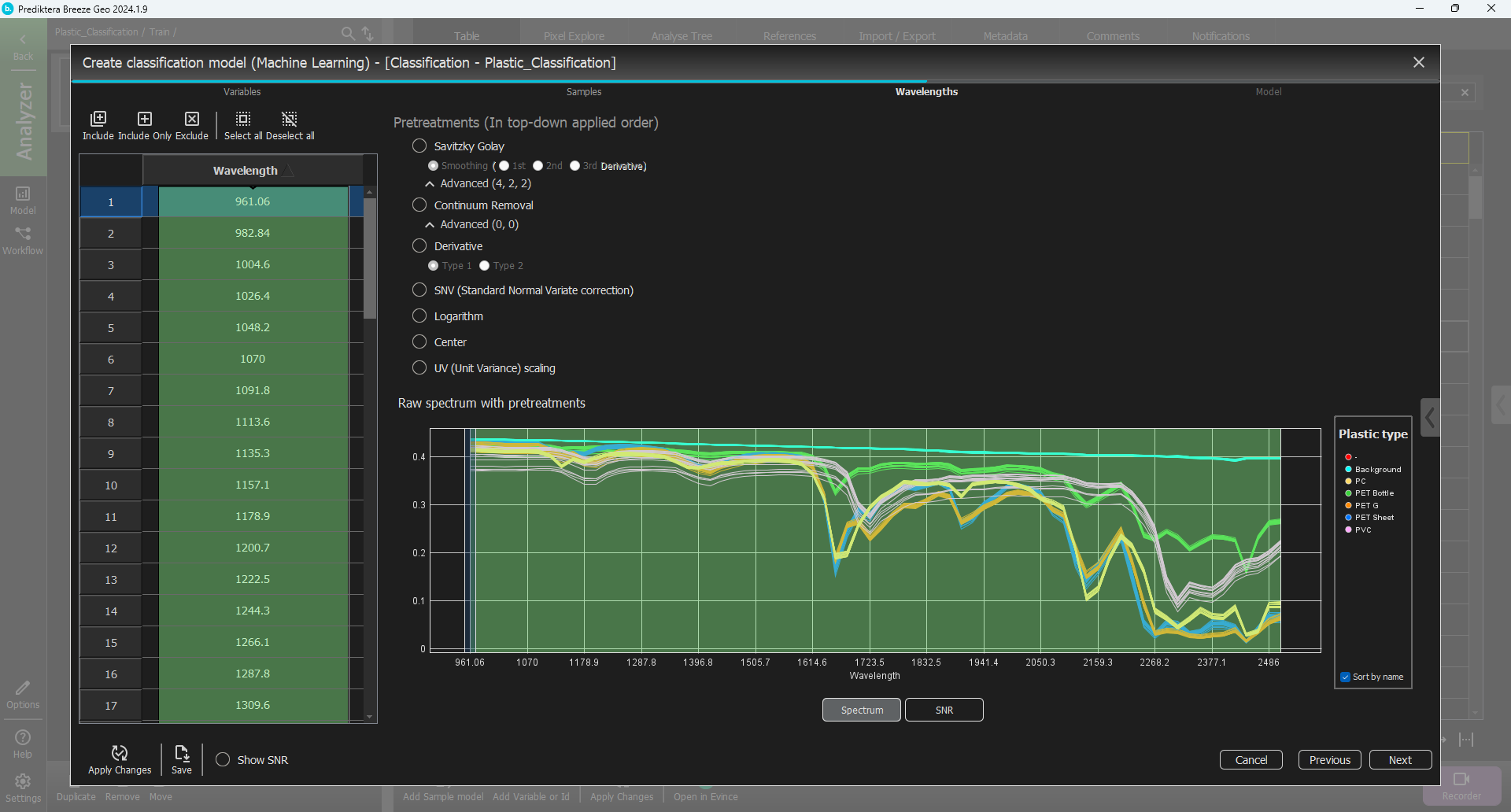

By default, all wavelength bands are included and no pretreatment is added. The graph on the right shows the average spectrum for each sample (i.e. each grid segment). Above this graph, there is an option to select different pretreatments of the spectral data. All default settings here are OK.

Press “Next”.

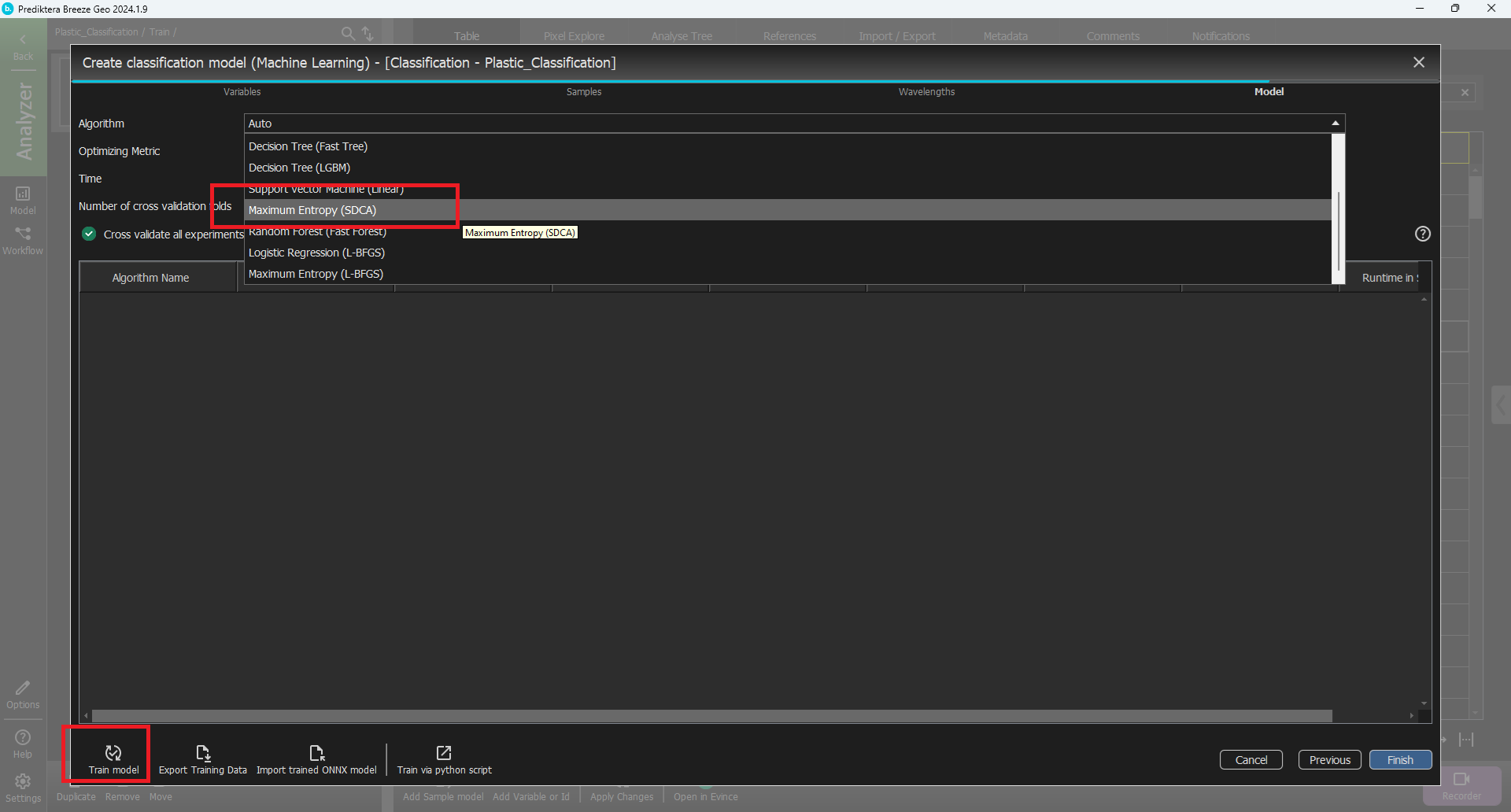

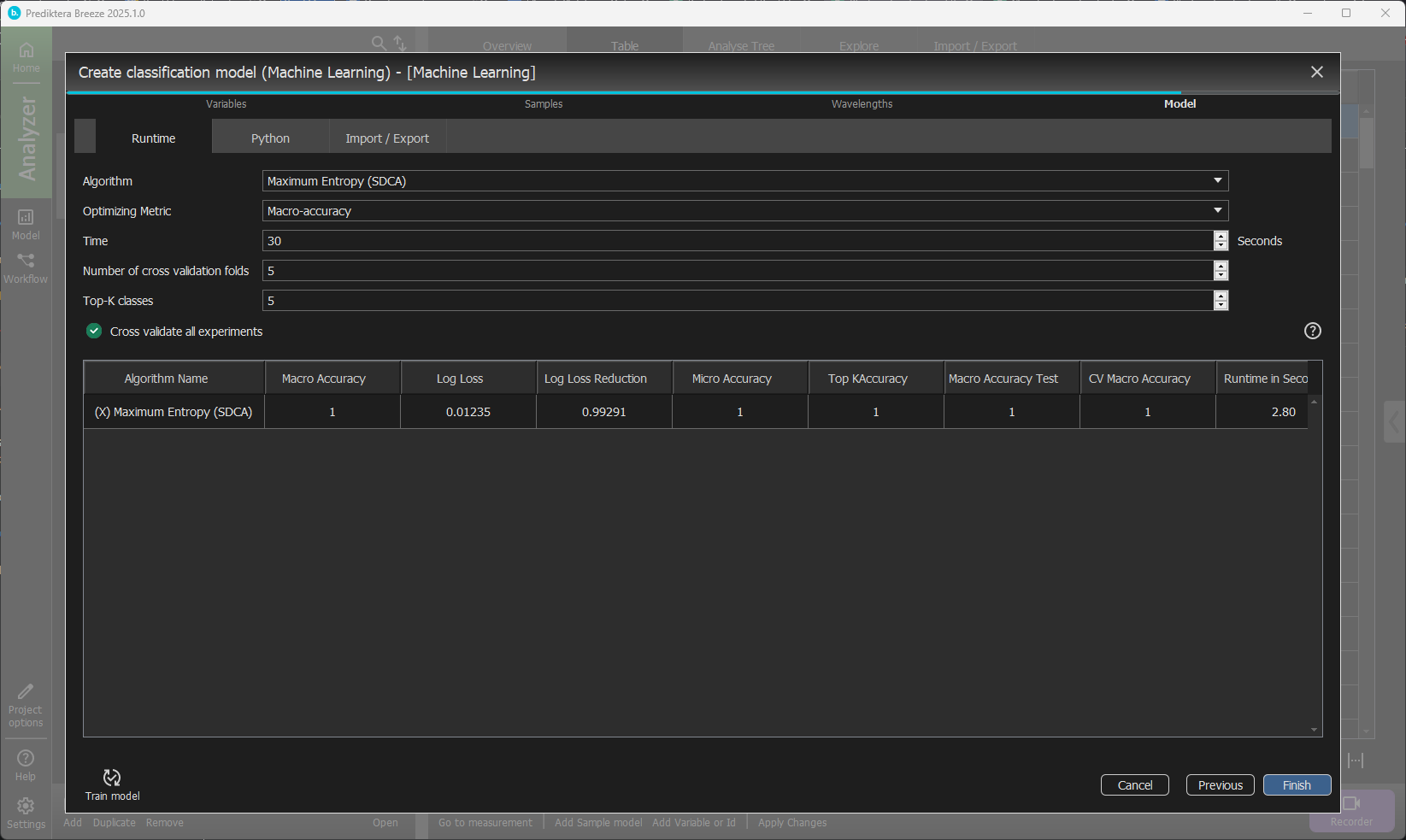

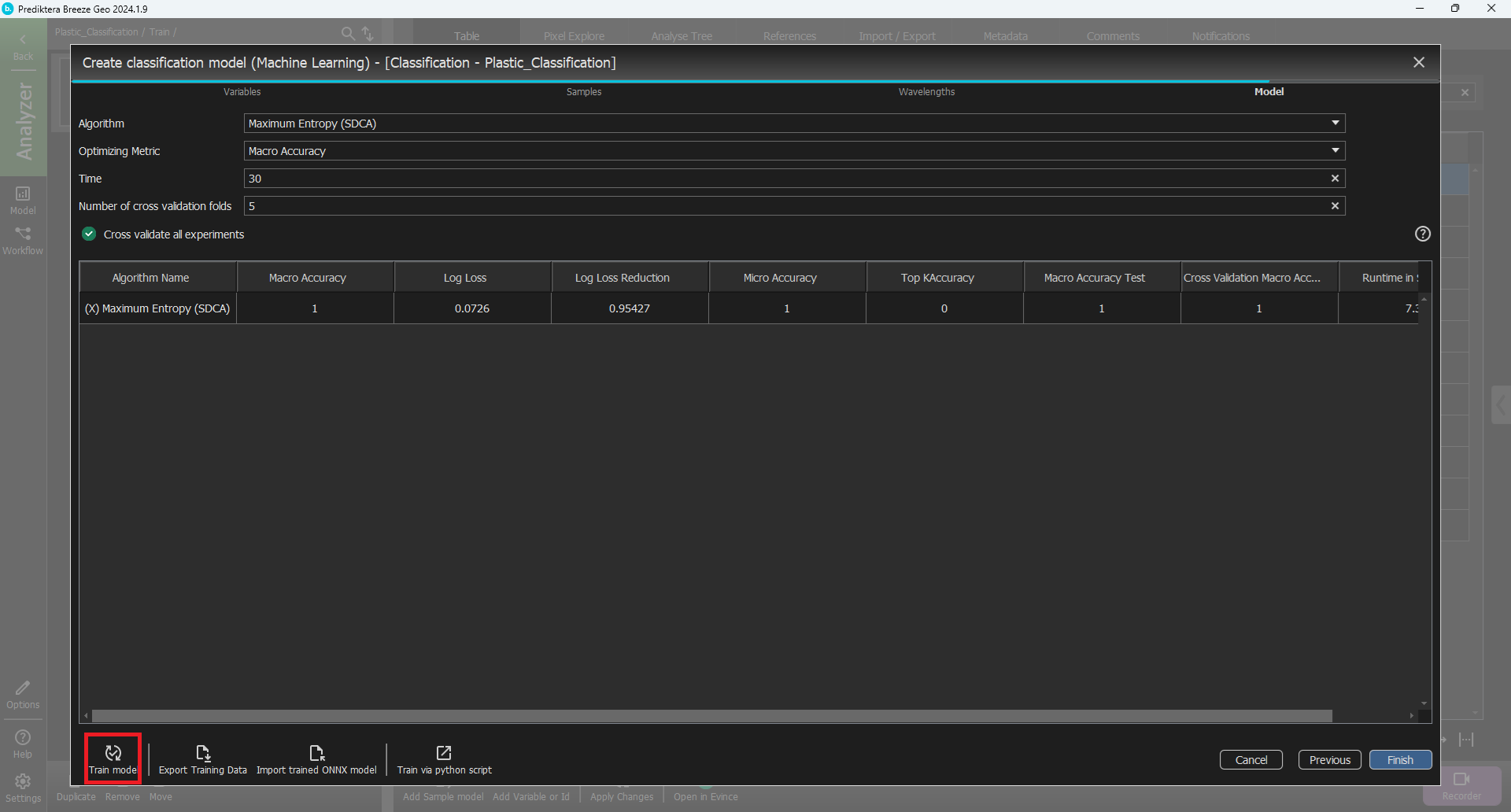

In the last step, we will train the model. For this tutorial, we will use Maximum Entropy as the ML algorithm. Open the “Algorithm” drop-down menu, which is set to “Auto” by default, and scroll down until you find “Maximum Entropy (SDCA)”. Use a training time of 30 seconds.
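A maximum entropy classifier is another name for multinomial logistic regression (softmax regression). The sketch below trains such a model on made-up two-band "spectra" to show the principle. It uses plain gradient descent rather than SDCA and is not what Breeze runs internally. All names, class labels and values are hypothetical.

```python
import math


def softmax(logits):
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def class_logits(weights, x):
    xb = list(x) + [1.0]  # constant 1.0 acts as the bias input
    return [sum(w * v for w, v in zip(ws, xb)) for ws in weights]


def train_softmax(samples, labels, n_classes, learning_rate=0.5, epochs=3000):
    n_inputs = len(samples[0]) + 1
    weights = [[0.0] * n_inputs for _ in range(n_classes)]
    for _ in range(epochs):
        grads = [[0.0] * n_inputs for _ in range(n_classes)]
        for x, y in zip(samples, labels):
            xb = list(x) + [1.0]
            probs = softmax(class_logits(weights, x))
            for k in range(n_classes):
                error = probs[k] - (1.0 if k == y else 0.0)
                for j, v in enumerate(xb):
                    grads[k][j] += error * v
        # Full-batch gradient descent step on the mean cross-entropy loss.
        for k in range(n_classes):
            for j in range(n_inputs):
                weights[k][j] -= learning_rate * grads[k][j] / len(samples)
    return weights


def predict(weights, x):
    logits = class_logits(weights, x)
    return max(range(len(logits)), key=logits.__getitem__)


class_names = ["Background", "PVC", "PC"]
samples = [(0.0, 0.0), (0.1, 0.1), (0.1, 0.0),   # Background
           (1.0, 0.0), (0.9, 0.1), (1.0, 0.1),   # PVC
           (0.0, 1.0), (0.1, 0.9), (0.1, 1.0)]   # PC
labels = [0, 0, 0, 1, 1, 1, 2, 2, 2]

weights = train_softmax(samples, labels, n_classes=3)
predicted = [predict(weights, x) for x in samples]
accuracy = sum(p == y for p, y in zip(predicted, labels)) / len(labels)
print(accuracy)
```

In Breeze the inputs would be the average spectra of the grid segments (72 bands each) rather than two numbers, but the model has the same form: one weight vector per class and a softmax over the class scores.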

Press “Train”

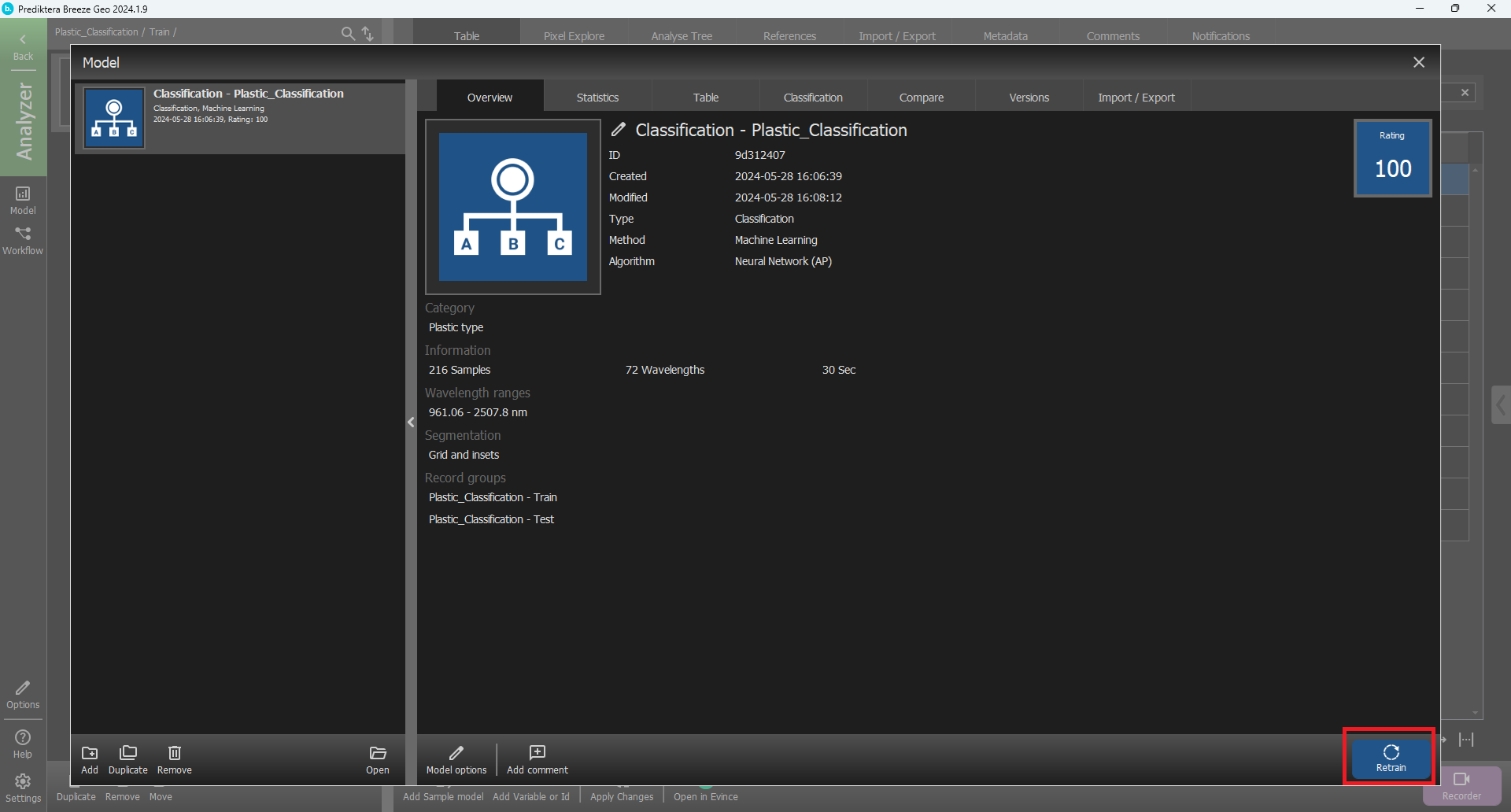

You now have a trained model.

Press “Finish”

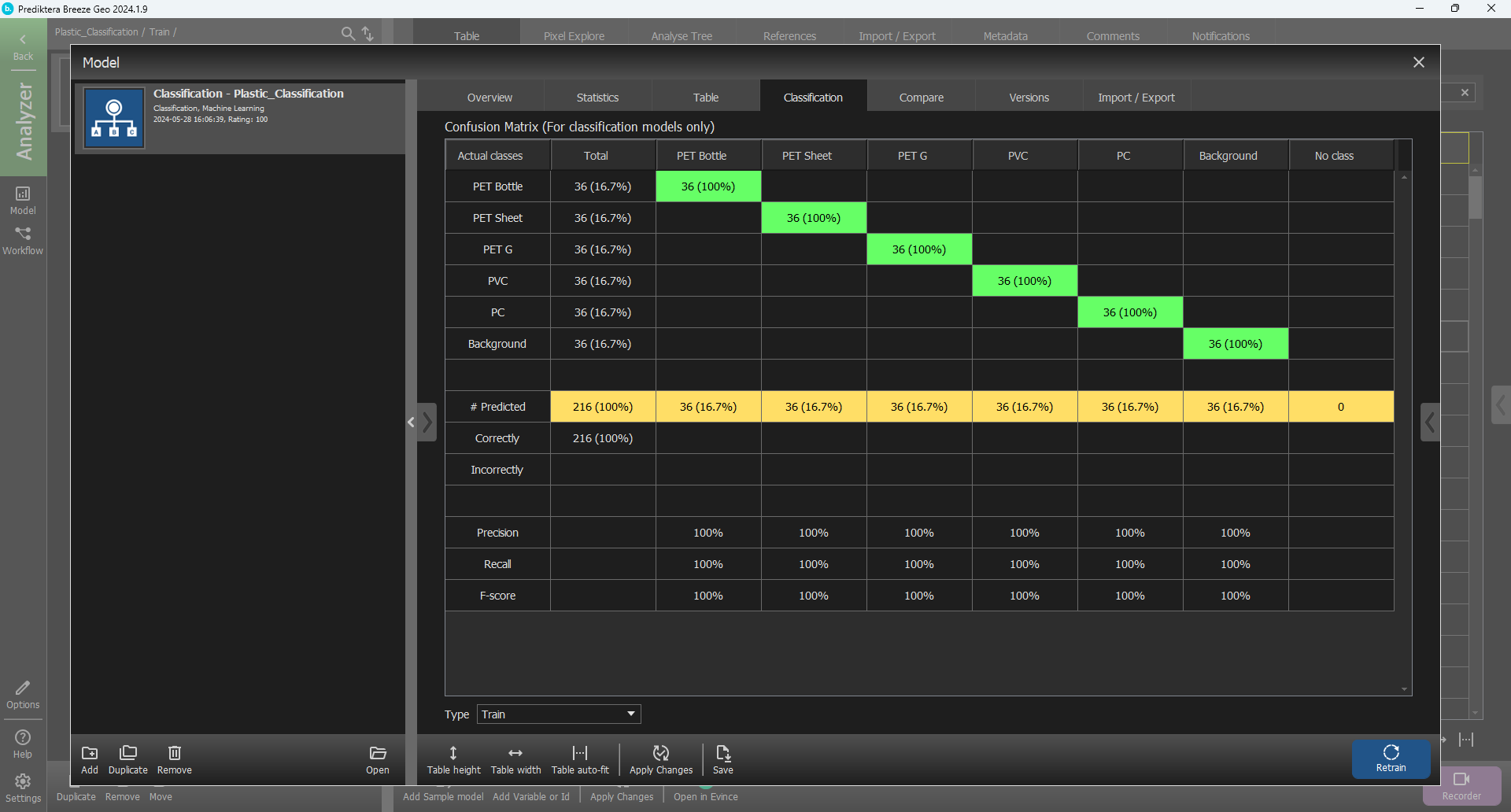

Go to the “Classification” tab to see how well the trained model worked on the training set.

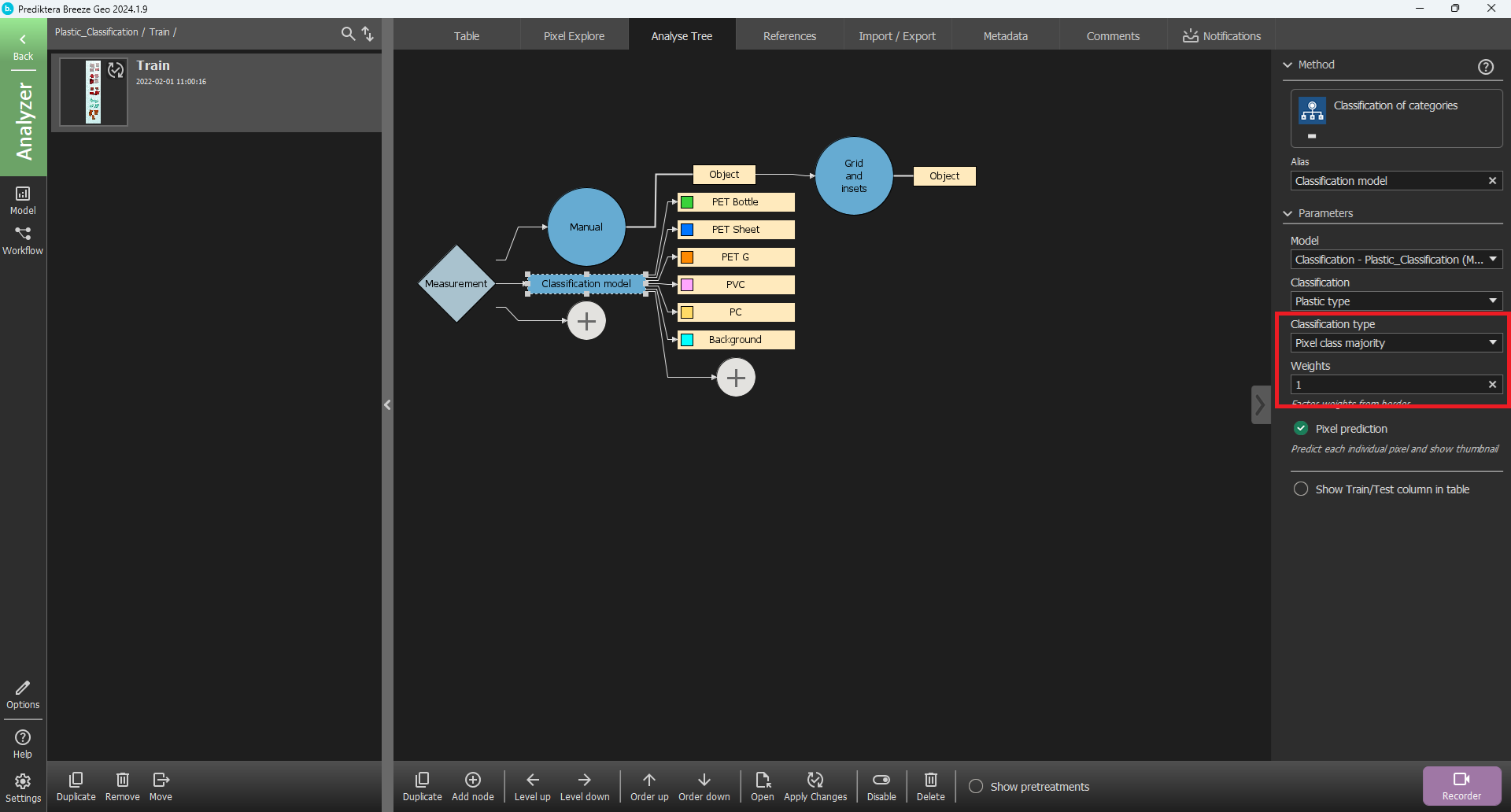

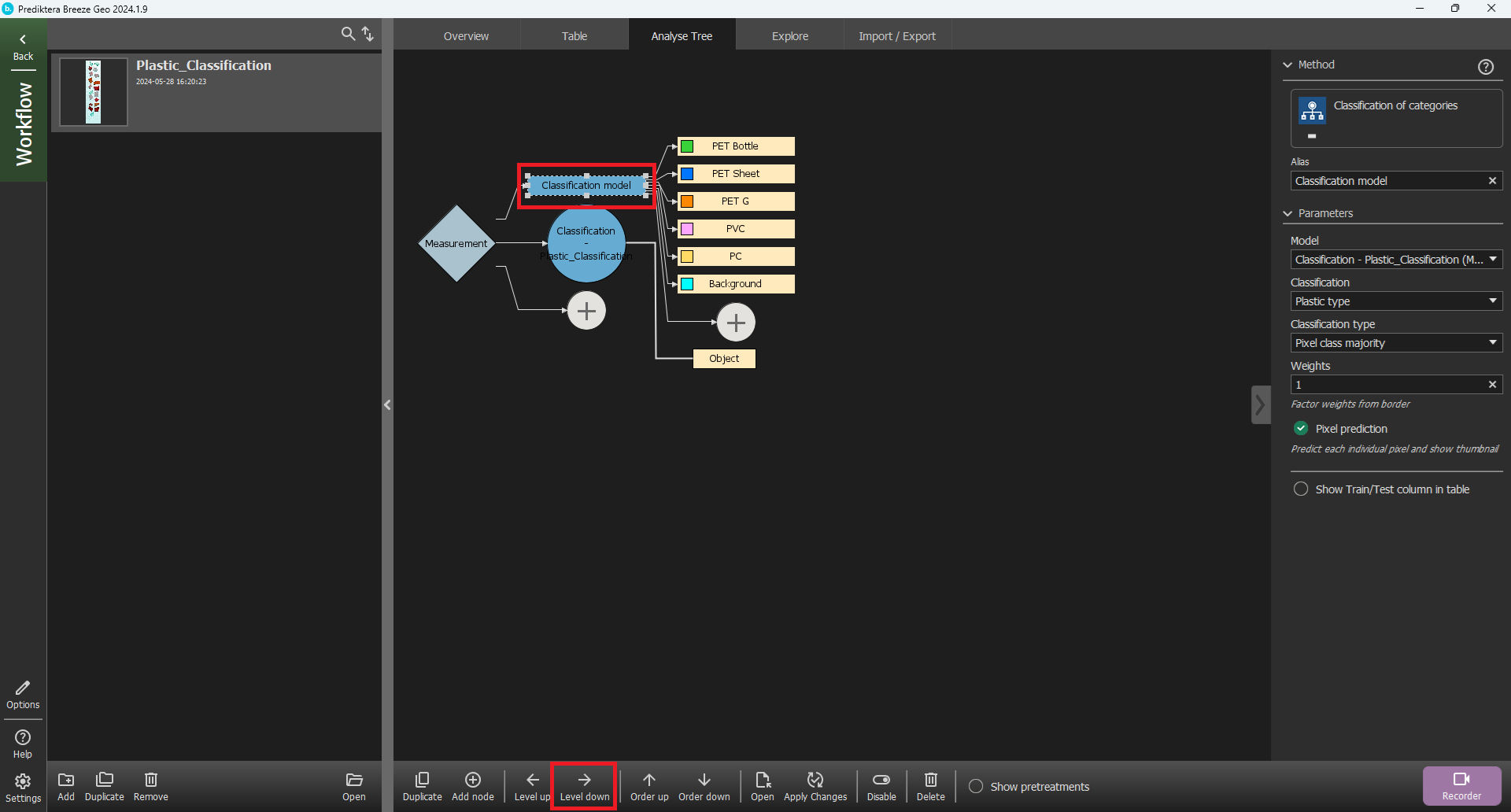

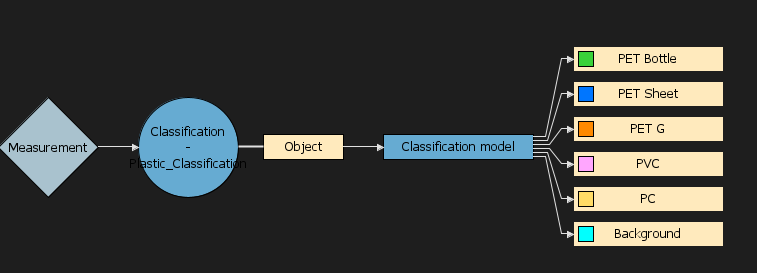

Close the model dialog, select Train and go to the “Analyse Tree”.

Click on “Measurement” and press the plus sign.

Select “Descriptor” and “Classification of categories” and write an “Alias” name like “Classification model”

The classification model will now be added to the Analysis tree. In the menu on the right side, set the “Classification Type” to “Pixel class majority”. The “Weights” should have value “1”.
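The name “Pixel class majority” suggests that each pixel in an object is classified and the most common class wins. A minimal sketch of that idea is shown below. The per-class weighting is an assumption about what the “Weights” setting does, not confirmed behavior; with every weight at 1 it reduces to a plain majority vote.

```python
from collections import Counter


def pixel_class_majority(pixel_classes, weights=None):
    """Return the class with the largest (weighted) number of pixel votes."""
    weights = weights or {}
    votes = Counter()
    for label in pixel_classes:
        # Assumption: each pixel vote counts with its class weight (default 1).
        votes[label] += weights.get(label, 1.0)
    return max(votes, key=votes.get)


# Hypothetical object with ten pixels, three of them misclassified.
pixels = ["PET Sheet"] * 7 + ["PET Bottle"] * 3
result = pixel_class_majority(pixels)
print(result)  # PET Sheet
```

A majority vote makes the object-level result robust against a minority of misclassified pixels, for example along the edges of a plastic piece.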

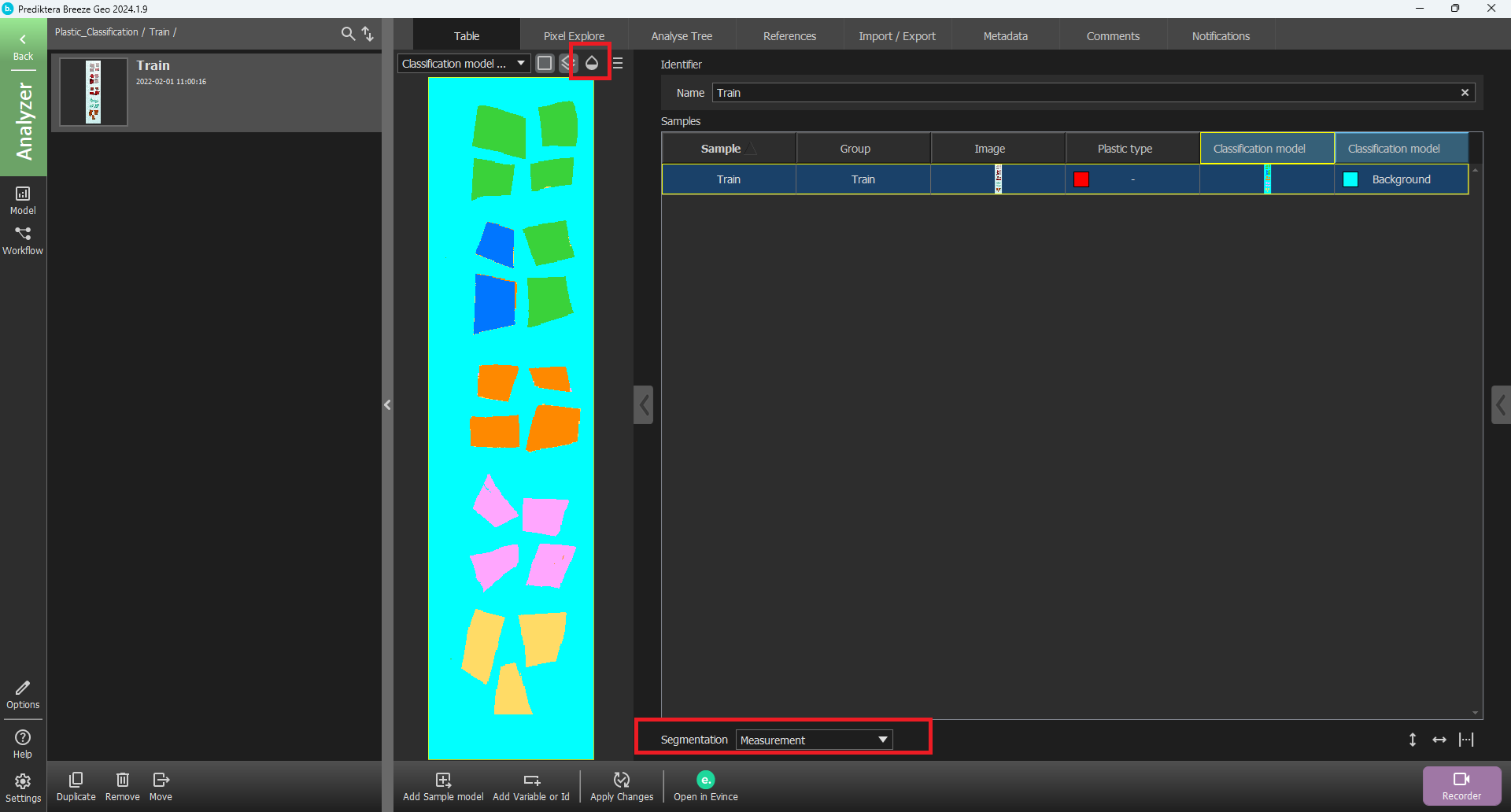

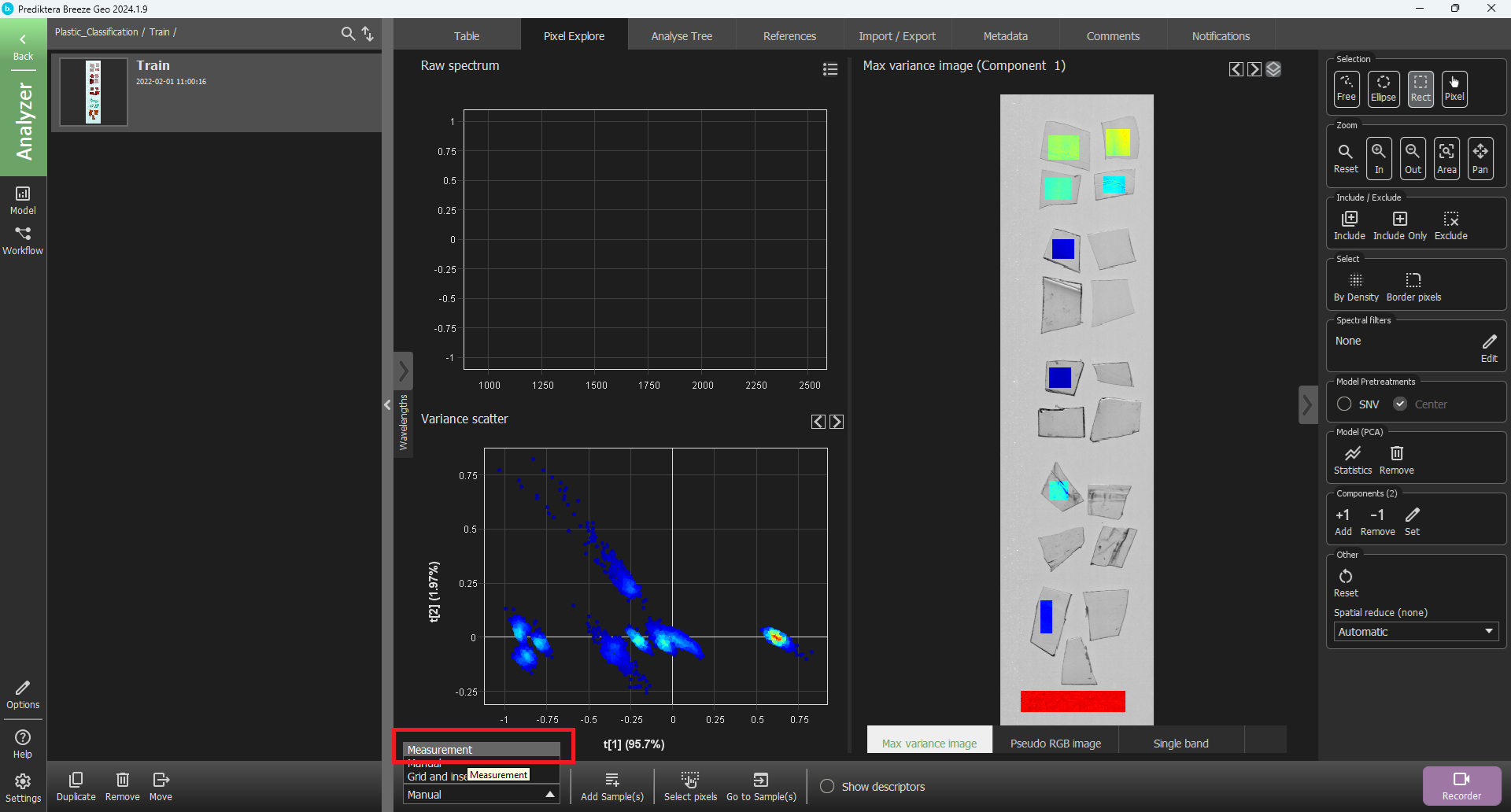

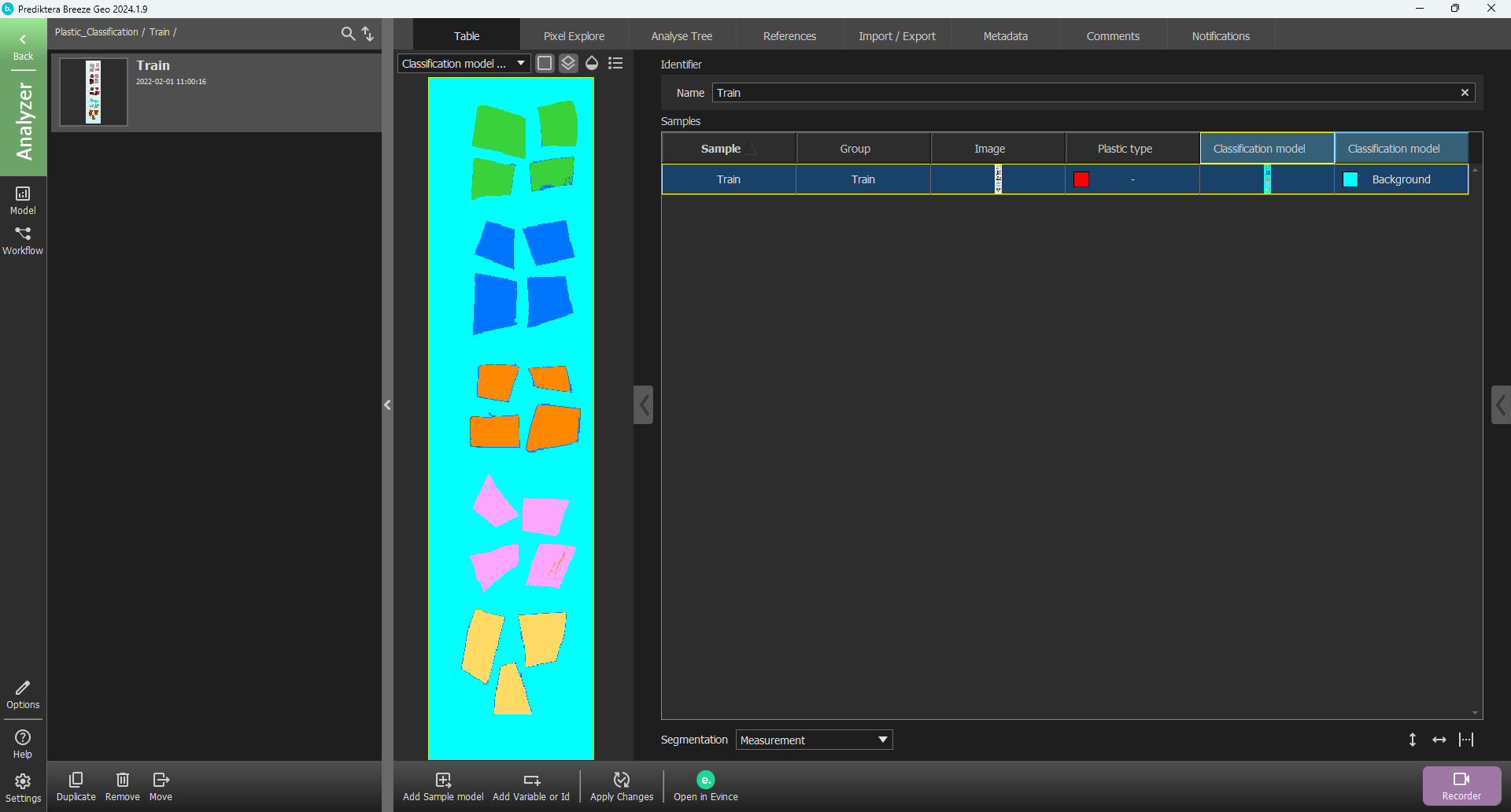

Go to the Table view again and press “Apply changes”. The classification model will now be applied to your image. Select the “Measurement” segmentation level and click in the column for the classification to see the results on the image. You can also press the button to add a legend for the image showing the color coding for the classes.

If you view the image with the “blend” button not selected it might be easier to see the difference between the different colors.

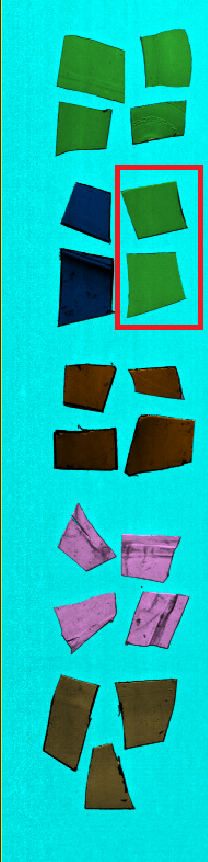

As you can see, in this case, two of the “PET Sheet” samples are wrongly classified as “PET Bottle”. To see if these can be correctly classified, we will add more of them to the training data.

Since these two classes seem to be a bit difficult to distinguish we will add the rest of the plastic bits for both of them to the training of the model.

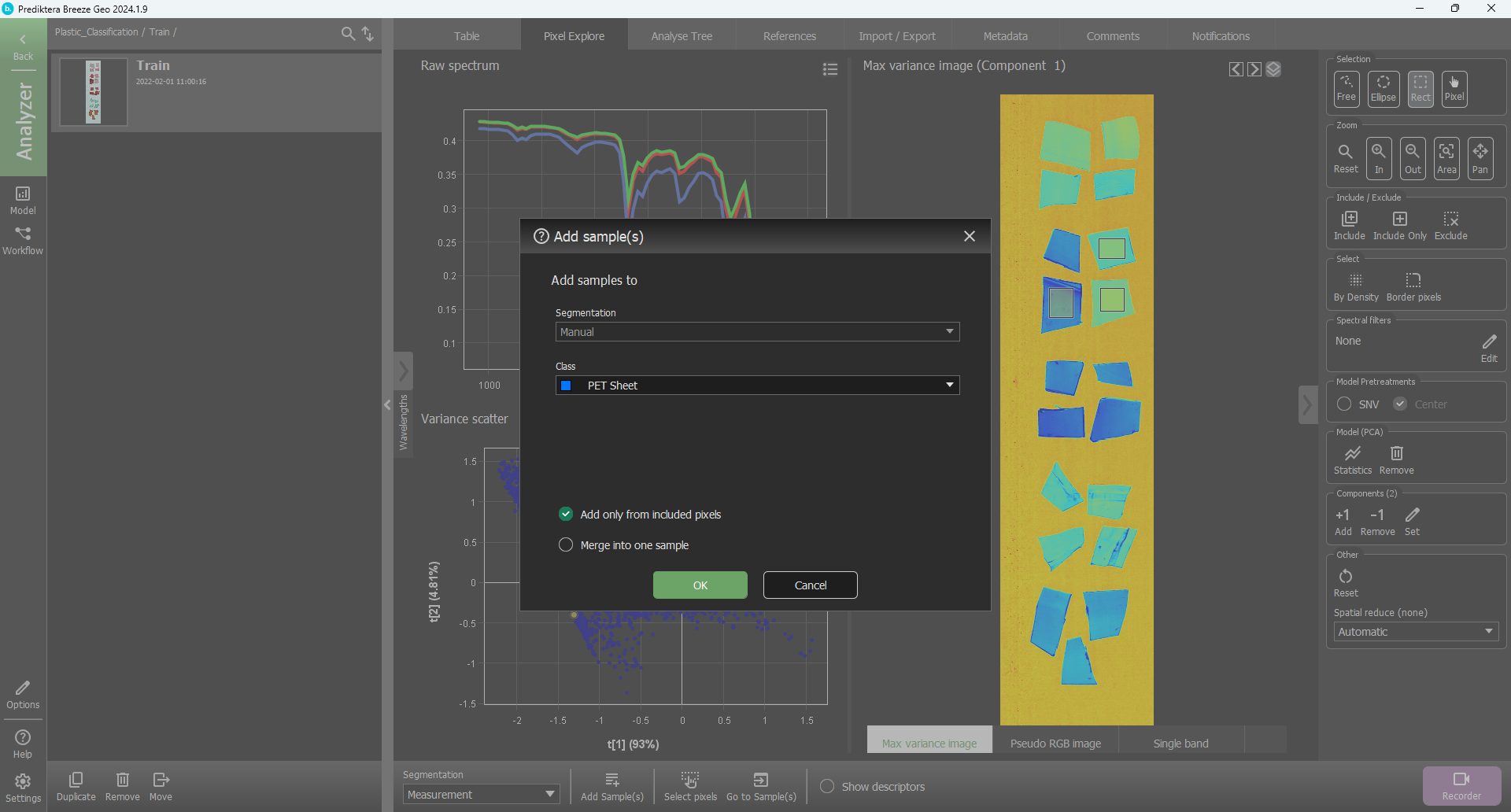

Go to “Pixel Explore” and select an area on each of the three unused pieces of PET Bottle (hold down Ctrl and select with the mouse).

Then press “Add Sample(s)” and select the “PET Bottle” class.

If you go to the Table view, you can see that this area has now been added to the “Manual” segmentation.

Go back to “Pixel Explore”.

As you can see, the view looks different. This is because we are now on the “Manual” segmentation level. To change this, open the “Segmentation” drop-down menu and select “Measurement”.

Now select the three pieces for PET Sheet.

Press “Add Sample(s)”, select “PET Sheet” as the class and press OK.

Go back to “Model” and press Retrain on the model we created earlier.

On step 1 press “Next”.

In Step 2, we need to add the new segments that we created. As the information panel shows, there are 432 samples in total, but only 216 are included.

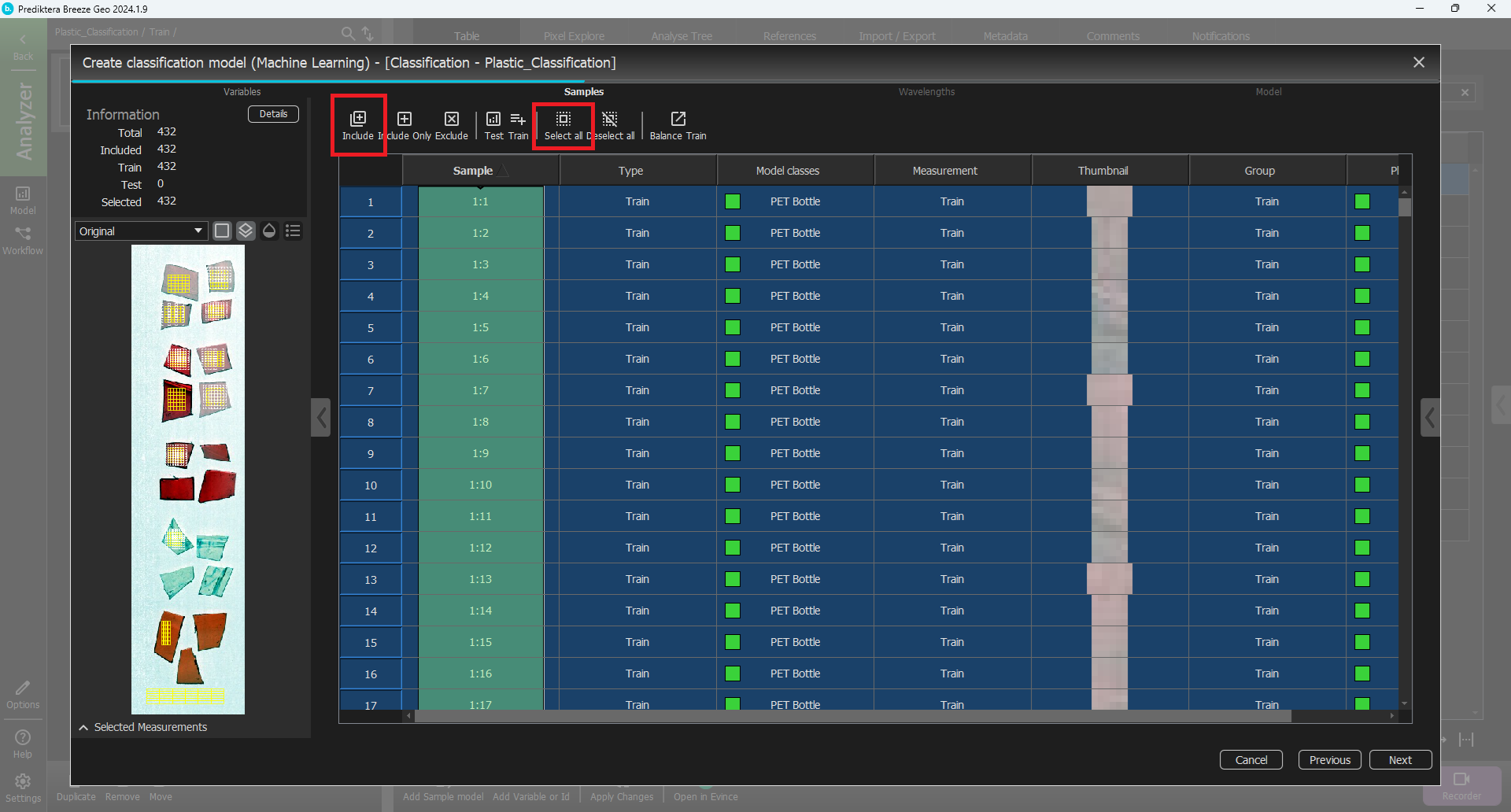

Press “Select all” and then “Include”

The information has now changed: all 432 grid segments are included in the Train column.

Press “Next”

In Step 3, press “Next” again.

In Step 4 of the modeling wizard, the model needs to be trained again, so press “Train”.

When the training is done, press “Finish”.

Close the model dialog, change the segmentation level in the table to “Measurement” and press “Apply changes”.

Click in the classification column to see the results on the image.

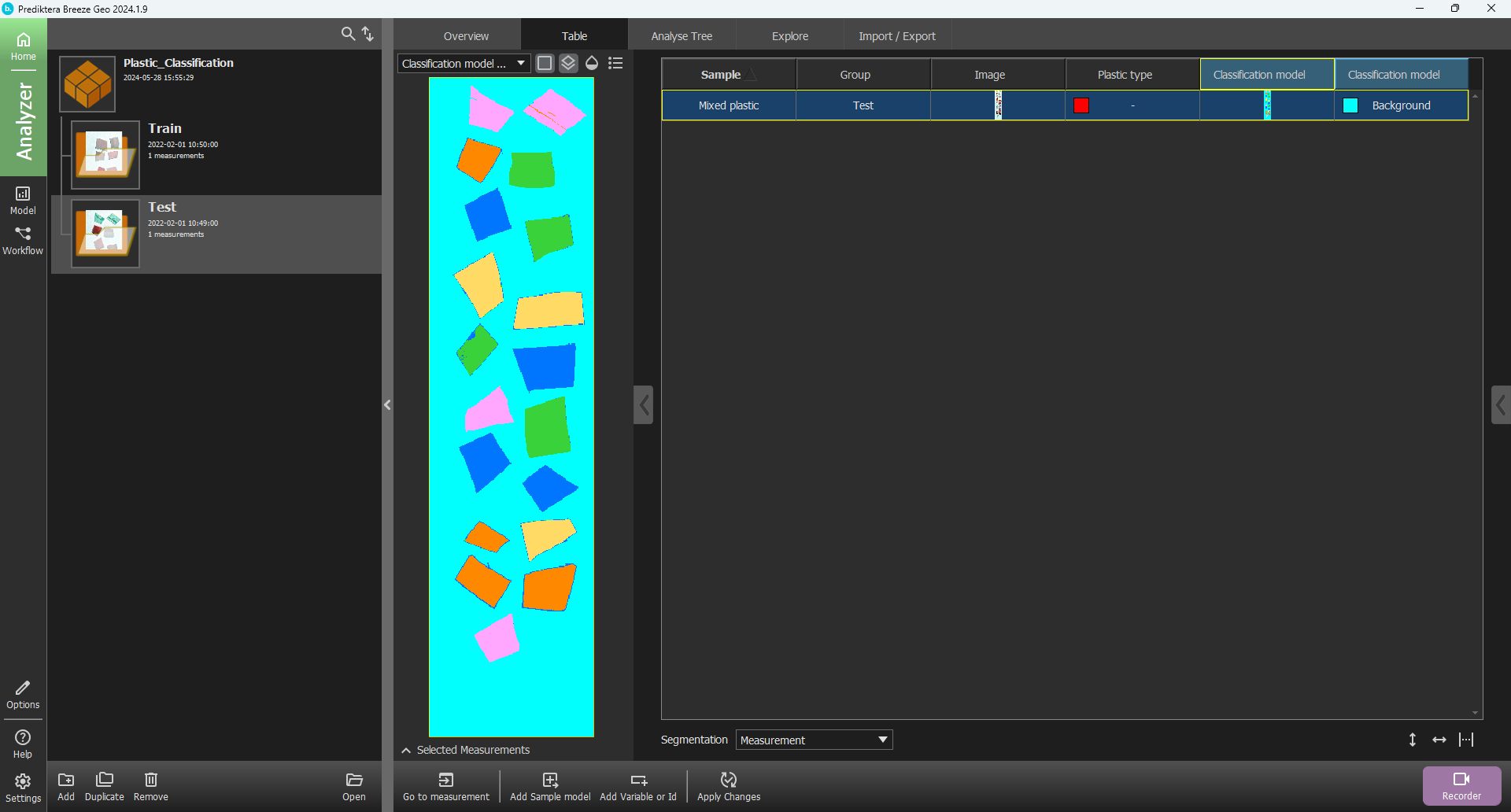

We can now see that everything is correctly classified on the “Train” image. Let’s go to the “Test” image and see how the model classifies these samples. Press “Back” to go to the Group level and select the “Test” image. Press “Apply changes” to see the classification. You should get results similar to those shown here.

Simulate real-time prediction

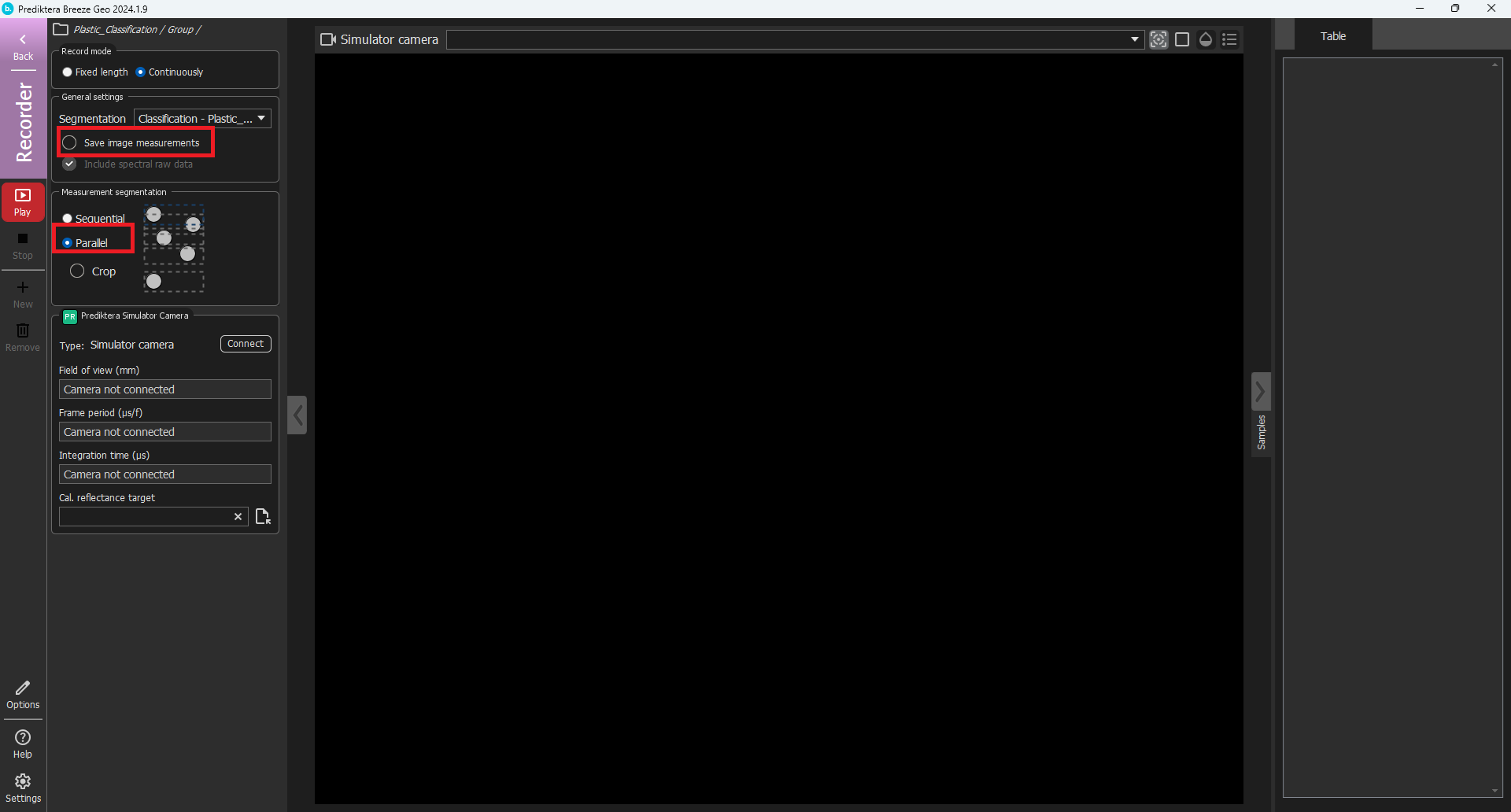

Now that you have created a model, we need to prepare the simulated environment.

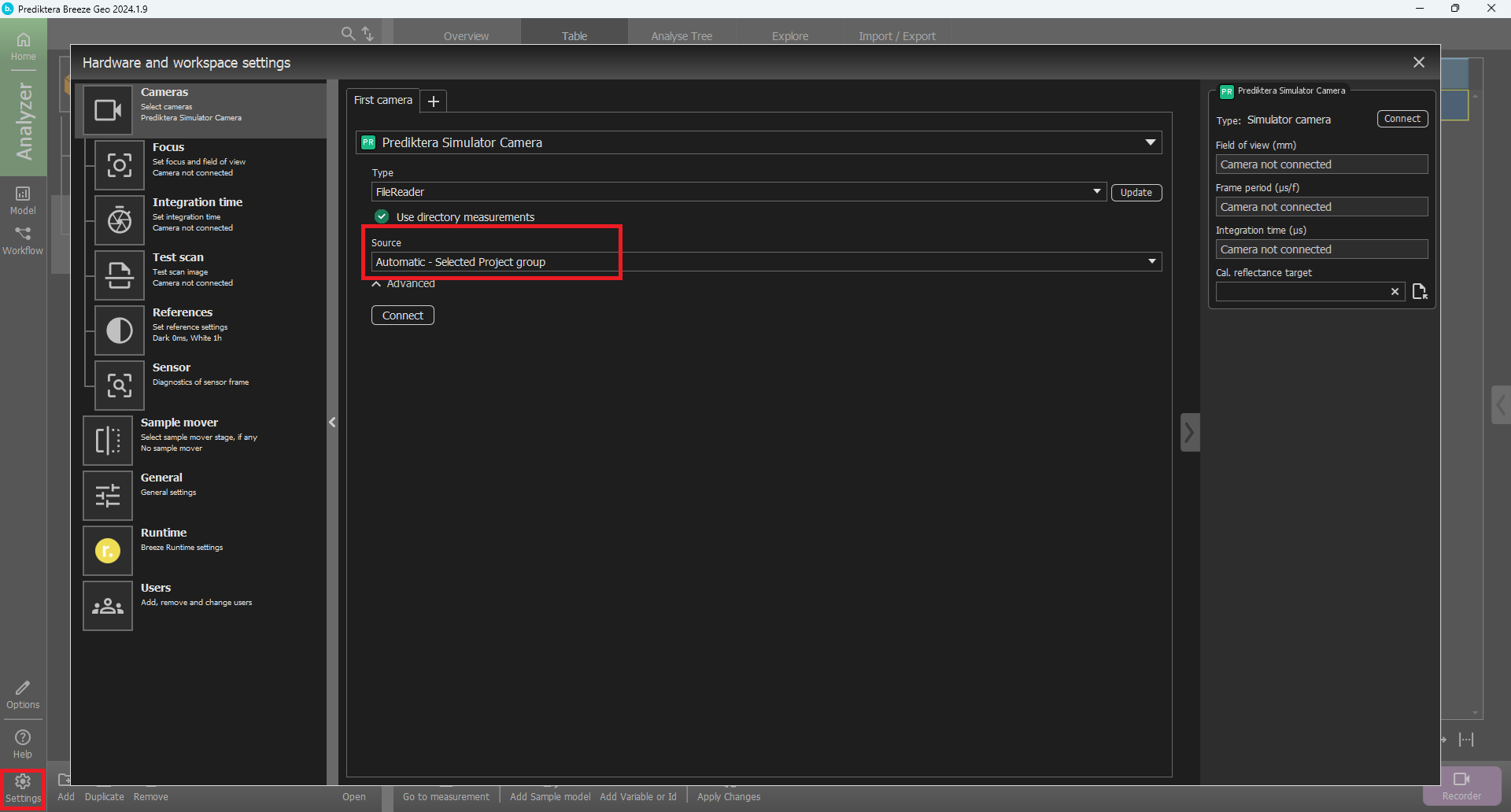

Press the “Settings” button

Select “Cameras” in the left table.

Make sure the Selected camera is “Prediktera Simulator Camera”.

The Type should be “FileReader” and the Source “Automatic - Selected project group”.

Then press “Connect”.

Press the “Close” button.

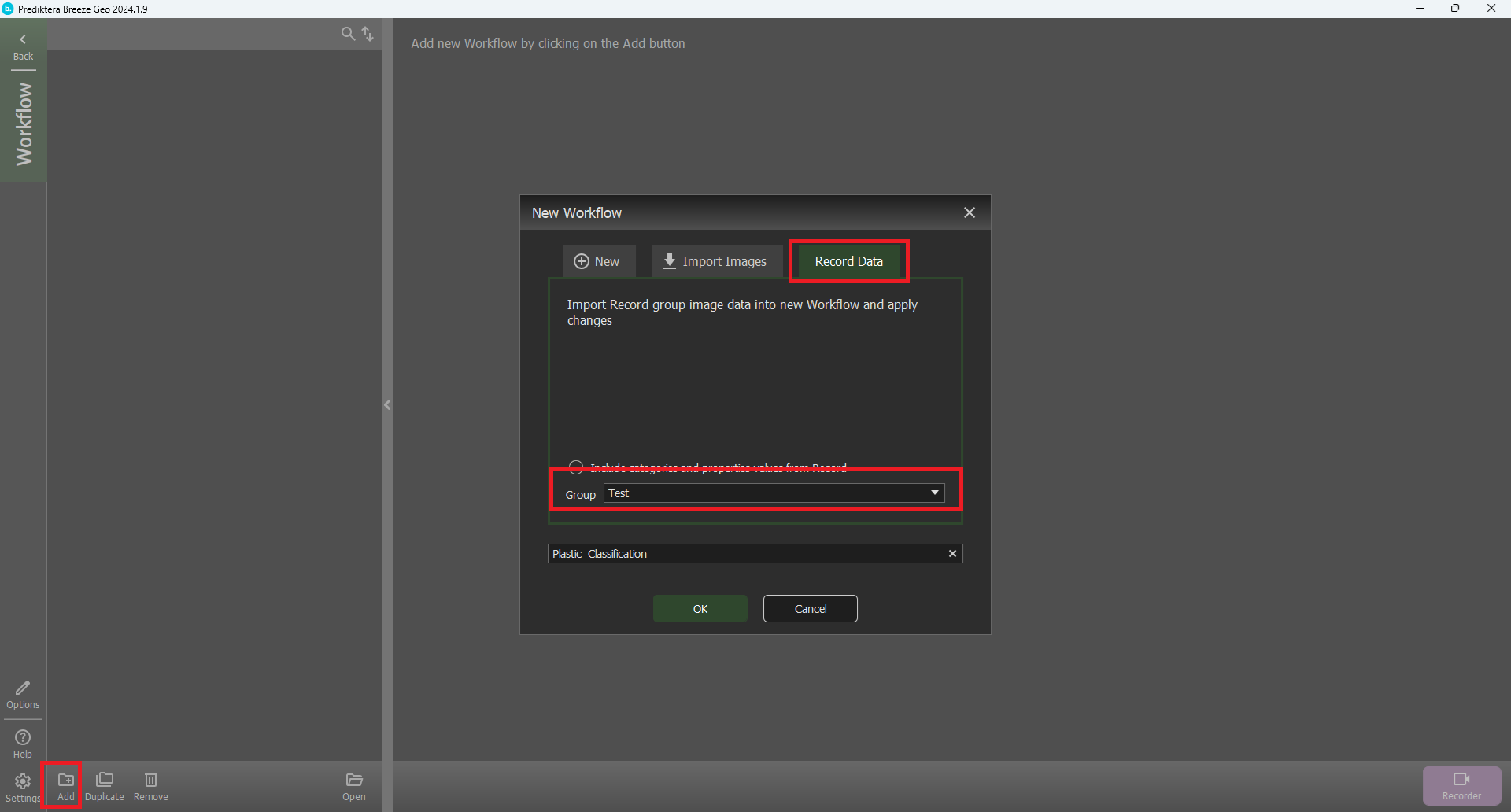

Press the “Workflow” button and select the Plastic classification project.

Press the “Add” button

Select the “Record Data” tab and select “Test” group. Press “OK”.

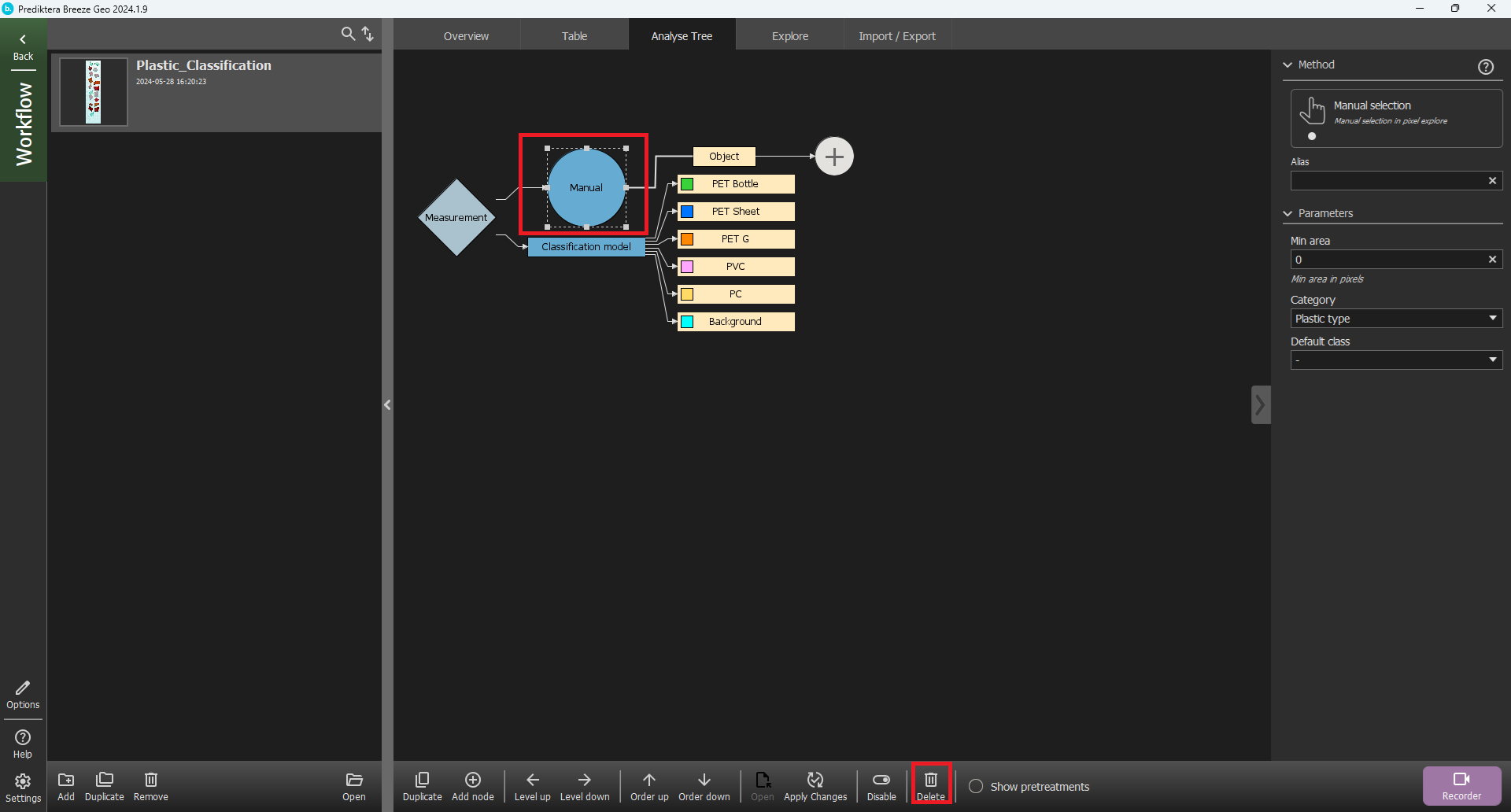

Go to the “Analyse Tree” tab, click on the “Manual” segmentation node and then press “Delete”.

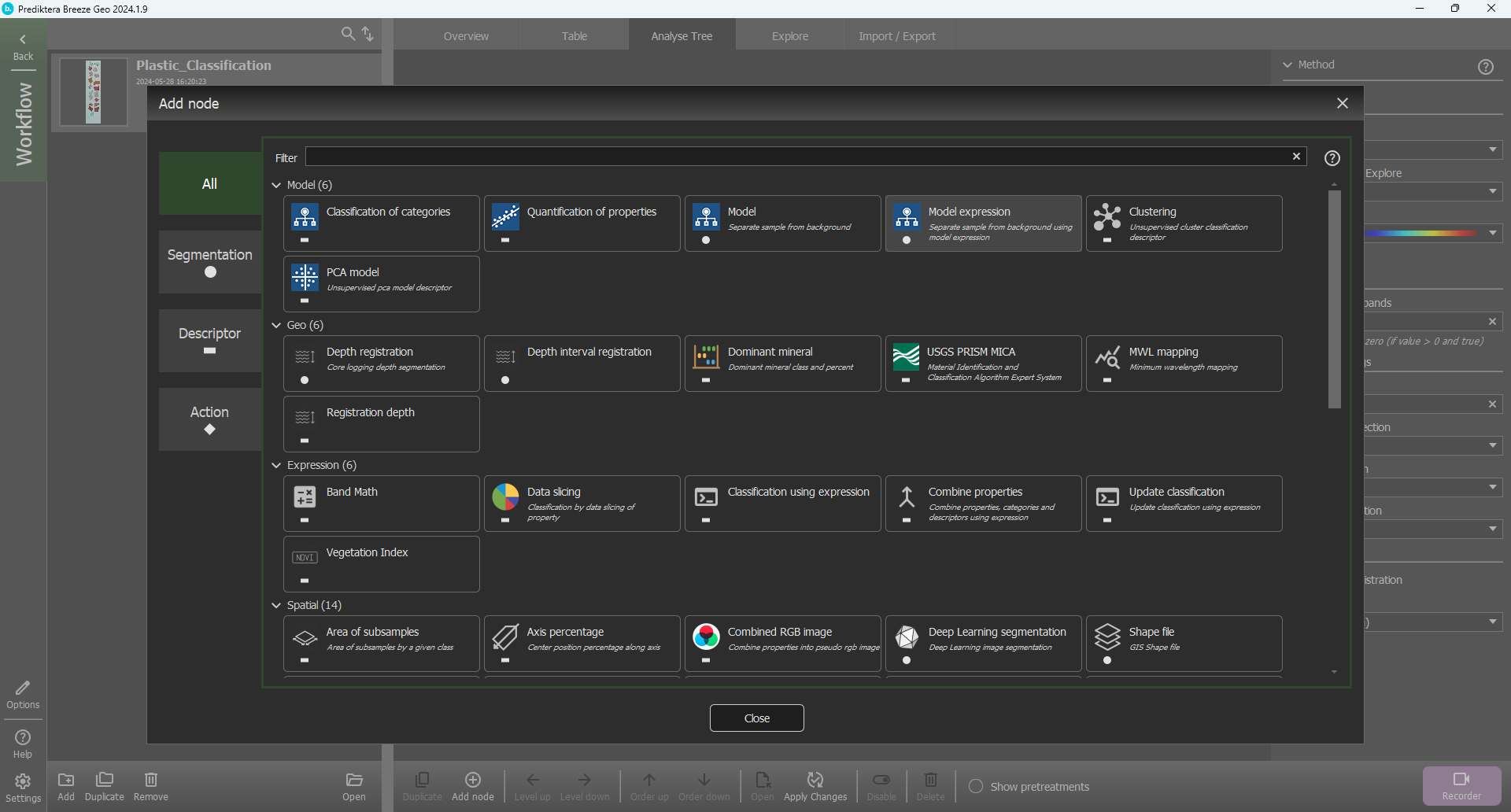

Click on the “Measurement” node and then on the plus sign that appears next to it. Then select “Segmentation” and “Model Expression”.

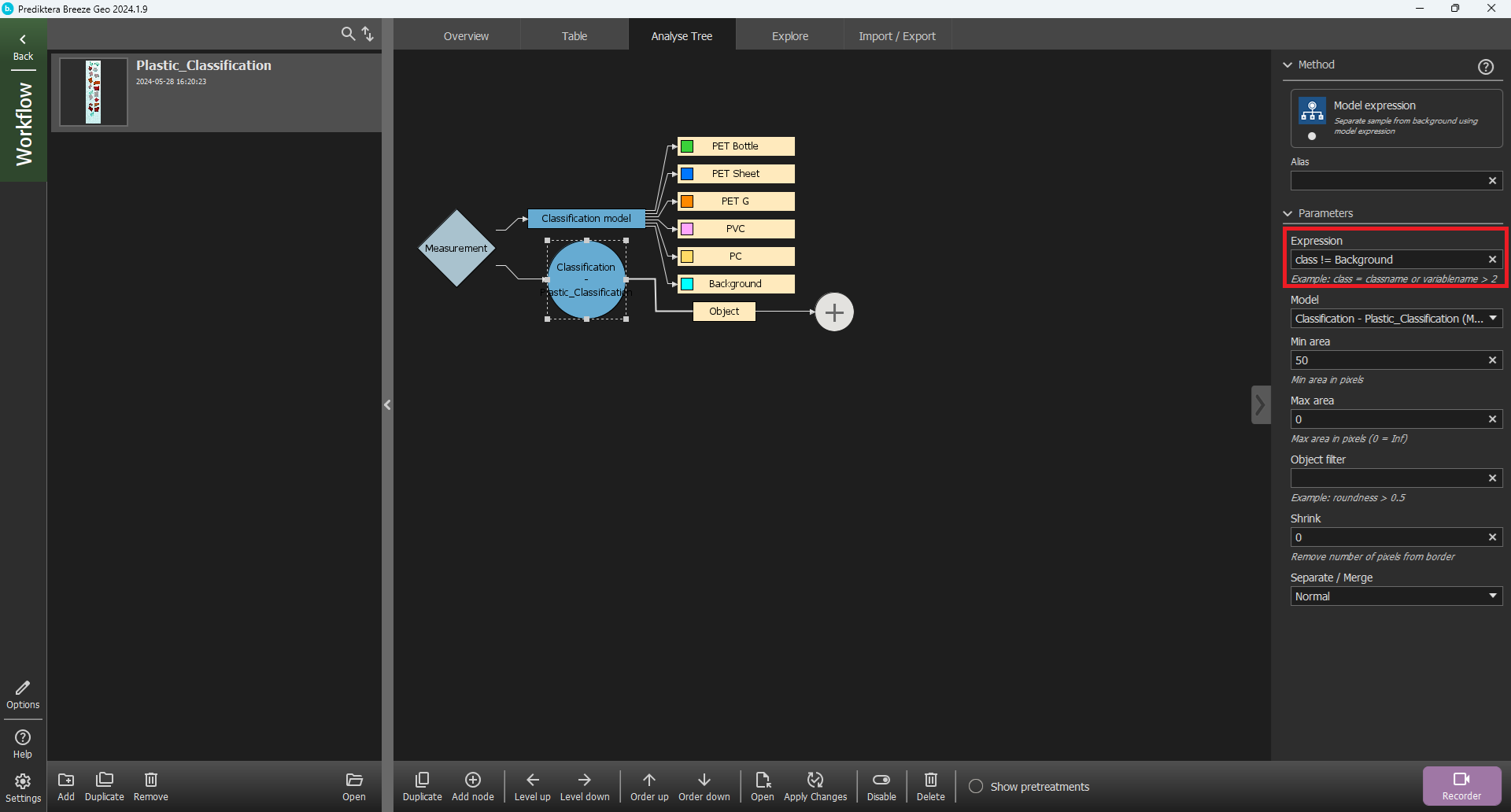

In the Expression field write Class != Background. This means that we will segment out pixels that are not from the “Background” class.
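Conceptually, an expression like this turns the per-pixel class map into a mask, and the masked pixels are then grouped into objects. The sketch below illustrates this on a tiny class map, grouping touching pixels (4-connectivity) with a breadth-first search. How Breeze groups pixels into objects internally is not described here, so the grouping rule, the function name and the class map are assumptions.

```python
from collections import deque


def segment_objects(class_map, background="Background"):
    """Group connected pixels whose class is not `background` into objects."""
    rows, cols = len(class_map), len(class_map[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            # Equivalent of the expression: keep pixels where Class != Background.
            if seen[r][c] or class_map[r][c] == background:
                continue
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and class_map[ny][nx] != background):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            objects.append(pixels)
    return objects


B = "Background"
class_map = [
    [B, "PVC", "PVC", B, B],
    [B, "PVC", "PVC", B, "PC"],
    [B, B,     B,     B, "PC"],
]
objects = segment_objects(class_map)
print(len(objects))               # 2
print([len(o) for o in objects])  # [4, 2]
```

Each resulting object can then be classified as a whole, which is why the classification model is moved below the new segmentation node in the next step.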

Click on the Classification model node and then press the “Level down” button to move the model to the end of the tree.

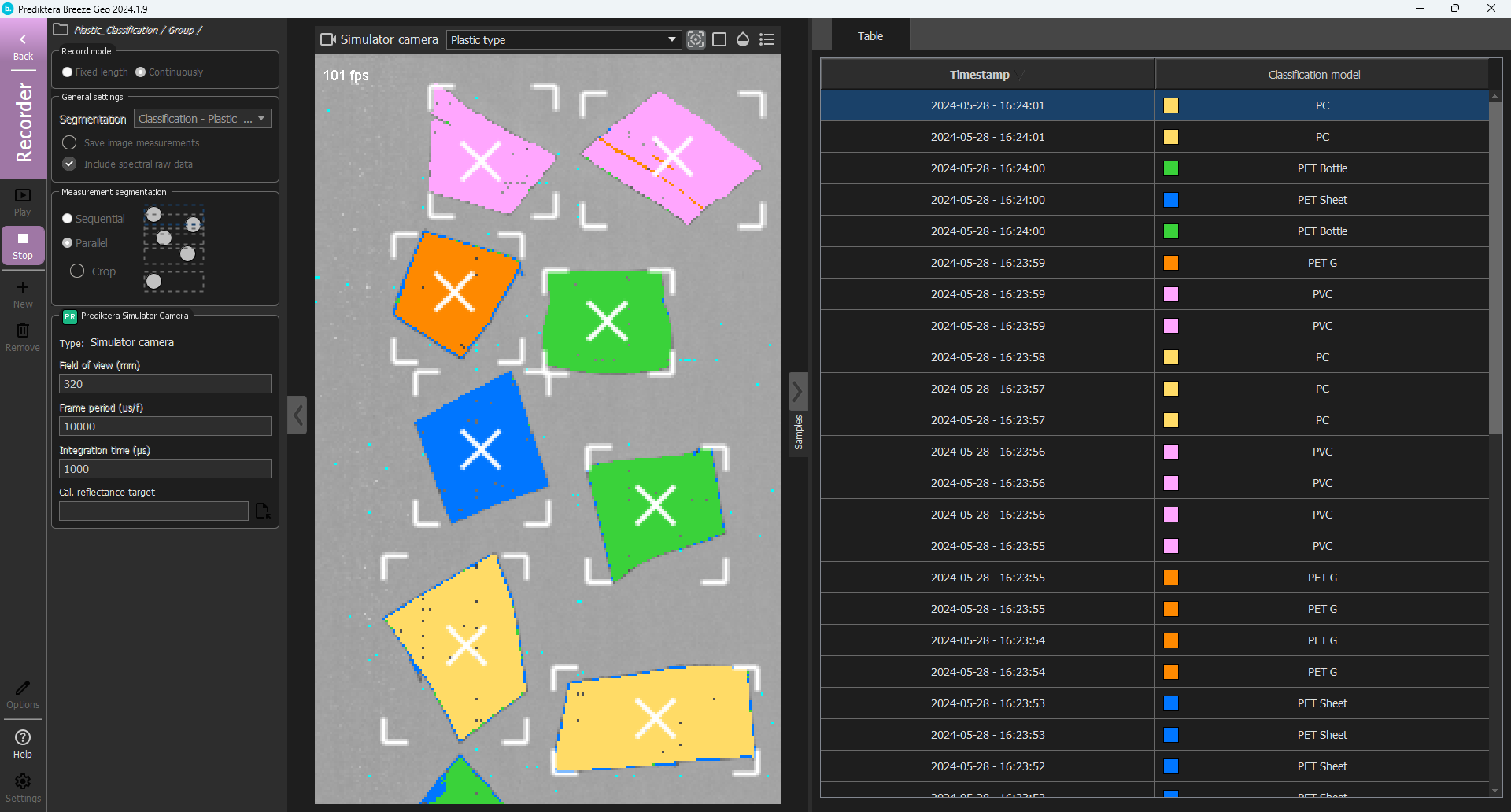

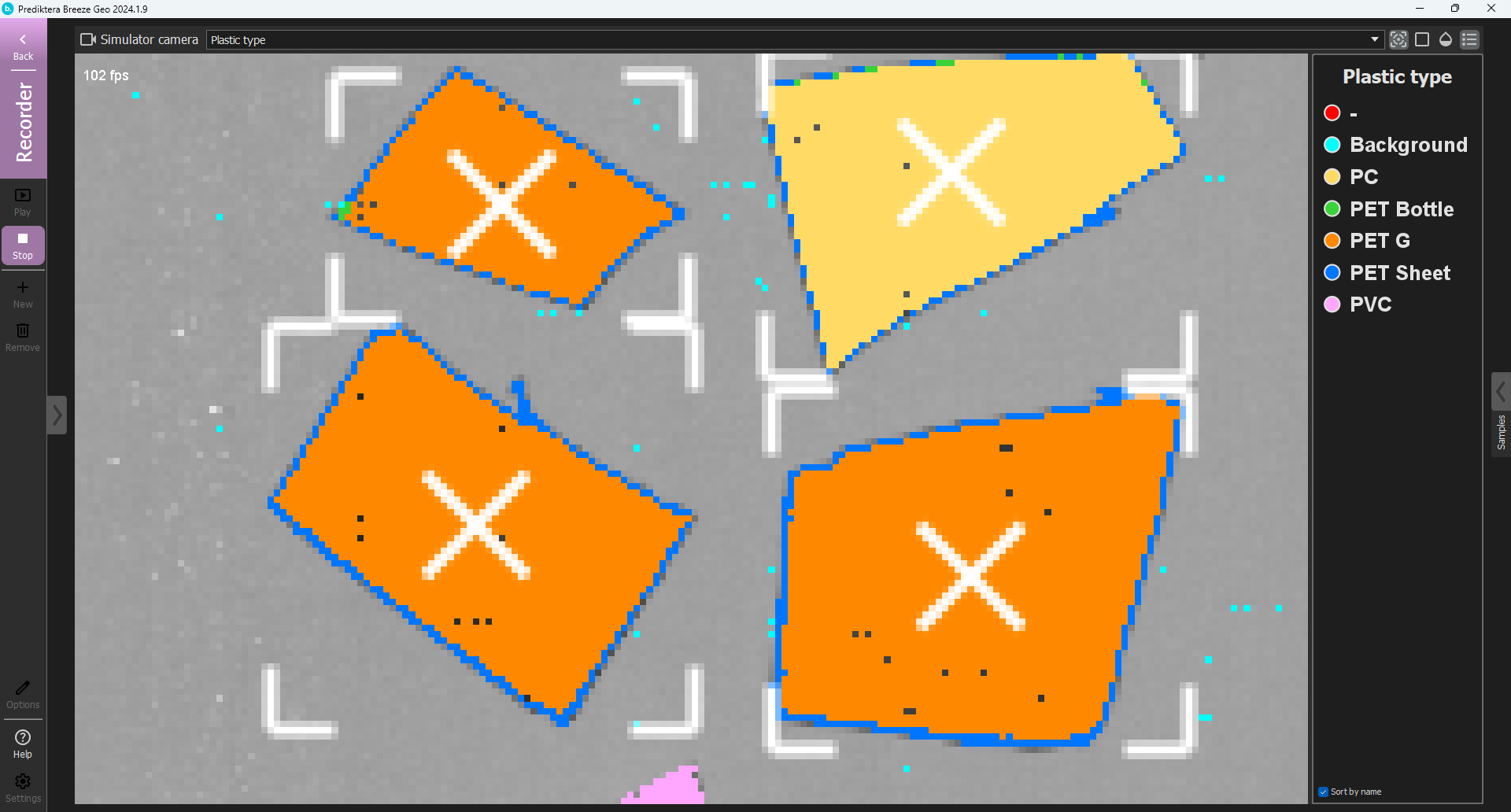

The classification model is now ready for real-time predictions. To test this, click on “Recorder”. The images that were used for training and testing the model will now be evaluated in a real-time scenario.

Uncheck the “Save image measurements and calculated descriptors” option and select “Parallel” measurement segmentation.

Click on “Play”.

The data will now be analyzed in a real-time mode with automatic segmentation of the plastic objects.

Press the button on the right side of the image to get a bigger view of the samples. You can turn the blend, legend and target tracking settings on or off for the visualization.

Good job! Watch the plastic classification roll by and receive a perfect classification. Click “Stop” to stop the predictions.