Overview

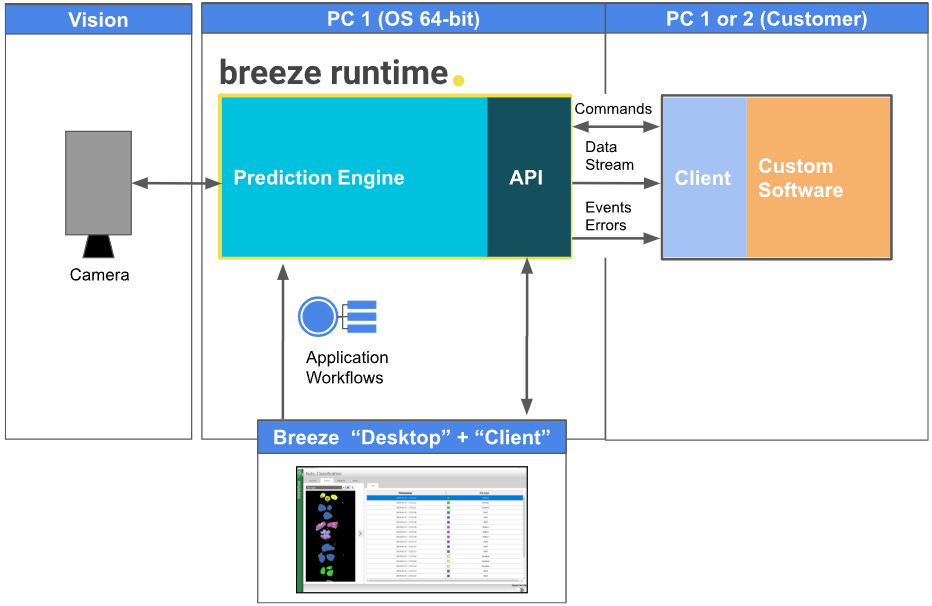

This overview shows the parts of a complete system setup that integrates Breeze Runtime with custom software. The custom software can run on PC 1 or on a separate PC 2.

Commands

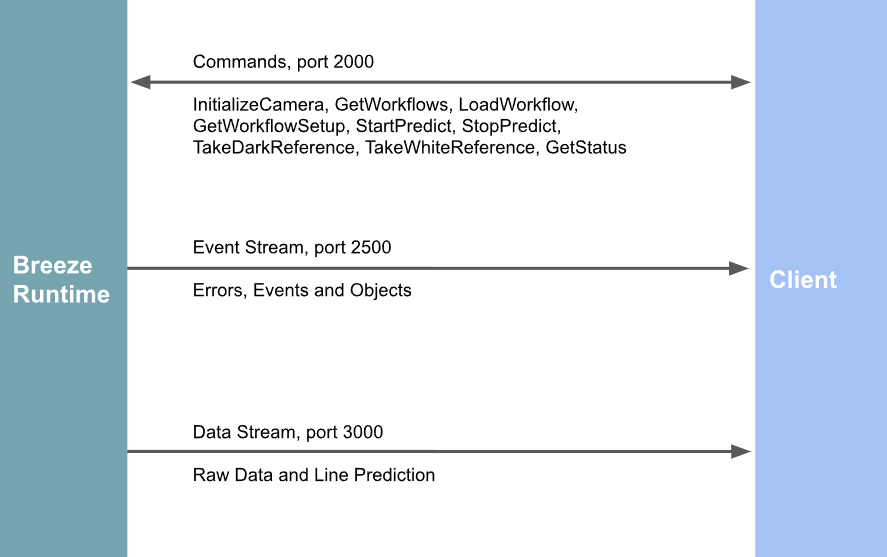

Commands and the server's replies are sent over TCP/IP on port 2000. The message format is unindented JSON terminated by CR + LF (ASCII 13 + ASCII 10).

You can use curl with the telnet protocol to communicate with Breeze Runtime and send commands:

curl -v telnet://<ip>:<port>

Example

echo -en '{"Command":"GetStatus","Id":"IdString"}\r\n' | nc <ip> <port>
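The same exchange can be sketched in Python. This is a minimal sketch rather than an official client: the function names `encode_command`, `decode_reply` and `send_command` are hypothetical, UTF-8 encoding is an assumption, and it assumes the server answers each command with exactly one CRLF-terminated JSON line.

```python
import json
import socket


def encode_command(command: dict) -> bytes:
    """Serialize a command as single-line JSON terminated by CR + LF."""
    text = json.dumps(command, separators=(",", ":"))
    return text.encode("utf-8") + b"\r\n"  # assumption: UTF-8 on the wire


def decode_reply(line: bytes) -> dict:
    """Parse one CRLF-terminated reply line into a dictionary."""
    return json.loads(line.decode("utf-8").strip())


def send_command(host: str, port: int, command: dict, timeout: float = 5.0) -> dict:
    """Send one command and block until a complete reply line has arrived."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command(command))
        buffer = b""
        while not buffer.endswith(b"\r\n"):
            chunk = sock.recv(4096)
            if not chunk:  # the server closed the connection
                break
            buffer += chunk
    if not buffer:
        raise ConnectionError("connection closed before a reply was received")
    return decode_reply(buffer)
```

A call such as `send_command("<ip>", 2000, {"Command": "GetStatus", "Id": "IdString"})` would then return the reply as a dictionary.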

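If the commands are stored in a file, one per line, make sure the line endings are CRLF. Either convert the line endings in place, for example with unix2dos, or convert them while sending to Breeze Runtime, for example with awk. Note that tr is not suitable here: it maps single characters one-to-one, so it cannot turn LF into the two-character sequence CR + LF.

awk '{ printf "%s\r\n", $0 }' multiple-commands-file.txt | nc <ip> <port>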

Camera commands

Initialize camera

Using Breeze default settings

Message:

{

"Command" : "InitializeCamera",

"Id" : "IdString"

}

Optional. Camera specific settings

Message:

{

"Command" : "InitializeCamera",

"Id" : "IdString",

"DeviceName" : "SimulatorCamera",

"RequestedPort" : 3000,

"TimeOut" : 5 // (seconds)

...

}

- The optional parameter RequestedPort can be used to set the data stream port, which defaults to 3000.
- The optional parameter TimeOut is given in seconds. It is used, for example, when waiting for the camera to become stable.
- The command can have any number of camera-specific arguments (see table below).

Reply:

{

"Id" : "IdString",

"Success" : true

}

Available camera manufacturers

HySpex

HySpex SDK

- Swir, Vnir, etc.

{

"DeviceName" : "HySpexCamera",

"SetFilePath" : "<path>\<SetFileDirectory>",

"CameraType" : "VNIR_1800_SN0940",

"RequestedPort" : 3000, // optional; default = 3000

"ControlMode" : "disabled", // optional; default = "disabled" (disabled, master)

"LensId" : "0", // optional; default = "0"

"FramesToAverage" : 1, // optional; default = 1

"MotorType" : "translation", // optional; default = "translation" (translation, rotation)

"BufferSize" : 1024, // optional; default = 1024

"Trigger" : "default", // optional; default = "default" (default, external, internal)

"BufferSizePreProcessing" : 128, // optional; default = 128

"ImageOption" : "RAW_BP", // optional; default = "RAW_BP" (RAW, BGSUB, RE, RAW_BP, HSNR_RAW, HSNR_RE, HSNR_RAW_BP)

}

For more information see HySpex hardware installation

Specim

LUMO SDK

- Swir, FX-10, FX-17, Mwir, etc.

{

"DeviceName" : "SpecimCamera",

"CameraType" : "FX17 with NI", // Camera name from Lumo or "FileReader",

"ScpFilePath" : "<path>\<file>.scp"

}

For more information see Specim hardware installation guide

Specim FX-50

JSON-RPC 2.0 standard.

{

"DeviceName" : "GenericeCamera",

"CameraType" : "Cortex",

"HostIp" : "127.0.0.1",

"TimeOut" : 5, //optional; default = 5

"TimeoutExceptionMs" : 5000, // optional; default = 5000

"InitializeTimeOut" : 5, // optional; default = TimeOut

"StableTimeOut" : 5, // optional; default = TimeOut

"PreprocessingTimeOut" : 5, // optional; default = TimeOut

"BinningSpatial" : 1, // optional; default = 1

"BinningSpectral" : 1, // optional; default = 1

"BufferSize" : 100 // optional; default = 100

}

For default values on initialization and more, see Specim FX50.

INNO-SPEC

INNO-SPEC SDK (deprecated)

{

"DeviceName" : "InnoSpecCamera",

"CameraType" : "RedEye",

"MissingPixelsFilePath" : "<path>\<file>.xxx" // (optional)

}

INNO-SPEC Photon Focus-based camera

{

"DeviceName" : "InnoSpecPhotonFocusCamera",

"CameraType" : "GigE Camera - XXX.YYY.ZZZ.LLL",

"BinningSpatial" : 1, // Only applicable when no config file used - Default = 1

"BinningSpectral" : 1, // Only applicable when no config file used - Default = 1

"ConfigFilePath" : "C:\\Users\\user\\Documents\\InnoSpec\\HSI-17-121421.toml", // Optional

"CorrectionMode" : "OffsetGainHotpixel", // optional, default = "OffsetGainHotpixel"

"ModeId" : "1x1", // Only applicable when using config file

"SpatialRoi" : "", // Optional

"SpectralRoi" : "1", // Optional

"TriggerActivation" : "RisingEdge",

"TriggerDelay" : 0, // Default = 0

"TriggerMode" : false, // Default = false

"TriggerSource" : "Line0"

}

Where XXX.YYY.ZZZ.LLL is the IP address of the camera

See INNO-SPEC for more information on the different properties.

Basler

Pylon SDK

{

"DeviceName" : "BaslerCamera",

"CameraType" : "Basler ac1920-155um 12345", // where `ac1920-155um` is the model name and `12345` is the camera serial number

"BinningHorizontal" : 1, // optional; default = 1

"BinningVertical" : 1 // optional; default = 1

}

Detection Technology

Detection Technology - X-ray sensor with Ethernet connection

{

"DeviceName" : "DeeTeeCamera",

"CameraType" : "X-Scan",

"HostIp" : "127.0.0.1",

"SpatialBinning" : 0, //optional; default : 0

"BufferSize" : 100, //optional; default : 100

"TimeoutExceptionMs" : 5000, //optional; default : 5000

"TimeOut" : 20 //optional; default : 20

}

Calibration data

{

"Ob" : 52000,

"DynCalEnable" : false,

"DynCalLines" : 100,

"DynCalThreshold" : 0.01,

"DynCalNbBlockSaved" : 10

}

Geometric calibration

{

"GeoCorrEnable" : true,

"SD" : 1160,

"DLE" : 36.411,

"DHE" : 40.600,

"CenterPixel" : 458.5

}

For additional start up settings and in-depth explanations see Detection Technology camera

Prediktera

FileReader test camera.

{

"DeviceName" : "SimulatorCamera"

}

If only DeviceName is specified, a simulated camera with 9 frames and an image width of 10 pixels will be used. The frames contain one object. The frames wrap around: the 10th frame will be the same as the first one, and so on. The CameraType will be "SimulatorCamera" (see below). By specifying the data files to use, any raw data stream can be replayed, e.g.:

{

"RawDataFilePath" : "Data\\Nuts\\measurement.raw",

"DarkReferenceFilePath" : "Data\\Nuts\\darkref_measurement.raw",

"WhiteReferenceFilePath" : "Data\\Nuts\\whiteref_measurement.raw",

"CameraType" : "NutsSimulatorCamera"

}

- The file paths are relative to the directory where BreezeRuntime.exe is located.
- The parameter CameraType can be any text, and the CameraType field in the reply from the GetStatus command will be set to this text.
- See Running Breeze Runtime with Breeze Client, at the end of this page, for instructions on how to download and install the nuts test data, which should be used in conjunction with the workflow with ID “NutsWorkflow”.

Unispectral

Unispectral Monarch Camera - USB-C

{

"CameraType" : "Monarch II",

"DarkReferenceValue" : 64,

"DeviceName" : "UnispectralCamera",

"Gain" : 1, // optional; default = 1

"BinningSpatial" : 1, // optional; default = 1

"BinningSpectral" : 1, // optional; default = 1

"SpectralRoi" : "0;0;0;0;1;1;1;1;1;0" // optional; default = "1;1;1;1;1;1;1;1;1;1"

}

SpectralRoi = "1" is equivalent to "1;1;1;1;1;1;1;1;1;1" - meaning all bands are included

For more information see: Unispectral

Resonon

Resonon Basler-based cameras (Pika L, XC2, and UV), see Resonon

USB or Ethernet connected cameras.

May require drivers to be installed on the computer. See Basler.

{

"DeviceName" : "ResononBaslerCamera",

"CameraType" : "BaslerCamera serial-number",

"BinningHorizontal" : 1, // optional; default = loaded from Resonon camera specific settings

"BinningVertical" : 1 // optional; default = loaded from Resonon camera specific settings

}

serial-number is the serial number device id specified on the camera

Resonon Allied Vision-based cameras (Pika IR, IR+, IR-L, and IR-L+)

{

"DeviceName" : "ResononAlliedVisionCamera",

"CameraType" : "GigE device-id",

"BinningHorizontal" : 1, // optional; default = loaded from Resonon camera specific settings

"BinningVertical" : 1 // optional; default = loaded from Resonon camera specific settings

}

device-id is the device id specified on the camera

Qtechnology

qtec C-Series

{

"DeviceName" : "ServerCamera",

"CameraType" : "Server",

"Host" : "10.100.10.10",

"PixelFormat" : "short", // optional; default = "short"

"SpectralRoi" : "1", // optional; default = "1" - all wavelengths included

"BinningSpatial" : 1, // optional; default = 1

"BinningSpectral" : 1 // optional; default = 1

}

For more information see: Qtechnology

Prediktera Data Server

{

"DeviceName" : "DataServerCamera",

"CameraType" : "Server",

"Port" : 2200,

"Width" : 384,

"Height" : 31,

"Wavelength" : "1164.52;1209.84;1255.04;1300.16;1345.2;1390.17;1435.09;1479.97;1524.81;1569.64;1614.45;1659.25;1704.05;1748.85;1793.66;1838.47;1883.3;1928.12;1972.95;2017.79;2062.62;2107.45;2152.26;2197.05;2241.81;2286.53;2331.2;2375.81;2420.34;2464.78;2509.12",

"MaxSignal" : 1, // optional; default = 1

"DataSize" : "Float" // optional; valid values "Byte", "Short", "Float", "Double"

}

Changes in MaxSignal need to be reflected in DataSize and vice versa.

For more information see: Prediktera Data Server

Disconnect camera

Message:

{

"Command" : "DisconnectCamera",

"Id" : "IdString",

"CameraId" : 0 // Optional if one (1) camera, mandatory if more than 1 camera

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : "Success"

}

Get camera property

Message:

{

"Command" : "GetCameraProperty",

"Id" : "IdString",

"CameraId" : 0, // Optional if one (1) camera, mandatory if more than 1 camera

"Property" : "FrameRate"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : "100"

}

Below is a list of properties that all logical cameras have. Some physical cameras cannot be queried for some of these properties, in which case the logical camera returns a default value. Some logical cameras have properties that are not listed here but can still be retrieved. For example, the camera “SimulatorCamera” has the property UniqueProperty (Float, 32 bits) to demonstrate this feature. It also has the property “State”, which can be used for simulating dark and white references (see commands TakeDarkReference and TakeWhiteReference).

| Property | Original Data Type | Comment |
|---|---|---|
| | Float, 32 bits | µs |
| | Float, 32 bits | Hz |
| | Boolean | |
| | Integer, 32 bits | No of pixels |
| | Integer, 32 bits | No of pixels |
| | string | E.g. |
| | Integer, 32 bits | Maximum signal value |
| | Float, 32 bits | Sensor temperature |
| | Integer | 1 = Byte, 2 = Short (Integer, 16 bits), 4 = Float (Float, 32 bits), 8 = Double (Float, 64 bits) |
| | Integer | 1 = BIL (Band Interleaved by Line), 2 = BIP (Band Interleaved by Pixel) |
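The data-size and interleave codes in the last two rows lend themselves to lookup tables on the client side. The following is a rough sketch, not part of the Runtime API: the names `DATA_SIZE_FORMATS`, `INTERLEAVE_NAMES` and `unpack_samples` are hypothetical, the choice of unsigned types for Byte and Short is an assumption (the table does not state signedness), and little-endian byte order is assumed.

```python
import struct

# Data size code -> (name, struct format character).
# Assumption: Byte and Short are unsigned; the table does not say.
DATA_SIZE_FORMATS = {
    1: ("Byte", "B"),
    2: ("Short", "H"),
    4: ("Float", "f"),
    8: ("Double", "d"),
}

# Interleave code -> name, as listed in the table.
INTERLEAVE_NAMES = {1: "BIL", 2: "BIP"}


def unpack_samples(payload: bytes, data_size_code: int) -> tuple:
    """Decode a buffer of samples whose width is given by the data size code."""
    _name, fmt = DATA_SIZE_FORMATS[data_size_code]
    count = len(payload) // data_size_code  # the code equals bytes per sample
    # "<" selects little-endian byte order (an assumption for raw samples).
    return struct.unpack(f"<{count}{fmt}", payload[: count * data_size_code])
```

Because each code equals the number of bytes per sample, the sample count follows directly from the buffer length.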

Set camera property

Message:

{

"Command" : "SetCameraProperty",

"Id" : "IdString",

"CameraId" : 0, // Optional if one (1) camera, mandatory if more than 1 camera

"Name" : "FrameRate",

"Value" : "200"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : "200"

}

Below is a list of properties that all logical cameras have. Some physical cameras cannot have some of these properties set, in which case the set operation has no effect. Some logical cameras have properties that are not listed here but can still be set. For example, the camera “SimulatorCamera” has the property UniqueProperty (Float, 32 bits) to demonstrate this feature. It also has the property "State", which can be used when simulating dark and white references (see commands TakeDarkReference and TakeWhiteReference).

| Property | Original Data Type | Comment |
|---|---|---|
| | Float, 32 bits | µs |
| | Float, 32 bits | Hz |

Raw-data capture commands

These commands start and stop recording of raw data from the camera. Data can be obtained in client code from Data stream (stream type 1), or saved to disk on the Runtime PC. Saving to disk happens when recording is stopped with Stop capture.

Raw-data capture corresponds to what happens when Test Scan is performed in Breeze Settings for a camera.

You cannot capture raw data at the same time as executing a workflow (see Start predict). If you want the Runtime to save raw data you can use the CaptureOnPredict command.

Start capture

Start the raw-data capture.

Message:

{

"Command" : "StartCapture",

"Id" : "IdString",

"CameraId" : 0, // Optional if one (1) camera, mandatory if more than 1 camera

"NumberOfFrames" : 10,

"Folder" : "Path to folder" //Optional

}

The parameter NumberOfFrames is optional; if omitted, the camera will capture until StopCapture is sent.

The parameter Folder is optional; if provided, the Runtime will store the captured frames in a measurement.raw file in the chosen folder.

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Stop capture

Stops capturing raw data, and saves to a measurement on disk, if the Folder option was used (see Start capture).

Message:

{

"Command" : "StopCapture",

"CameraId" : 0, // Optional if one (1) camera, mandatory if more than 1 camera

"Id" : "IdString"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Prediction commands

Get workflows

Message:

{

"Command" : "GetWorkflows",

"Id" : "IdString",

"IncludeTestWorkflows" : true

}

The parameter IncludeTestWorkflows specifies whether the test workflows bundled with Breeze Runtime (see below) should be included. If the parameter is false or omitted, only the workflows created by the user with Breeze are included.

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : "

[

{

"Name": "Test Workflow",

"Id": "TestWorkflow",

....

Load workflow

Loads a workflow to use for predictions, and returns detailed information about the workflow. Only one workflow can be active at a time in the Runtime.

Message:

{

"Command" : "LoadWorkflow",

"Id" : "IdString",

"WorkflowId" : "TestWorkflow",

"UseReferences" : true,

"Xml" : null, // can be omitted

"FieldOfView" : 10.5

}

- To load a server-side workflow, set the parameter WorkflowId to one of the IDs in the reply from GetWorkflows.
- To load a client-side workflow, set the parameter Xml to the contents of a client-side workflow XML file.
- Either WorkflowId or Xml must be specified, but not both.
- FieldOfView is optional. If omitted, the value for the current camera is taken from the settings in Breeze on the local computer.

Reply:

The reply to this command contains detailed information on workflow in the returned Message JSON object. Specifically these parts of the Message are useful to understand the data returned in the Runtime’s Event stream and Data stream:

- The Settings/PredictionMode value Normal corresponds to the Pixel Prediction Lines document settings for the data stream.
- The value of ObjectFormat and its Descriptors value contain definitions of the JSON data for each object in the Event Stream.
- The StreamFormat value is an object defining the data in the binary Data Stream. Since the stream contains binary data, you need this information to parse it. The value of Type for each descriptor in the StreamFormat/Lines object specifies the size of the data: Type: Property means a quantitative value stored as a 32-bit float; Type: Category means a byte containing a class index.
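The size rule for descriptor types can be turned into a small helper. This is only a sketch under stated assumptions: it assumes each descriptor is a JSON object with a "Type" key, as the text suggests, and the names `TYPE_FORMATS`, `bytes_per_pixel` and `pixel_struct_format` are hypothetical. It does not claim to describe the full layout of a prediction line.

```python
import struct

# Descriptor Type -> struct format character.
# Property is a 32-bit float, Category is a single byte (class index).
TYPE_FORMATS = {"Property": "f", "Category": "B"}


def pixel_struct_format(descriptors: list) -> str:
    """Build a little-endian struct format with one field per descriptor."""
    return "<" + "".join(TYPE_FORMATS[d["Type"]] for d in descriptors)


def bytes_per_pixel(descriptors: list) -> int:
    """Number of bytes needed to hold one value for every descriptor."""
    return struct.calcsize(pixel_struct_format(descriptors))
```

For one Category descriptor and two Property descriptors this gives 1 + 4 + 4 = 9 bytes.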


{

"Id" : "IdString",

"Success" : true,

"Message" :

"{

"Name": "Test Workflow",

....

CreatedTime has the format yyyyMMddhhmmss.

Delete workflow

Message:

{

"Command" : "DeleteWorkflow",

"WorkflowId" : "IdString"

}

Open shutter

Message:

{

"Command" : "OpenShutter",

"CameraId" : 0, // Optional if one (1) camera, mandatory if more than 1 camera

"Id" : "IdString"

}

Opens the camera shutter.

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Close shutter

Message:

{

"Command" : "CloseShutter",

"CameraId" : 0, // Optional if one (1) camera, mandatory if more than 1 camera

"Id" : "IdString"

}

Closes the camera shutter.

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Take dark reference

Message:

{

"Command" : "TakeDarkReference",

"CameraId" : 0, // Optional if one (1) camera, mandatory if more than 1 camera

"Id" : "IdString"

}

If the camera “SimulatorCamera” is used (see command Initialize camera), the camera state must be set to DarkReference first: either use the command CloseShutter, or use the command SetCameraProperty to set State to DarkReference. When the shutter is opened, the state is set to Normal.

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Dark reference quality control

Automatic dark reference quality control checks:

- The standard deviation between lines is lower than 5%
- The average signal relative to max signal is lower than 50%

Invalid dark reference reply example:

Reply:

{

"Id" : "IdString",

"Success" : false,

"Code" : 1006,

"Error" : "InvalidDarkReference",

"Message" : "Variation over lines is higher than 5%"

}

Take white reference

Message:

{

"Command" : "TakeWhiteReference",

"Id" : "IdString"

}

If the camera “SimulatorCamera” is used (see command Initialize Camera), the camera property State must be set to WhiteReference first, using the command SetCameraProperty. After the reference is taken the state can be set to Normal.

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : "Type=WhiteReferenceQuality;State=Good;Message=;StderrLines=0.00024;StderrPixels=0.01095;Min=38189;Mean=48029.7;Median=49055;Max=52960;Std=3717.01;StdError=0.07739;SaturatedPixels=25;TotalSaturated=2"

}

The Message field contains a semicolon-delimited string of white reference quality metrics:

- Type – identifies the type of quality report (WhiteReferenceQuality).
- State – overall evaluation of the reference quality (Good, Warning, Error).
- Message – optional message with additional context (empty in this case).
- StderrLines – standard error calculated across line intensities.
- StderrPixels – standard error calculated across pixel intensities.
- Min – minimum intensity value observed.
- Mean – average intensity value across all pixels.
- Median – median intensity value across all pixels.
- Max – maximum intensity across all pixels.
- Std – standard deviation of intensity values (spread/variance).
- StdError – overall standard error of the mean.
- SaturatedPixels – number of pixels at maximum measurable intensity in a single line/frame.
- TotalSaturated – total number of frames/lines containing saturation events.
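Since the quality report is a flat key=value list, a client can split it into a dictionary. The following is a minimal sketch; the function name `parse_quality_message` is hypothetical, and it assumes that values never contain a semicolon.

```python
def parse_quality_message(message: str) -> dict:
    """Split 'Key=Value;Key=Value' into a dict, converting numbers to float."""
    result = {}
    for item in message.split(";"):
        if not item:
            continue  # tolerate a trailing semicolon
        key, _, value = item.partition("=")
        try:
            result[key] = float(value)
        except ValueError:
            result[key] = value  # non-numeric (e.g. State) or empty value
    return result


reply_message = (
    "Type=WhiteReferenceQuality;State=Good;Message=;StderrLines=0.00024;"
    "StderrPixels=0.01095;Min=38189;Mean=48029.7;Median=49055;Max=52960;"
    "Std=3717.01;StdError=0.07739;SaturatedPixels=25;TotalSaturated=2"
)
quality = parse_quality_message(reply_message)
```

With the example reply above, `quality["State"]` is the string "Good" and `quality["Mean"]` is the float 48029.7.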

White reference quality control

Automatic white reference quality control checks:

- The standard deviation between lines is lower than 5%
- The average signal relative to max signal is lower than 99%
- The average signal relative to max signal is higher than 50%
- The standard deviation between average pixels is lower than 5%

10% of the border pixels on each side are not included in the calculation.

Invalid white reference reply example:

Reply:

{

"Id" : "IdString",

"Success" : false,

"Code" : 1007,

"Error" : "InvalidWhiteReference",

"Message" : "White reference less than 50% of max signal"

}

Get workflow setup

Should be called to retrieve prediction setup from the active workflow, which another client loaded.

Message:

{

"Command" : "GetWorkflowSetup",

"Id" : "IdString"

}

Reply:

See command LoadWorkflow.

Start predict

Message:

{

"Command" : "StartPredict",

"Id" : "IdString",

"SendPredictionLines" : true,

"SendPredictionObjects" : true,

"IncludeObjectShape" : true,

"FrameCount" : -1

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Parameters:

- SendPredictionLines (optional, defaults to true): whether prediction data is sent in the Data stream.
- SendPredictionObjects (optional, defaults to true): whether object information is sent in the Event Stream.
- IncludeObjectShape (optional, defaults to false): whether the start y offset, object center, and border coordinates are included with the object identified event.
- FrameCount: the number of frames to capture. If -1, the Runtime will capture until stopped with StopPredict.

The identified objects are sent over the event stream (please see section Event Stream). This is an example object:

{

"Event" : "PredictionObject",

"Code" : 4000,

"Message" : "%7B......"

}

PredictionObject

Message field un-escaped and indented:

{

"Id": "9a327679-12cd-4442-996b-8268d24a5e50",

"CameraId": 0,

"SegmentationId": "dc5bae5e",

"StartTime": 638919780939627360,

"EndTime": 638919780951329271,

"StartLine": 2001,

"EndLine": 2500,

"Children": [],

"Descriptors":

[

1.0,

45.4867249,

47.89075,

6.40655136

],

"Shape":

{

"Center": [968, 249],

"Border":

[

[0, 0],

[1936, 0],

[1936, 499],

[0, 499]

]

}

}
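A client has to unescape the Message field before it can read the object. The sketch below assumes that the field is percent-encoded JSON, which the leading "%7B" (the percent-encoded form of "{") suggests, and that StartTime and EndTime are counted in 100-nanosecond units. Both are assumptions, and the function names are hypothetical.

```python
import json
from urllib.parse import unquote


def decode_prediction_object(event: dict) -> dict:
    """Unescape the Message field of a PredictionObject event and parse it."""
    # Assumption: Message is percent-encoded JSON ("%7B" decodes to "{").
    return json.loads(unquote(event["Message"]))


def object_duration_seconds(obj: dict) -> float:
    """Time between StartTime and EndTime, assuming 100-nanosecond units."""
    return (obj["EndTime"] - obj["StartTime"]) / 10_000_000
```

For the example object above, the duration works out to roughly 1.17 seconds under that assumption.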

Stop predict

Message:

{

"Command" : "StopPredict",

"Id" : "IdString"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Get status

Message:

{

"Command" : "GetStatus",

"Id" : "IdString"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" :

"{\"State\": \"Predicting\",

\"WorkflowId\": \"abcde\",

\"CameraType\": \"MWIR\",

\"FrameRate\": 200.0, // Hz

\"IntegrationTime\": 1500.0, // µs

\"Temperature\": 293.15, // K

\"DarkReferenceValidTime\": 4482.39258, // s

\"WhiteReferenceValidTime\": 9483.392, // s

\"LicenseExpiryDate\": \"2018-12-31\", // yyyy-MM-dd

\"SystemTime\": 636761502932108549,

\"SystemTimeFormat\": \"Utc100NanoSeconds\"}"

}

- The original data type for SystemTime is an unsigned 64-bit integer.

States:

- Idle
- LoadingWorkflow
- Predicting
- StoppingPrediction
- CapturingRawPixelLines
- CapturingMultipleFrames

The field Temperature is the camera sensor temperature in kelvin. Degrees Celsius = Temperature - 273.15. Degrees Fahrenheit = Temperature × 1.8 - 459.67.
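The status reply can be unpacked and converted on the client side as sketched below. Two assumptions are made here: real replies carry the Message as plain JSON without the explanatory // comments shown in the example, and SystemTime with format Utc100NanoSeconds counts 100-nanosecond intervals since 00:00:00 UTC on January 1, 0001. The function names are hypothetical.

```python
import json
from datetime import datetime, timedelta, timezone


def parse_status(reply: dict) -> dict:
    """The Message field of a GetStatus reply is itself a JSON document."""
    return json.loads(reply["Message"])


def kelvin_to_celsius(kelvin: float) -> float:
    return kelvin - 273.15


def kelvin_to_fahrenheit(kelvin: float) -> float:
    return kelvin * 1.8 - 459.67


def ticks_to_datetime(ticks: int) -> datetime:
    """Convert 100 ns ticks to a UTC datetime (assumed epoch: 0001-01-01)."""
    epoch = datetime(1, 1, 1, tzinfo=timezone.utc)
    # datetime has microsecond resolution, so the last decimal digit is dropped.
    return epoch + timedelta(microseconds=ticks // 10)
```

Under that epoch assumption, the example SystemTime 636761502932108549 corresponds to 26 October 2018, 11:31:33 UTC.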

Get property

Message:

{

"Command" : "GetProperty",

"Id" : "IdString",

"Property" : "PropertyString",

//Optional:

"NodeId" : "NodeIdString",

"Name" : "FieldName"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : "result string"

}

The field NodeId can be used to get property information from a node in the analysis tree. If it is not specified then workflow global properties will be fetched.

Available Properties are:

- Version
- State
- WorkspacePath
- WorkflowId
- DarkReferenceValidTime
- WhiteReferenceValidTime
- DarkReferenceFile
- WhiteReferenceFile
- LicenseExpiryDate
- SystemTime
- SystemTimeFormat
- PredictorThreads
  - Returns the number of threads used in prediction.
  - Default -1 = number of available virtual CPU cores
- AvailableCameraProviders
  - Returns a semicolon-separated list of available providers
- Fields
  - Returns a semicolon-separated list of workflow fields
- FieldValue
  - Requires "Name"
  - Workflow field value

Example 1

Message:

{

"Command" : "GetProperty",

"Id" : "1",

"Property" : "Version"

}

Reply:

{

"Id" : "1",

"Success" : true,

"Message" : "2021.1.0.999 Release"

}

Example 2

Message:

{

"Command" : "GetProperty",

"Id" : "2",

"Property" : "FieldValue",

"Name" : "Voltage"

}

Reply:

{

"Id" : "2",

"Success" : true,

"Message" : "100"

}

Set property

Message:

{

"Command" : "SetProperty",

"Id" : "IdString",

"Property" : "PropertyString",

"Value" : "ValueString"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : "result string"

}

Available Properties to set are:

- PredictorThreads
  - The number of threads used in prediction.
  - Default = -1 (number of available virtual CPU cores)

Re-Initialize prediction

If there is a camera problem during prediction, the Initialize command can be called to reconnect the camera and, if successful, start the prediction again. The Tries parameter sets the number of attempts made before the error code CameraNotStable (1009) is returned.

Re-Initialize command sequence order

- If predicting before the Re-Initialize command was called
  - StopPredict
- For a number of tries until the camera is initialized
  - DisconnectCamera
  - InitializeCamera
- If predicting before the Re-Initialize command was called
  - LoadWorkflow (same workflow as loaded before)
  - StartPredict

Message:

{

"Command" : "Initialize",

"Id" : "IdString",

"Tries" : 10,

"TimeBetweenTrialSec" : 10

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Start capture on predict

Starts capturing measurements while predicting. The measurements are stored in the folder:

{Breeze workspace folder}/Data/Runtime/Measurements/{date}

Capture folders are named by the date on which the capture was started. The recorded measurements stored in that folder are split according to the MaxFrameCount argument. The measurements are named “Measurement_1”, “Measurement_2”, ..., “Measurement_N”.

Message:

{

"Command" : "StartCaptureOnPredict",

"Id" : "IdString",

"Name" : "Name of measurements",

"MaxFrameCount" : 1000, // Max number of frames per measurement

"Object" : true | false, // If data should be saved only when objects are identified

"PredictionRaw" : true | false, // If prediction data should be saved when available (per object or MaxFrameCount)

"Thumbnail" : true | false // If png|jpg thumbnail image should be saved

}

Parameters

Object

- When segmentation is enabled, setting "Object": true ensures that measurements are captured only when an object is detected.
- If no object is detected, the corresponding image lines are skipped and not written to disk, reducing storage usage.

PredictionRaw

- Controls whether a prediction ENVI RAW file should be generated. Two files will be generated in the same folder as the measurement files:
  - measurement_prediction.raw
  - measurement_prediction.hdr
- If no predictions are available in the Analyse Tree, no RAW files will be created.
- Can be combined with "Object": true to generate prediction data only for segmented objects.
- If "Object" is not set, then "MaxFrameCount" is used.
  - This requires an Analyse Tree that is valid at the measurement level to generate data.

Thumbnail

- Generates a thumbnail image in .png or .jpg format (depending on image size).
- Can be combined with "Object": true to generate thumbnails only for segmented objects.
- If "Object" is not set, then "MaxFrameCount" is used and thumbnails are generated for all frames.

The output format is selected as follows:

imageSize = height × width × 4

if (imageSize < 100,000 OR height ≥ max_ushort) then

use PNG

else

use JPG
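The selection rule above can be written as a small function, for example to predict which file extension to expect. This is a sketch, not Runtime code: the function name is hypothetical, and max_ushort is taken to be 65535, the largest unsigned 16-bit value.

```python
MAX_USHORT = 65_535  # largest unsigned 16-bit integer


def thumbnail_format(height: int, width: int) -> str:
    """Return "PNG" or "JPG" following the documented selection rule."""
    image_size = height * width * 4
    if image_size < 100_000 or height >= MAX_USHORT:
        return "PNG"
    return "JPG"
```

A 100 × 100 thumbnail (40,000 bytes) would be saved as PNG, while a 500 × 1936 thumbnail would be saved as JPG.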

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Stop capture on predict

Message:

{

"Command" : "StopCaptureOnPredict",

"Id" : "IdString"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Apply changes on ENVI image files

Applies a specific Breeze Runtime workflow to ENVI image files on disk (with extension raw, bil, bip, bsq or img) to create pixel predictions ([FILE_NAME]_prediction.raw), a measurement thumbnail ([FILE_NAME].jpg) and the descriptor results ([FILE_NAME].xml).

The output files are saved in the measurement’s source folder, unless you provide a full path to an output folder using the OutputFolder parameter. The output folder will be created if it does not exist. Setting OutputFolder to “null” or null puts the output in the source measurement’s folder.

Each measurement folder must contain dark reference (darkref_[FILE_NAME].raw) and white reference (whiteref_[FILE_NAME].raw) if the raw data should be converted to reflectance or absorbance.

Message:

{

"Command" : "ApplyChanges",

"Id" : "IdString",

"XmlFile" : "{path to Runtime workflow}/workflow.xml",

"Files" : [

"{path to ENVI image file 1}",

"{path to ENVI image file 2}",

"{path to ENVI image file N}"

],

"OutputFolder": "{path to optional output directory}"

}

Reply:

{

"Id" : "IdString",

"Success" : true,

"Message" : ""

}

Error Handling and Events

Here is some additional information regarding error formats and responses.

Error Types

Errors can occur in two ways:

- Command Errors: sent as part of a command reply when that command fails.
- Event Errors: sent via the event stream when something goes wrong server-side (e.g. during prediction or capture).

1. Command Errors

When a command fails:

- Success
  - Type: boolean
  - Value: false
- Error
  - Type: string
  - Description: A short error code
- Code
  - Type: integer
  - Description: A numeric error code
- Message
  - Type: string
  - Description: A human-readable description of what went wrong

Example reply:

{

"Id" : "IdString",

"Success" : false,

"Message" : "Camera is not initialized",

"Error" : "GeneralError",

"Code" : 3000

}
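On the client side, a failed reply can be turned into an exception so that errors are not silently ignored. The following is a minimal sketch; the class `BreezeCommandError` and the function `raise_for_error` are hypothetical names, not part of any Breeze library.

```python
class BreezeCommandError(Exception):
    """Raised when a command reply has Success set to false."""

    def __init__(self, error, code, message):
        super().__init__(f"{error} ({code}): {message}")
        self.error = error      # short error name, e.g. "GeneralError"
        self.code = code        # numeric error code
        self.message = message  # human-readable description


def raise_for_error(reply: dict) -> dict:
    """Return the reply unchanged if it succeeded, otherwise raise."""
    if reply.get("Success"):
        return reply
    raise BreezeCommandError(
        reply.get("Error"), reply.get("Code"), reply.get("Message", "")
    )
```

Calling `raise_for_error` on every reply keeps the error handling in one place and preserves the Error, Code and Message fields for logging.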

When an error occurs on the server side during prediction or capture, the error information is sent via the event stream. Log files can be found in the directory “%USERPROFILE%\.Prediktera\Breeze”, e.g. “C:\Users\<user name>\.Prediktera\Breeze”.

2. Event Stream

When an error occurs server-side (for example, during prediction or capture), it’s sent as an event rather than a command response. To investigate these errors in detail, check the log files at:

%USERPROFILE%\.Prediktera\Breeze

– for example

C:\Users\<user name>\.Prediktera\Breeze

These logs contain full stack traces and diagnostic details for any event-stream errors.

Learn more in Breeze log files and troubleshooting.

Command Error Codes

These will be sent in response to a failed command.

| Error Code | Constant | Description |
|---|---|---|
| 1000 | GeneralCommandError | General command error. Message field gives more details. |
| 3001 | UnknownError | Unexpected error in runtime command call. Stack trace gives more details. |
| 1002 | InvalidLicense | Sent on StartCapture if no valid license is found for Breeze. Sent on StartPredict if no valid license is found for BreezeAPI. |
| 1003 | MissingReferences | Sent on StartPredict if references are needed and both references are missing. |
| 1004 | MissingDarkReference | Sent on StartPredict if references are needed and dark reference is missing. |
| 1005 | MissingWhiteReference | Sent on StartPredict if references are needed and white reference is missing. |
| 1006 | InvalidDarkReference | Sent on TakeDarkReference if the captured dark reference is not good enough. Message will include a detailed explanation. |
| 1007 | InvalidWhiteReference | Sent on TakeWhiteReference if the captured white reference is not good enough. Message will include a detailed explanation. |
| 1008 | MissingDarkReferenceFile | Sent on TakeWhiteReference if white reference intensity is used and there is no dark reference file to read. |
| 1009 | CameraNotStable | Sent on Initialize when the prediction cannot be reinitialized after the given number of tries. |

Event Stream

Events and errors that do not belong to a command will be sent over TCP/IP on port 2500. The message format is unindented JSON ended with CR + LF (ASCII 13 + ASCII 10). All errors, except those in command responses, will also be sent over this channel.

Regular Event Codes

These events can be sent during startup, prediction, and capture.

Example

Message:

{

"Event" : "DarkReferenceOutdated",

"Code" : 2001

}

| Event Code | Constant | Description |
|---|---|---|
| 2000 | reserved for event server | |
| 2001 | DarkReferenceOutdated | Dark reference is outdated |
| 2002 | WhiteReferenceOutdated | White reference is outdated |
| 2003 | WorkflowListChanged | Workflow in Runtime folder has been changed, removed or added |

Error Event Codes

These events can be sent during startup, prediction, and capture. An error event will have the field Event = “Error” and the field “Error” specifies the name of the error.

Example

Message:

{

"Event" : "Error",

"Error" : "UnknownError",

"Message" : "<message>",

"Code" : 3001,

"StackTrace": "At RtPredict.cs:281..."

}
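A client reading the event stream has to split the incoming bytes on CR + LF and tell error events apart from regular ones. The sketch below shows one way to do this; the function names are hypothetical and UTF-8 encoding is an assumption.

```python
import json


def split_events(buffer: bytes):
    """Return (complete events, leftover bytes) from a receive buffer."""
    *lines, rest = buffer.split(b"\r\n")
    events = [json.loads(line.decode("utf-8")) for line in lines if line]
    return events, rest  # rest is an incomplete event, kept for the next read


def is_error_event(event: dict) -> bool:
    """Error events carry Event = "Error" and name the error in "Error"."""
    return event.get("Event") == "Error"
```

The leftover bytes should be prepended to the next chunk read from the socket, since a TCP read can end in the middle of an event.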

| Error Code | Constant | Description |
|---|---|---|
| 3000 | | General error. Message field gives more details. |
| 3001 | | Unexpected error during prediction or capture. Message will include error details. |
| 3002 | | Forwards error code generated by camera supplier. A new field CameraErrorCode will be present in event. |
| 3003 | | Prediction frame queue overflow. Please use a lower camera frame rate. |

Prediction Object

| Event Code | Constant | Description |
|---|---|---|
| 4000 | | The Runtime has identified an object. Its properties can be found in the message field as escaped JSON. See Start Predict for details. |
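Because the object properties arrive as escaped JSON inside the event, a client has to decode twice: once for the event and once for the embedded string. The sketch below illustrates this under stated assumptions: the field is taken to be named "Message" (as in the error event example), the function name is hypothetical, and the inner keys in the usage example are invented placeholders rather than the actual Start Predict properties.

```python
import json


def decode_prediction_object(raw_line: str) -> dict:
    """Return the object properties carried by a code 4000 event.

    Assumption: the escaped JSON is stored in a field named "Message".
    """
    event = json.loads(raw_line)          # first pass: the event itself
    if event.get("Code") != 4000:
        raise ValueError("not a prediction object event")
    return json.loads(event["Message"])   # second pass: the escaped payload


# Usage with a made-up payload; the inner keys are placeholders only.
sample = json.dumps({"Code": 4000, "Message": json.dumps({"ExampleKey": 7})})
properties = decode_prediction_object(sample)
```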

Data Stream

The data stream from the server is sent over TCP/IP. By default, the port is 3000 but you can change it with the InitializeCamera command. A client connects to this port and reads output data from the Runtime for all pixels, line by line.

A client that connects to the data stream must keep up with the data flow from the Runtime. Otherwise, the Runtime’s send queue can overflow, which results in lost data, a SendQueueOverflow error sent to the client on the event stream, and warnings in the Runtime log. A client that is not interested in the data must therefore still read it, or else close the connection to the Runtime.

All integers and floats in the stream use little-endian encoding.

Stream Header

Each packet begins with a fixed-length header:

[StreamType][FrameNumber][Timestamp][MetadataSize][DataBodySize]

- StreamType (1 byte)
  - 1 = Raw Pixel Line
  - 2 = Prediction Lines
  - 3 = RGB Pixel Line
  - 4 = StreamStarted / EndOfStream
- FrameNumber (64-bit integer): Frame number assigned by the camera.
- Timestamp (64-bit integer): Number of 100-nanosecond intervals since 00:00:00 UTC on January 1, 0001.
- MetadataSize (32-bit integer): Byte count of the metadata body.
- DataBodySize (32-bit integer): Byte count of the data body.
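The header fields listed above add up to 25 bytes (1 + 8 + 8 + 4 + 4). A possible way to unpack them is sketched below. The names `StreamHeader`, `parse_header` and `ticks_to_datetime` are hypothetical, and two things are assumed because the source does not state them: that the fields are packed without padding, and that the integers are signed.

```python
import struct
from datetime import datetime, timedelta
from typing import NamedTuple

# "<" selects little-endian with no padding: 1 + 8 + 8 + 4 + 4 = 25 bytes.
# Assumption: signed integers; the source does not specify signedness.
HEADER = struct.Struct("<Bqqii")


class StreamHeader(NamedTuple):
    stream_type: int
    frame_number: int
    timestamp_ticks: int
    metadata_size: int
    data_body_size: int


def parse_header(data: bytes) -> StreamHeader:
    """Unpack the fixed-length header from the start of a packet."""
    return StreamHeader(*HEADER.unpack(data[:HEADER.size]))


def ticks_to_datetime(ticks: int) -> datetime:
    """Convert 100-nanosecond intervals since 0001-01-01 to a naive datetime.

    Python datetimes have microsecond resolution, so the last digit is dropped.
    """
    return datetime(1, 1, 1) + timedelta(microseconds=ticks // 10)
```

After the header, a client would read `metadata_size` bytes of metadata followed by `data_body_size` bytes of data before expecting the next header.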

Metadata body

Immediately following the header, the metadata body contains four 32-bit integer fields, each measured in 100-nanosecond units:

- CameraProcessingTime
- CameraDeltaTimeToPreviousFrame
- BreezeProcessingTime
- BreezeDeltaTimeToPreviousFrame
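The four metadata fields can be unpacked in one call. This sketch assumes that the fields appear in the order listed above and that they are signed; neither is confirmed by the source. The function names are hypothetical.

```python
import struct

# Four little-endian 32-bit integers = 16 bytes.
# Assumptions: signed values, in the order the fields are listed.
METADATA = struct.Struct("<4i")

METADATA_FIELDS = (
    "CameraProcessingTime",
    "CameraDeltaTimeToPreviousFrame",
    "BreezeProcessingTime",
    "BreezeDeltaTimeToPreviousFrame",
)


def parse_metadata(body: bytes) -> dict:
    """Map each metadata field name to its value in 100-nanosecond units."""
    return dict(zip(METADATA_FIELDS, METADATA.unpack(body[:METADATA.size])))


def to_milliseconds(units_100ns: int) -> float:
    """Convert 100-nanosecond units to milliseconds (10,000 units per ms)."""
    return units_100ns / 10_000
```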

Data body

The contents of the data body depend on StreamType:

1. Raw Pixel Line

- Description: Raw bytes received directly from the camera.
- Header Timestamp: Time when the raw data arrived.

This stream type is used when a Test Scan is run from Settings in Breeze desktop, or when the Start capture Runtime command is used.

2. Prediction Lines

- Header Timestamp: Time when the raw frame data arrived from the camera.
- Layout: One line per array, matching the LineWidth from the LoadWorkflow reply.
- Components:
  - Classification Vector (byte array)
    - Data Type: Byte
    - Range: 0 (No class) to 255 (class index)
    - Example 1: [0,0,0,0,1,1,1,1,0,0,0,2,2,2,2,0,0,0,0,0,3,3,3,0,0,…]
    - Example 2 (sample vector): [0,0,1,1,1,0,1,1,0,0,0,1,1,1,1,0,0,0,0,0,1,1,1,0,0,…]
  - Quantification Vector (32-bit float array)
    - Range: –MinFloat to +MaxFloat
    - Example: [0,0,0,0,4.5,5.2,3.8,6.5,0,0,0,…]
  - Confidence Values (byte array)
    - Range: 5 (High) to 1 (Low), or 0 if not part of the model
    - Example: [0,0,0,0,3,3,3,3,0,0,0,5,5,5,5,0,0,0,0,0,1,1,1,0,0,…]
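As an illustration of the element types above, the following sketch decodes a single vector once its bytes have been isolated. It deliberately does not show how several vectors are arranged within one data body, since the source does not specify that. The function names are hypothetical.

```python
import struct


def decode_byte_vector(data: bytes) -> list:
    """Decode a classification or confidence vector: one value per byte."""
    return list(data)


def decode_quantification(data: bytes) -> list:
    """Decode a quantification vector of little-endian 32-bit floats."""
    count = len(data) // 4
    return list(struct.unpack(f"<{count}f", data[: count * 4]))


# Usage with hand-built sample data.
classes = decode_byte_vector(bytes([0, 0, 1, 1, 2]))
values = decode_quantification(struct.pack("<3f", 0.0, 4.5, 5.25))
```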

3. RGB Pixel Line

- Description: Color pixel data.
- Data Body: Triplets of bytes for red, green and blue.
- Range: 0–255 per channel
- Example: [50,100,150], [50,45,55], [85,65,255], …

RGB pixel lines correspond to the real-time visualization in Breeze Recorder and Breeze Client, where the toolbar selects which variable is shown. To change the visualization variable or background blend programmatically, use SetProperty as described under Runtime Configuration below: https://prediktera.atlassian.net/wiki/spaces/BR/pages/564200816/Developers+reference+guide#RuntimeConfigurationVariable
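An RGB data body can be grouped into per-pixel triplets as sketched below. The function name is hypothetical, and rejecting a body whose length is not a multiple of three is a choice made for this example rather than documented Runtime behavior.

```python
def decode_rgb_line(body: bytes) -> list:
    """Group an RGB data body into (red, green, blue) tuples, one per pixel."""
    if len(body) % 3 != 0:
        raise ValueError("RGB data body length must be a multiple of 3")
    return [tuple(body[i:i + 3]) for i in range(0, len(body), 3)]


# Usage with the first two pixels from the example above.
pixels = decode_rgb_line(bytes([50, 100, 150, 50, 45, 55]))
```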

4. Stream Start / End

- Header Timestamp: Time when the control message was sent.
- Data Body: ASCII strings, either
  - StreamStarted
  - EndOfStream

These packets are sent following the commands StartPredict and StopPredict, respectively.

Runtime Configuration

You can adjust visualization settings at runtime with the SetProperty command:

SetProperty("Property" = "VisualizationVariable", "Value" = "<Raw | Reflectance | Absorbance | Descriptor names>")

SetProperty("Property" = "VisualizationBlend", "Value" = "True or False")

- VisualizationVariable: Selects which data channel to display.
- VisualizationBlend: Enables or disables blending mode.

CameraId can be added to both visualization settings.
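The following sketch builds a SetProperty message for the command channel. The JSON shape is an assumption: it mirrors the "Command" / "Id" layout of the other commands in this guide and places "Property", "Value" and the optional "CameraId" at the top level, which should be checked against the SetProperty command reference. The function name and the default Id are hypothetical.

```python
import json


def build_set_property(property_name, value, message_id="IdString", camera_id=None) -> bytes:
    """Build an unindented, CR + LF terminated SetProperty message.

    Assumption: the message uses the same top-level layout as other commands.
    """
    message = {
        "Command": "SetProperty",
        "Id": message_id,
        "Property": property_name,
        "Value": value,
    }
    if camera_id is not None:
        message["CameraId"] = camera_id  # optional, per the note above
    # Compact separators keep the JSON on one line without extra whitespace.
    return (json.dumps(message, separators=(",", ":")) + "\r\n").encode("ascii")


# Usage: select reflectance for visualization and enable blending.
variable_msg = build_set_property("VisualizationVariable", "Reflectance")
blend_msg = build_set_property("VisualizationBlend", "True")
```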

Runtime Command Switches

| Switch | Description |
|---|---|
| e.g. " | Set number of calculation threads |
| e.g. /w:"C:\temp\My Folder" | Override current workspace path |
| /e | Execute BreezeRuntime as EventServer (see Event Stream) |
| e.g., | Override minimum log level for console logging |
| e.g., | Log session id |

Running Breeze Runtime with Breeze Runtime Client

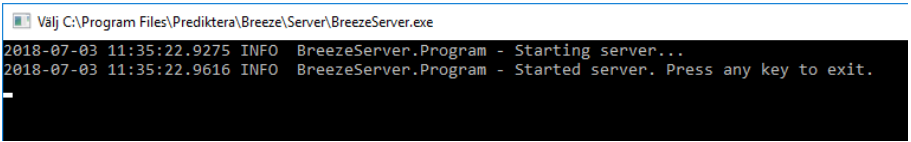

1. Start “BreezeRuntime.exe”. Note: It is located under “C:\Program Files\Prediktera\Breeze\Runtime”. If the optional event server is used (see Event Stream), start “BreezeRuntime.exe /e” instead.

2. Start “BreezeClient.exe” from the Start menu

3. Press the “Connect” button.

4. Select workflow exported from Breeze and press “Load”

5. Start predicting on the loaded workflow by pressing “Start”

6. View real-time predictions under the “Realtime” tab

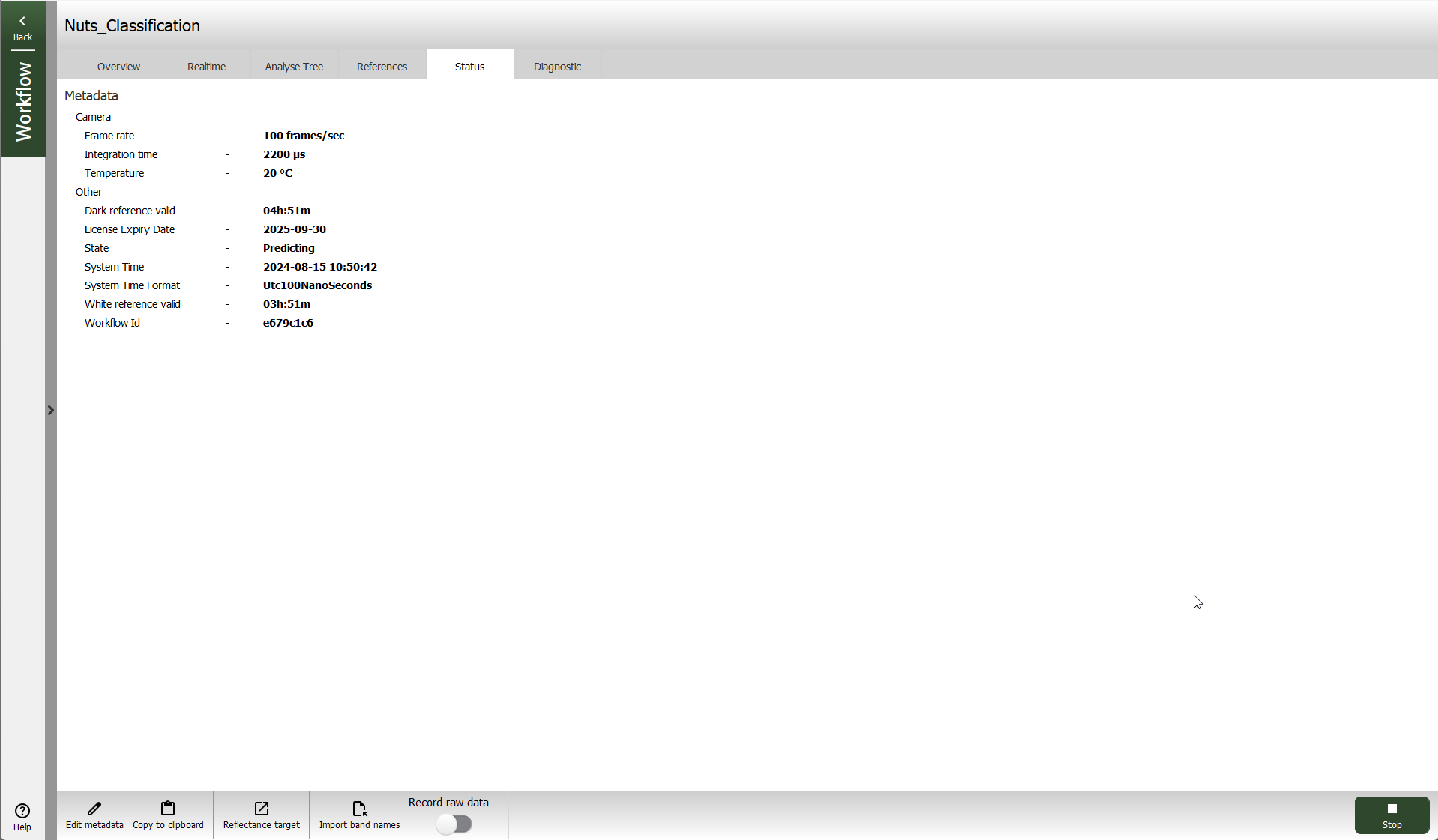

7. View Breeze Runtime status under the “Status” tab